Logs and Reports

Send Exabeam Cloud Connectors Logs to Exabeam Support

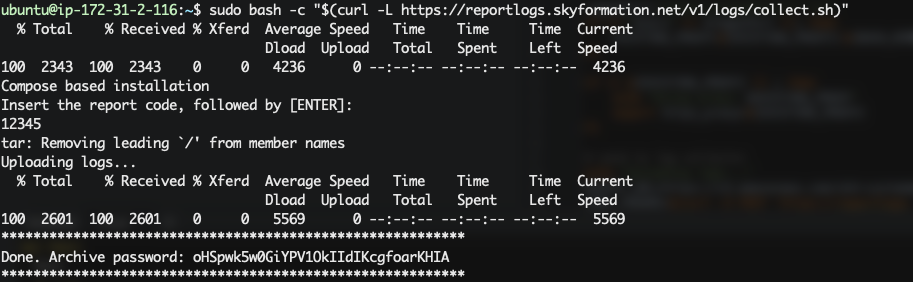

If you need assistance debugging or diagnosing an issue with Exabeam Cloud Connectors, you can use a script to collect and send your log files to Exabeam Support.

With a single command, the script does the following:

- Creates an archive of all Exabeam Cloud Connectors logs
- Creates an encryption password
- Encrypts the archive using the encryption password
- Securely uploads the encrypted archive to the Exabeam Cloud Connectors diagnostic cloud service using SSL

Note

Before using the script, contact Exabeam Support so they can coordinate the log collection. The Exabeam Cloud Connectors logs collected by the script are sent to the Exabeam Support service at: https://report.skyformation.net. During this process, you must share the encryption key and password with Exabeam Support so that the support engineer can access the logs sent to the service.

To send your logs:

SSH into the Linux machine that hosts the Exabeam Cloud Connectors app with an account that has root-level permissions.

Run the command for your deployment:

Without a proxy

sudo bash -c "$(curl -L https://reportlogs.skyformation.net/v1/logs/collect.sh)"

With a proxy

sudo bash -c "$(curl -x https://proxyserver:8080 -L https://reportlogs.skyformation.net/v1/logs/collect.sh)"

When prompted for a report code, enter the code provided by Exabeam Support (usually the ticket number, for example 12345).

Note the password created for the encryption of the logs archive.

Notify Exabeam Support that a diagnostic file was uploaded and provide the password for the logs archive.

Support will analyze your logs and help diagnose any issues.

Enable Remote Monitoring for on-Premise Deployments

By enabling remote monitoring, Exabeam can monitor the health of your Cloud Connectors and help to resolve any issues.

In a text editor, open the following configuration file: /opt/exabeam/config/common/cloud-connection-service/custom/application.conf

Add the following setting with the Cloud Connector IP address:

cloud.plugin."Telemetry".config.telemetryCheckEndPoint += "http://[Cloud_Connector_IP_ADDRESS]:8445/prom/telemetry"

The following is an example of the application.conf file with the remote monitoring setting added:

    include "../default/application_default.conf"
    cloud.plugin."Telemetry".config.telemetryCheckEndPoint += "http://[Cloud_Connector_IP_ADDRESS]:8445/prom/telemetry"
    cloud {
      service {
        cloud {
          // Http client proxy settings
          # proxyHost = "proxy-host"
          # proxyPort = 9000
          // Optional, default "http"
          # proxyProtocol = "http" | "https"
          // Optional
          # proxyRealm = "realm"
          // Proxy authentication scheme the credentials apply to,
          // if scheme is not defined and proxyUsername and proxyPassword are defined
          // the scheme is set as "basic", otherwise proxy authentication is disabled
          # proxyScheme = "basic" or "ntlm" or "digest"
          // -- 1. Credentials for proxyScheme = "basic" or "digest"
          // After the first read time the value is replaced onto encrypted
          # proxyUsername = "user"
          // After the first read time the value is replaced onto encrypted
          # proxyPassword = "pass"
          // -- 2. Credentials for proxyScheme = "ntlm"
          // After the first read time the value is replaced onto encrypted
          # proxyUsername = "user"
          // After the first read time the value is replaced onto encrypted
          # proxyPassword = "pass"
          // optional, the host the authentication request is originating from
          # proxyWorkstation = "workstation"
          // optional, the domain to authenticate within
          # proxyDomain = "domain"
        }
      }
    }

Restart the cloud connection service.
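As a minimal sketch of the setting described above, the following shell helpers print the telemetry line for a given IP address and check whether a configuration file already mentions the setting. The function names `telemetry_setting` and `has_telemetry_setting` and the address 10.0.0.5 are illustrative placeholders, not part of the product.

```shell
#!/usr/bin/env bash
# Illustrative helpers only; the function names and the sample IP address are assumptions.

# Print the remote monitoring setting for the Cloud Connector IP address given as $1.
telemetry_setting() {
  printf 'cloud.plugin."Telemetry".config.telemetryCheckEndPoint += "http://%s:8445/prom/telemetry"\n' "$1"
}

# Succeed if the file given as $1 contains the text telemetryCheckEndPoint.
# Note: this also matches a commented-out line, so treat it as a quick check only.
has_telemetry_setting() {
  grep -qF 'telemetryCheckEndPoint' "$1"
}

# Example with a placeholder address; paste the printed line into application.conf.
telemetry_setting 10.0.0.5
```

Printing the line instead of editing application.conf in place keeps the sketch free of side effects; you still add the line to the file yourself and restart the service afterward.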

Opt Out of Health Reporting

To provide health reporting, Exabeam collects the following metadata from customers:

- The version installed on the system and license information (which modules are included in the license).
- General system health status, for example, the memory used by Exabeam and the number of threads.
- For each sync that pulls events, the service from which the events were pulled, the endpoint, the result count, and the status.

This data is very helpful for problem investigation. Exabeam provides monitoring functions that can automatically detect problems, which enables Exabeam to proactively alert you about any issues. For this reason, Exabeam recommends keeping health reporting enabled.

Important

The health monitor does not collect any customer data and does not examine the contents of events.

To opt out of health reporting:

Have the relevant tenant ID on hand. For a single tenant, that is default-tenant-id.

On the system where Exabeam Cloud Connectors is installed, open a terminal and run the following command:

docker container exec -it sk4zookeeper bash

Change to the bin folder and run the zkCli.sh script.

    cd bin
    ./zkCli.sh

Create the health report settings.

/sk4/tenants/<your tenant>/settings/health-report-settings '{ "whitelisted-fqdns": [] }'

Verify that you can get to the health report settings by trying to get to /sk4/tenants/<your tenant>/settings/health-report-settings.

Quit zkCli and exit.
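The path and value used in the steps above can be sketched as follows. This is an illustration under stated assumptions: the helper names `health_report_path` and `zk_commands` are hypothetical, and the sketch assumes the node is written with zkCli's standard `create` command and read back with `get`. The helpers only print text for you to type at the zkCli prompt; they do not contact ZooKeeper.

```shell
#!/usr/bin/env bash
# Illustrative helpers only; the function names are assumptions, not part of the product.

# Print the settings node path for a tenant ID ($1, default: default-tenant-id).
health_report_path() {
  printf '/sk4/tenants/%s/settings/health-report-settings\n' "${1:-default-tenant-id}"
}

# Print the commands to type at the zkCli prompt for a tenant ID ($1).
# Assumes zkCli's standard "create" and "get" commands are the intended ones.
zk_commands() {
  path="$(health_report_path "$1")"
  printf "create %s '{ \"whitelisted-fqdns\": [] }'\n" "$path"
  printf 'get %s\n' "$path"
  printf 'quit\n'
}

# Single-tenant example.
zk_commands default-tenant-id
```

Building the path in one place helps ensure that the node you create and the node you verify are the same.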

Audit Logging for Exabeam Cloud Connectors

With Exabeam Cloud Connectors 2.5.114 and later releases, Exabeam logs administrative and system actions in the audit log.

The following table lists all the actions logged for each domain:

| Domain | Actions |
|---|---|
| account | create, update, delete, activate, deactivate, test-connection, reset, sync-now |
| account-endpoint | activate, deactivate |
| tenant | create, update, delete |
| siem | create, update, delete |
| raw-configuration | update |
| license | update |

The audit log is written to the same folder where all other Cloud Connectors logs are stored. You can identify the folder on the server hosting Exabeam Cloud Connectors using the following command: docker volume inspect --format='{{.Mountpoint}}' sk4_logs. The directory is typically either /opt/exabeam/data/sk4/logs/ or /var/lib/docker/volumes/sk4_logs/_data.

Message Structure

All logs are written in the following slf4j pattern:

%d{yyyy-MM-dd HH:mm:ss} %-5p %c{1}:%L - %m%n

The message itself follows the pattern: schema-version | user | action | result | args

For example:

2020-12-08 10:25:24 - 1.0 | sk4admin | account-endpoint:deactivate | failed | 2b1b63c7-6c66-446e-993b-6293a552501b ;; Stackdriver - [name: sink1]
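To illustrate the message structure, the following sketch splits one audit log line into its five fields. It assumes that the message starts after the first " - " on the line and that fields are separated by " | ", as in the example above; the function name `parse_audit_line` is hypothetical. If the args field itself contains " | ", this simple split keeps only the part before it.

```shell
#!/usr/bin/env bash
# Illustrative parser; the function name is an assumption.

# Print the five message fields of one audit log line ($1), one per line.
parse_audit_line() {
  # Strip everything up to the first " - ", which separates the log prefix from the message.
  message="${1#* - }"
  printf '%s\n' "$message" | awk -F' [|] ' '{
    printf "schema-version=%s\nuser=%s\naction=%s\nresult=%s\nargs=%s\n", $1, $2, $3, $4, $5
  }'
}

parse_audit_line '2020-12-08 10:25:24 - 1.0 | sk4admin | account-endpoint:deactivate | failed | 2b1b63c7-6c66-446e-993b-6293a552501b ;; Stackdriver - [name: sink1]'
```

For the sample line, the output includes `action=account-endpoint:deactivate` and `result=failed`, which makes it straightforward to filter the audit log for failed actions.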

Set Up a Syslog Receiver that Logs Locally and Forwards to a Remote Destination

In some situations, you may need to inspect the output of Exabeam Cloud Connectors while it continues to send logs to a remote syslog destination as usual.

To do this, you can use rsyslog, a syslog service that is installed by default on many Linux distributions. The setup writes to a local file at /var/log/sk4.log and uses a local syslog listener on TCP at <machine private ip>:5514.

After you configure the SIEM on Exabeam Cloud Connectors to send logs to this destination, the app stores events locally in this file and simultaneously forwards them to an external SIEM destination.

To set up a syslog receiver, you must have root-level access on the Linux server.

Save the following script into a local file, for example setup.sh.

    #!/usr/bin/env bash
    # setup rsyslog rule to split to both local file and to forward to another TCP destination

    while getopts "p:s:h" opt; do
      case $opt in
        h) HELPONLY=true ;;
        p) FWDPORT="$OPTARG" ;;
        s) FWDIP="$OPTARG" ;;
        \?) echo "Invalid option -$OPTARG" >&2 ;;
      esac
    done

    LOCAL_TARGET_FILE=/var/log/sk4.log

    print_help(){
      echo "usage: sudo ./setup.sh -s [IP to forward messages to. mandatory] -p [port to forward messages to]"
      echo "locally messages will be logged to $LOCAL_TARGET_FILE"
    }

    if [[ ${HELPONLY} == true ]]; then
      print_help
      exit 0;
    fi

    if [[ -z "$FWDIP" ]]; then
      echo "Target IP was not provided via -s flag. Aborting, here's the help:"
      print_help
      exit 1;
    fi

    if [[ -z "$FWDPORT" ]]; then
      echo "Target port was not provided via -p flag. Aborting, here's the help:"
      print_help
      exit 1;
    fi

    cat <<EOT > /etc/rsyslog.d/20-sk4-local-and-fwd.conf
    # Load Modules
    module(load="imtcp")
    module(load="omfwd")

    ruleset(name="sk4localandsplit"){
      # log locally
      action(type="omfile" File="/var/log/sk4.log")
      # forward to somewhere else
      action(type="omfwd" Target="$FWDIP" Port="$FWDPORT" Protocol="tcp")
      # stop propagating this message
      stop
    }

    input(type="imtcp" port="5514" ruleset="sk4localandsplit")
    EOT

    # ensure proper permissions to local file
    touch $LOCAL_TARGET_FILE
    chmod 666 $LOCAL_TARGET_FILE

    # apply by restarting rsyslog
    systemctl restart rsyslog

Change the permissions of the script to make it executable.

chmod +x setup.sh

Run the script with sudo and provide the target IP address and port of the syslog receiver to which you want to forward the messages. For example:

sudo ./setup.sh -s 1.2.3.4 -p 1514

Run the ifconfig command and inspect the output to locate the private IP address of the host.

Note the IP address and then refer to Add a SIEM to the Exabeam Cloud Connectors Platform. You will use the IP address to set up SIEM Integration to send logs to this local IP address and port 5514.

Reduce the Size of Events Sent to Exabeam Data Lake or Exabeam Advanced Analytics

Exabeam Cloud Connectors can send events to Exabeam Data Lake and Exabeam Advanced Analytics in two formats: CEF (default) and JSON. This is independent of the actual raw event format. The Exabeam Cloud Connectors JSON format wraps the raw event in JSON whereas the Exabeam Cloud Connectors CEF format wraps the raw log in CEF. When data is sent in CEF format, each event comprises three main parts:

Data that is extracted by the application from the event is set on select CEF fields, per event type. Not all data items are extracted, only ones that have security value and are used later for analysis. They are set on the CEF field as a placeholder and disregard the original meaning of the CEF field.

The raw event, as it was received by the application from the cloud vendor. In most cases, the raw event is a JSON object, but sometimes it is not. It is put on the CEF field “cs6”, i.e. Custom String 6.

The raw event flattened as key=value pairs.

When you send logs in CEF format and the raw log is in JSON format, you can reduce the size of events by removing components that you do not need.

Note

Because removing additional fields can break parsing for events forwarded to Advanced Analytics or Data Lake, this workflow is temporarily disabled. Future product releases will include default parsers that support the reduced volume configuration.

Decrease Kafka's Data Retention Period

In Kafka, the default retention period is 24 hours. With Exabeam Cloud Connectors 2.4 and later versions, if you run out of disk space because a large number of events are temporarily persisted and you can't allocate more space, you can clear the current Kafka content and ensure all events are pushed to the syslog receiver. To prevent this from recurring, you can also decrease Kafka's data retention period.

For the default Exabeam installation, SK4_ROOT_DIR is /opt/exabeam/data/sk4. For a non-default installation, the root directory is /opt/sk4.

To find the location of a volume, run the following command:

docker volume inspect <VOLUME_NAME>

Deactivate all accounts in the Exabeam Cloud Connectors web interface.

After a two-minute wait, stop the Exabeam Cloud Connectors server.

systemctl stop sk4compose

Delete content in the sk4_kafka_data volume.

Delete Kafka's offset tracking information in ZooKeeper.

    docker-compose -f <SK4_ROOT_DIR>/docker-compose.yml up -d zookeeper
    docker container exec -it sk4zookeeper zkCli.sh
    deleteall /sk4kafka
    quit
    docker-compose -f <SK4_ROOT_DIR>/docker-compose.yml down

Delete server.properties in the sk4_kafka_conf volume.

Change Kafka's data retention period by updating the KAFKA_LOG_RETENTION_MS environment variable in the <SK4_ROOT_DIR>/docker-compose.yml file (.services.kafka.environment[KAFKA_LOG_RETENTION_MS]).

Start the Exabeam Cloud Connectors server.

systemctl start sk4compose
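Because KAFKA_LOG_RETENTION_MS is expressed in milliseconds, it can help to compute the value from a retention period in hours. The following is a small sketch; the function name `hours_to_ms` and the 6-hour example are illustrative assumptions, not recommended values.

```shell
#!/usr/bin/env bash
# Illustrative helper; the function name is an assumption.

# Convert a retention period in whole hours ($1) to milliseconds.
hours_to_ms() {
  echo $(( $1 * 60 * 60 * 1000 ))
}

hours_to_ms 24   # 86400000, the default 24-hour retention
hours_to_ms 6    # 21600000, a shorter 6-hour retention
```

The printed number is the value to set for KAFKA_LOG_RETENTION_MS in the docker-compose.yml file.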

Increase the Maximum Number of Open Files

The maximum number of open files must be set to 1000000 on the Linux system. To check the current system-wide limit, run the following command: cat /proc/sys/fs/file-max. If the value is lower than 1000000, perform the following steps:

To edit the sysctl.conf file, run the following command in a shell:

vi /etc/sysctl.conf

In the sysctl.conf file, update the configuration directive as follows:

fs.file-max=1000000

After saving and closing the file, run the following commands to apply the change and then open the limits.conf file:

    sysctl -p
    vi /etc/security/limits.conf

To set the maximum number of processes and open files, update the values for nproc and nofile in the /etc/security/limits.conf file as follows:

    * soft nproc 1000000
    * hard nproc 1000000
    * soft nofile 1000000
    * hard nofile 1000000

Search for configuration files in the /etc/security/limits.d directory. If that directory contains configuration files that set the number of open files, they may override your new settings. Update those files as well to retain the changes.

To verify that the maximum number of open files is set to 1000000, exit and then re-open the shell, and run the command: ulimit -a

If ulimit still shows 4096 or some other value, replicate the change in the /etc/security/limits.d/20-nproc.conf file as well.
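The verification steps above can be sketched as a small script that compares the current values against the required 1000000. The function names `meets_limit` and `report_limit` are hypothetical, and the script only reads values; it does not change any setting.

```shell
#!/usr/bin/env bash
# Illustrative check; the function names are assumptions.
REQUIRED=1000000

# Succeed if the observed value ($1) is "unlimited" or at least the required minimum ($2).
meets_limit() {
  [ "$1" = "unlimited" ] || [ "$1" -ge "$2" ]
}

# Print a one-line report for a named limit ($1) with its observed value ($2).
report_limit() {
  if meets_limit "$2" "$REQUIRED"; then
    echo "$1: $2 (ok)"
  else
    echo "$1: $2 (below $REQUIRED)"
  fi
}

# System-wide maximum number of open files.
if [ -r /proc/sys/fs/file-max ]; then
  report_limit "fs.file-max" "$(cat /proc/sys/fs/file-max)"
fi

# Per-process open file limit for the current shell session.
report_limit "ulimit -n" "$(ulimit -n)"
```

Run the script in a new shell session after making the changes, because limits from limits.conf apply only to sessions started afterward.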