- Advanced Analytics

- Understand the Basics of Advanced Analytics

- Deploy Exabeam Products

- Considerations for Installing and Deploying Exabeam Products

- Things You Need to Know About Deploying Advanced Analytics

- Pre-Check Scripts for an On-Premises or Cloud Deployment

- Install Exabeam Software

- Upgrade an Exabeam Product

- Add Ingestion (LIME) Nodes to an Existing Advanced Analytics Cluster

- Apply Pre-approved CentOS Updates

- Configure Advanced Analytics

- Set Up Admin Operations

- Access Exabeam Advanced Analytics

- A. Supported Browsers

- Set Up Log Management

- Set Up Training & Scoring

- Set Up Log Feeds

- Draft/Published Modes for Log Feeds

- Advanced Analytics Transaction Log and Configuration Backup and Restore

- Configure Advanced Analytics System Activity Notifications

- Exabeam Licenses

- Exabeam Cluster Authentication Token

- Set Up Authentication and Access Control

- What Are Accounts & Groups?

- What Are Assets & Networks?

- Common Access Card (CAC) Authentication

- Role-Based Access Control

- Out-of-the-Box Roles

- Set Up User Management

- Manage Users

- Set Up LDAP Server

- Set Up LDAP Authentication

- Third-Party Identity Provider Configuration

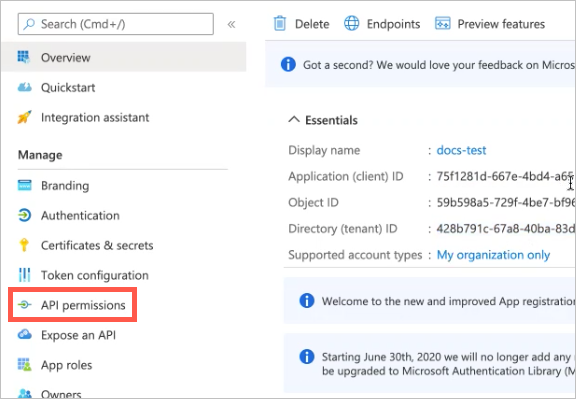

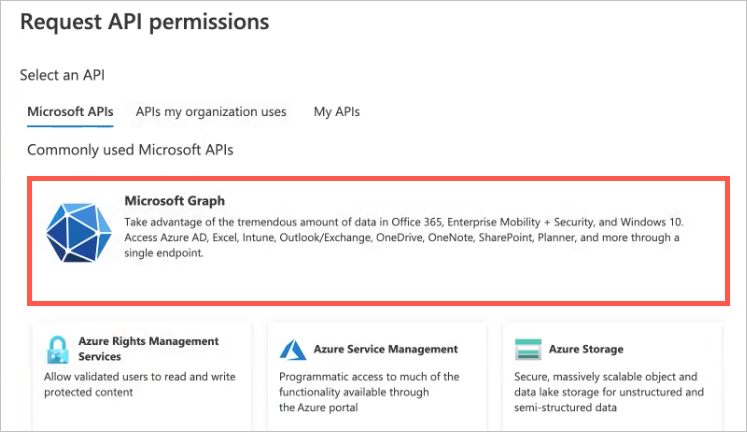

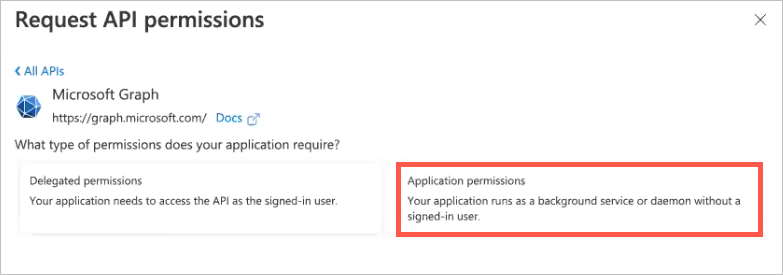

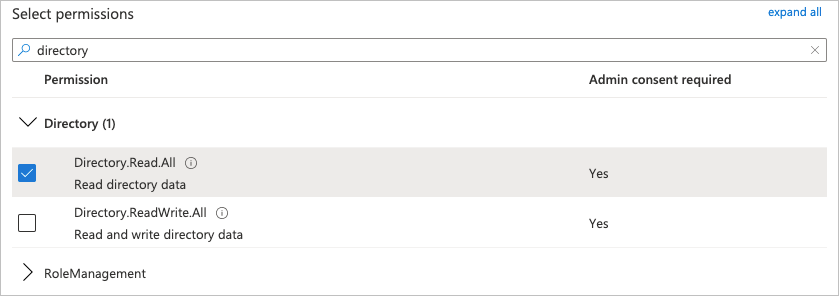

- Azure AD Context Enrichment

- Set Up Context Management

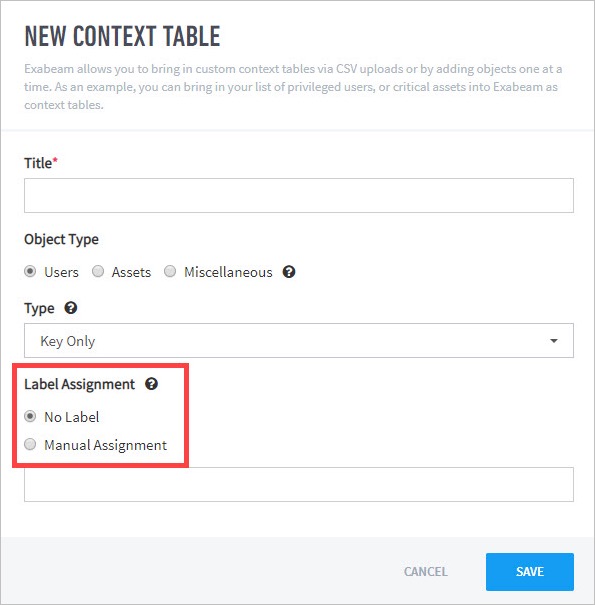

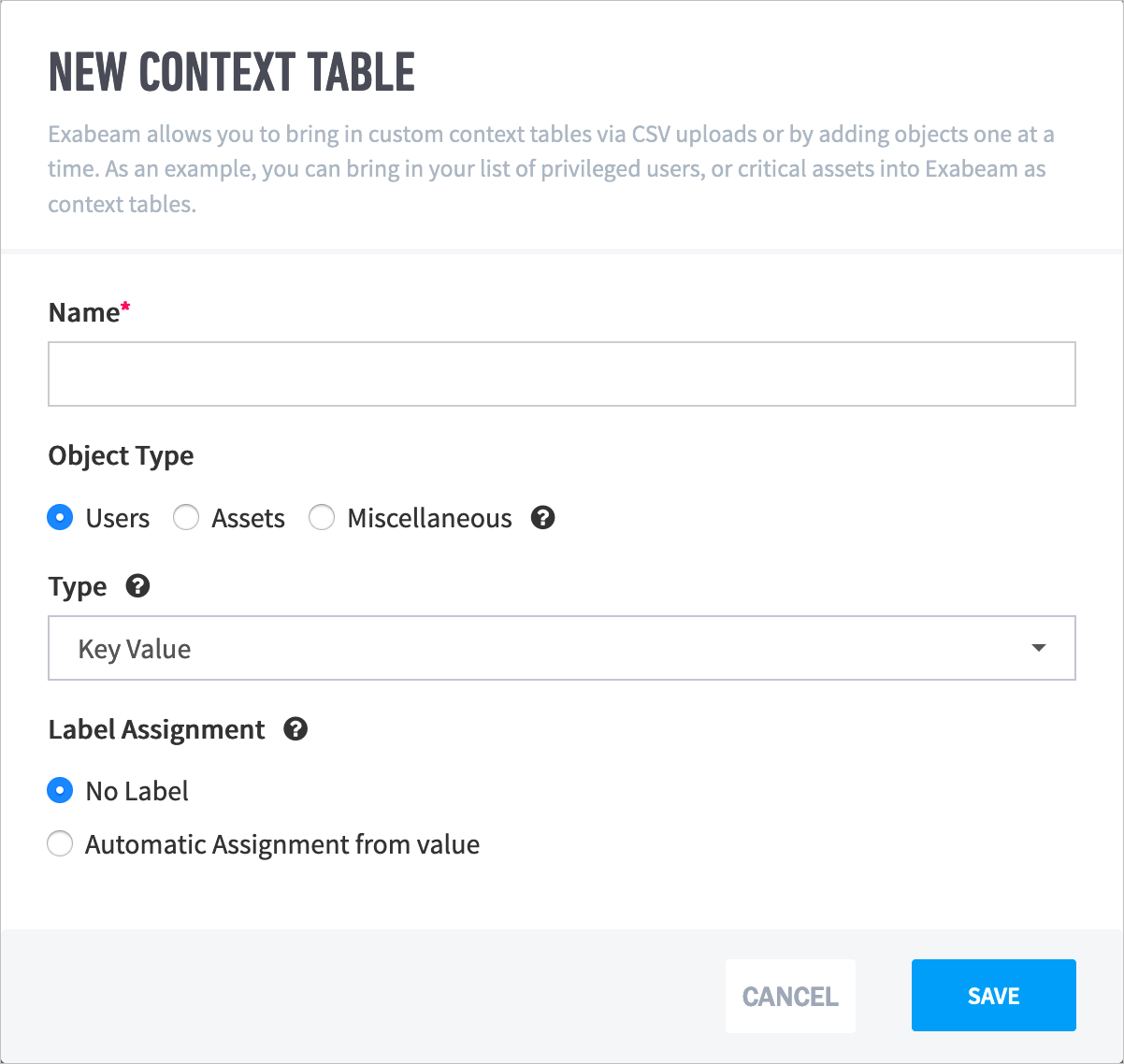

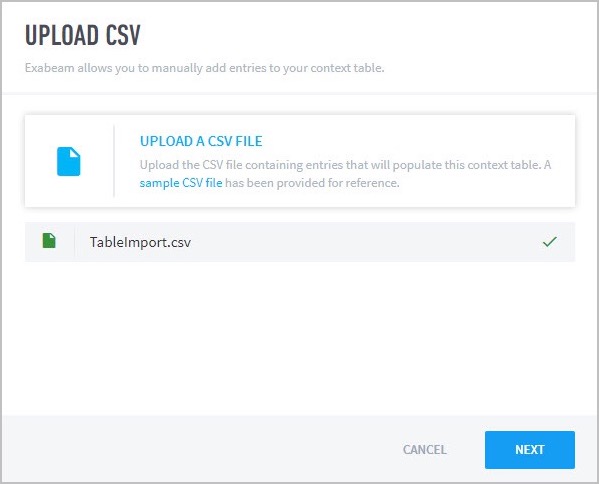

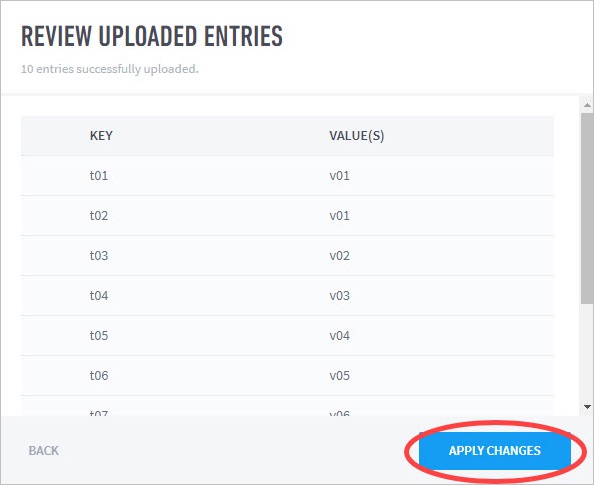

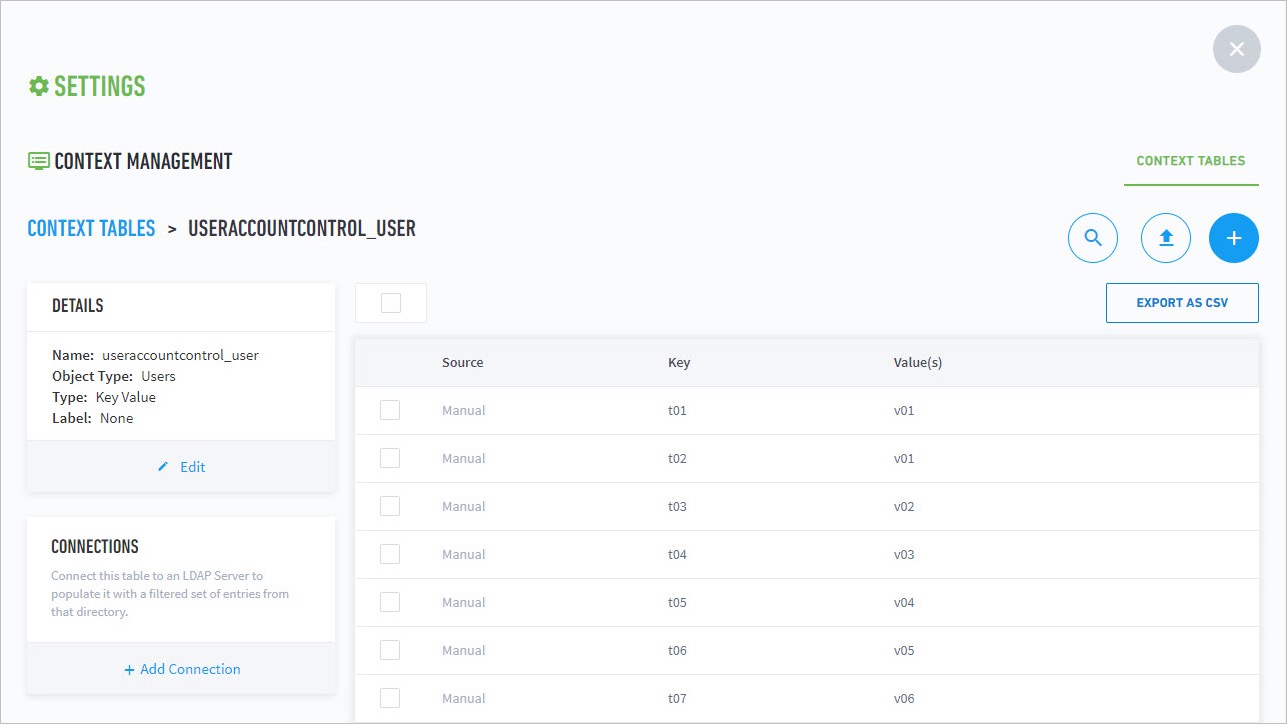

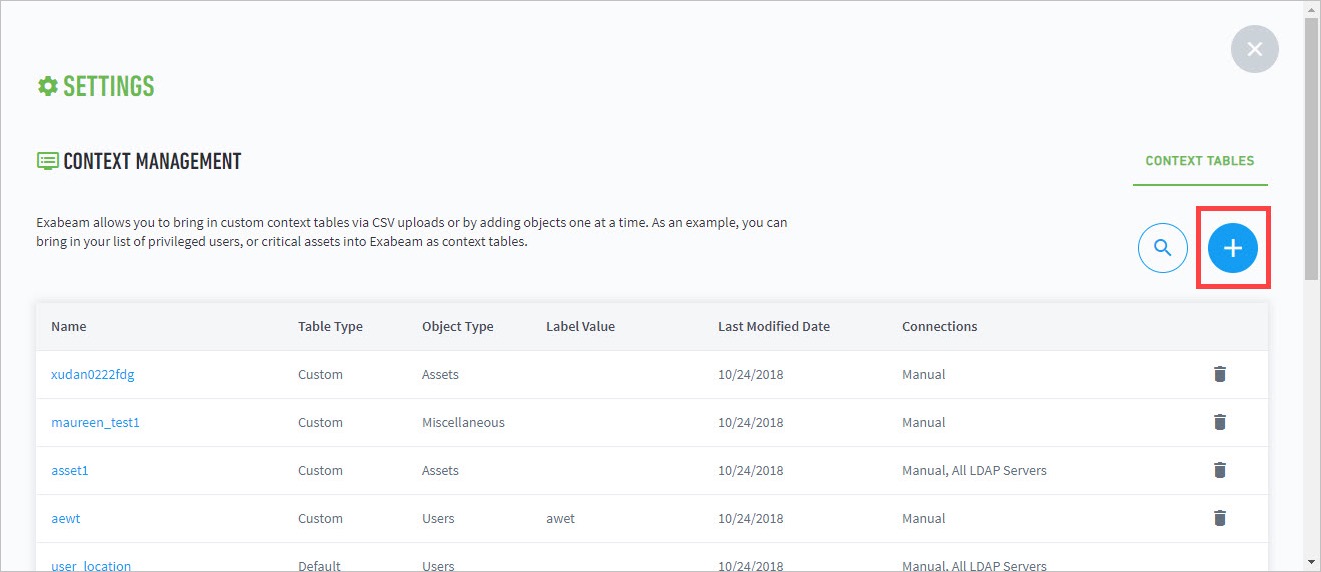

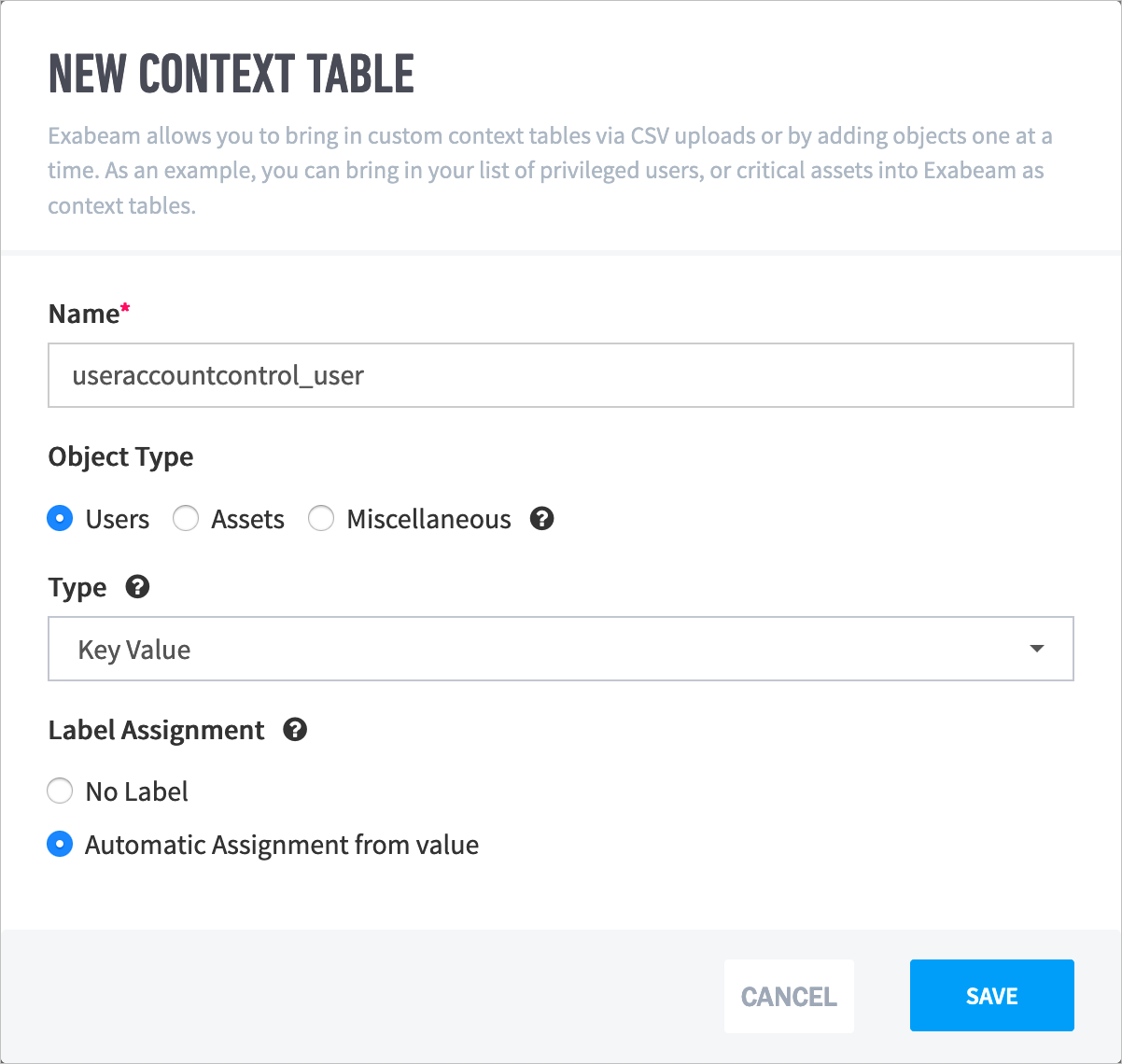

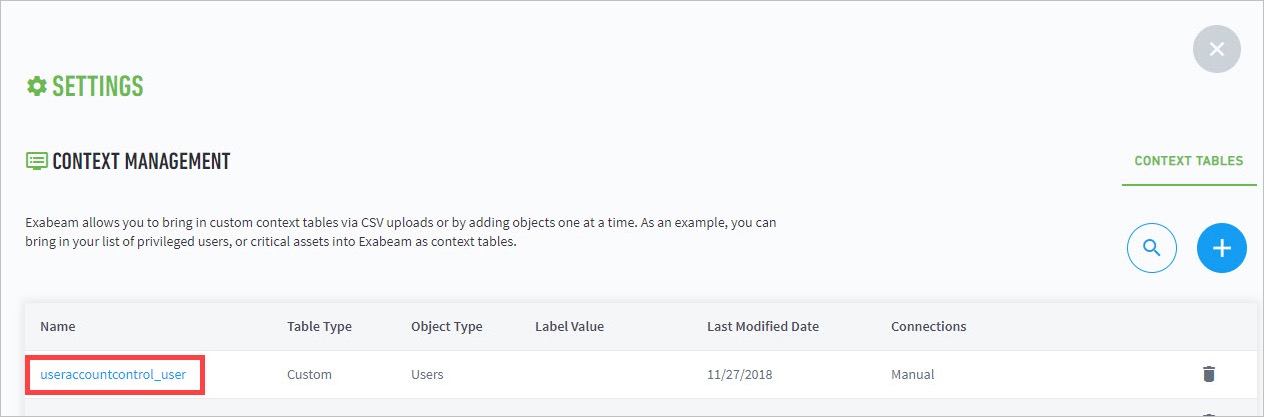

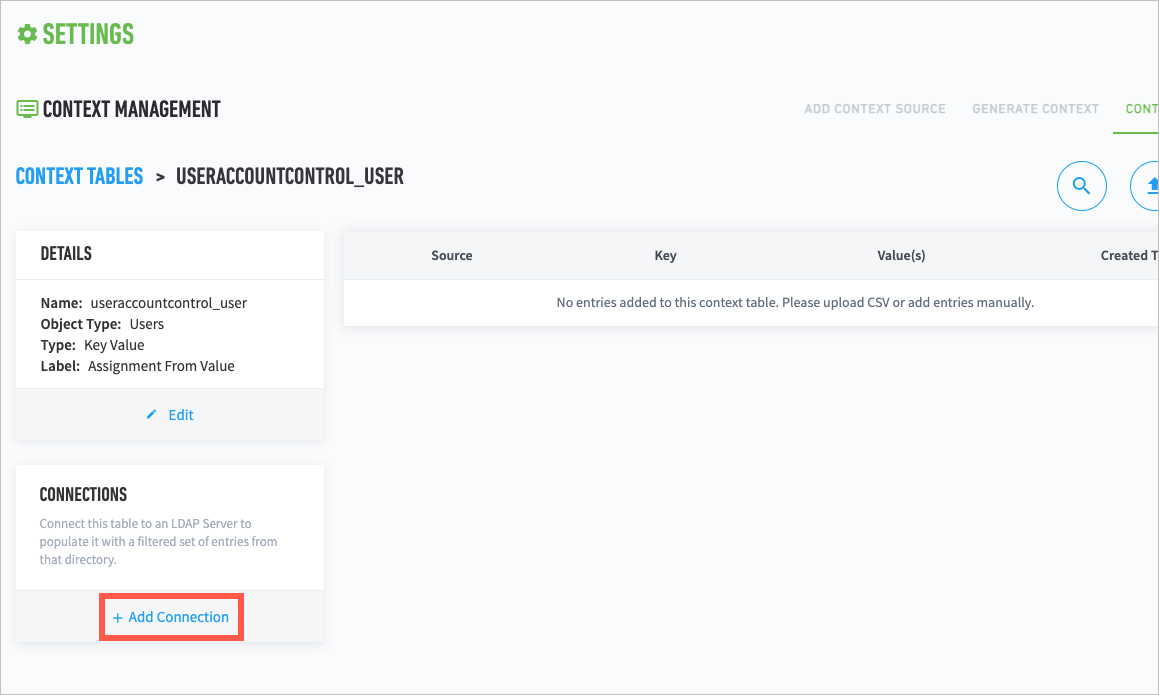

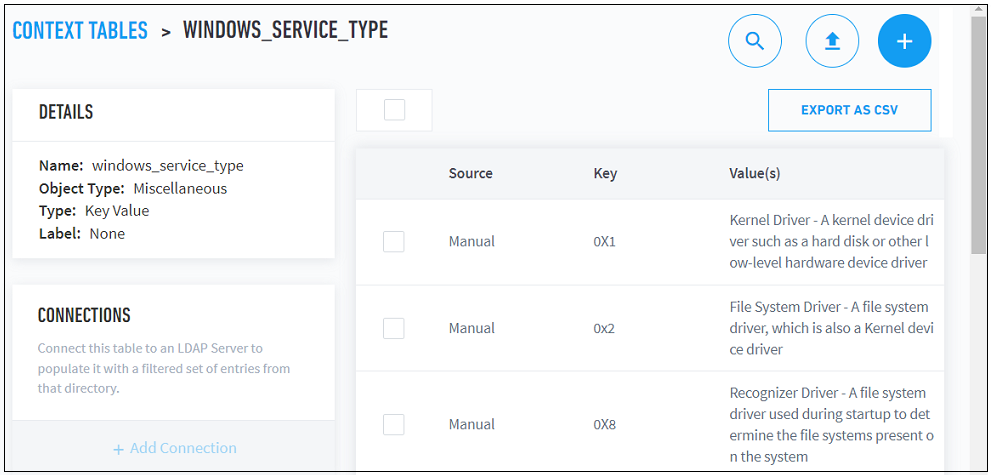

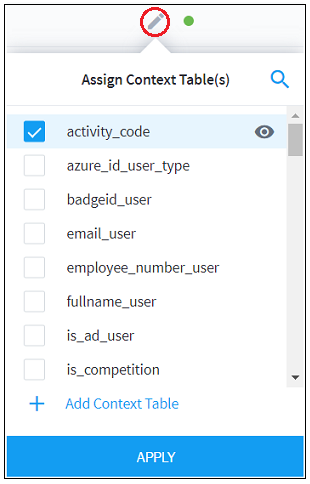

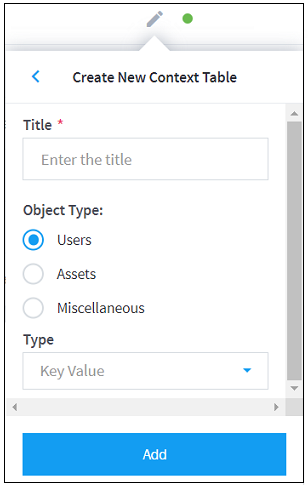

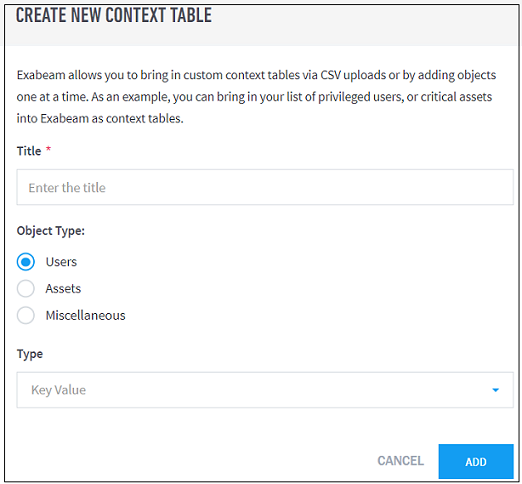

- Custom Context Tables

- How Audit Logging Works

- Starting the Analytics Engine

- Additional Configurations

- Configure Static Mappings of Hosts to/from IP Addresses

- Associate Machine Oriented Log Events to User Sessions

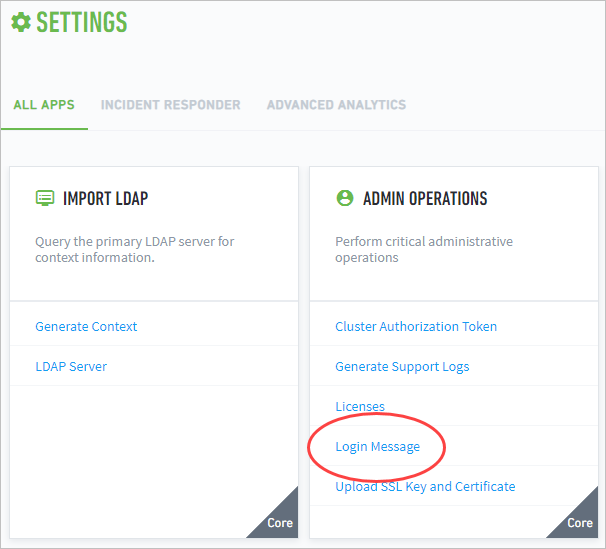

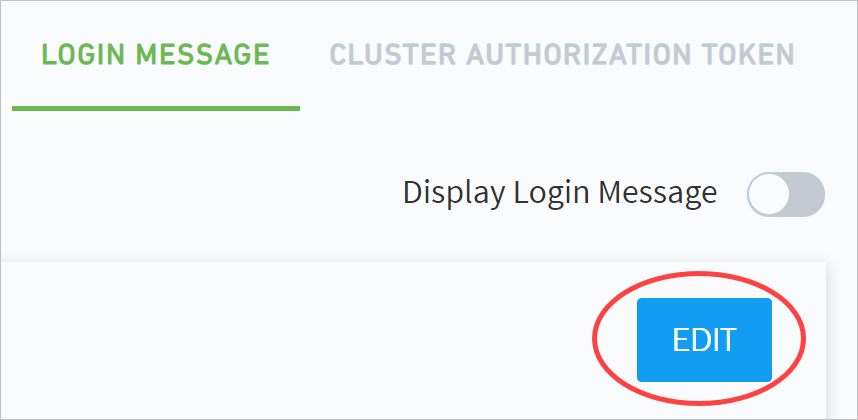

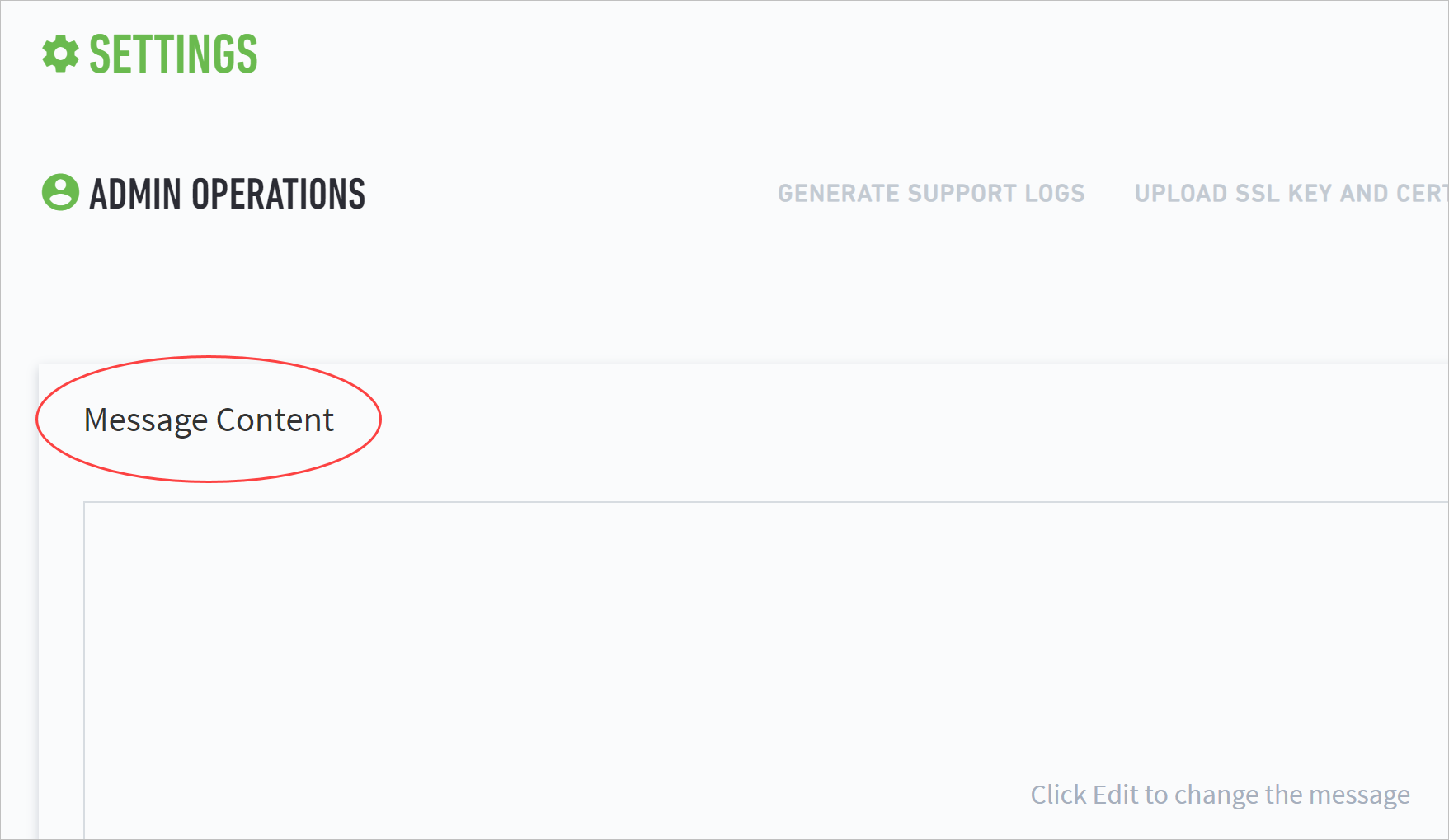

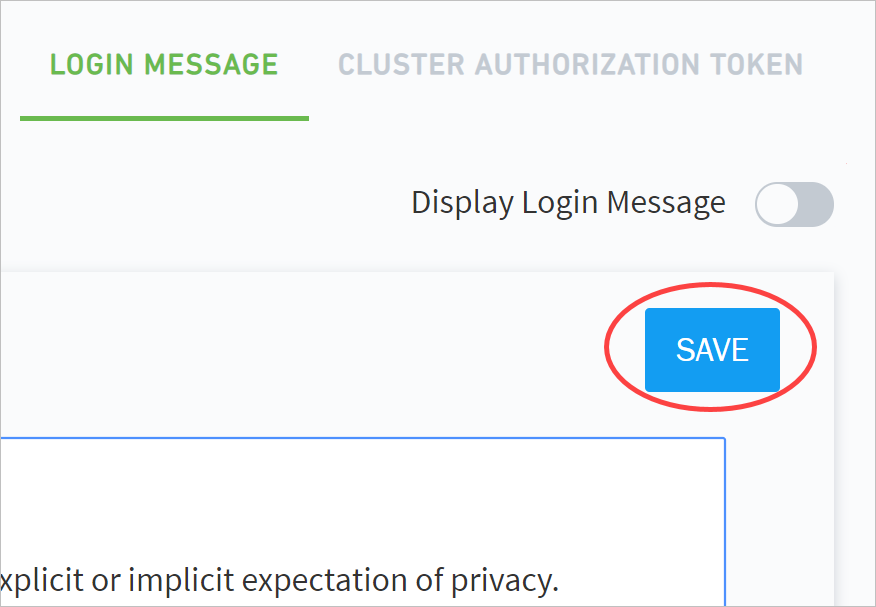

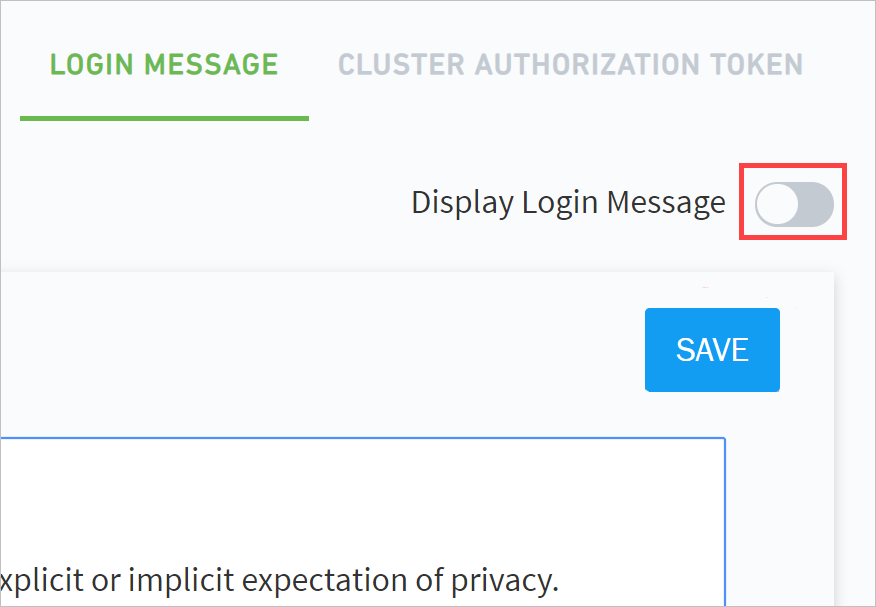

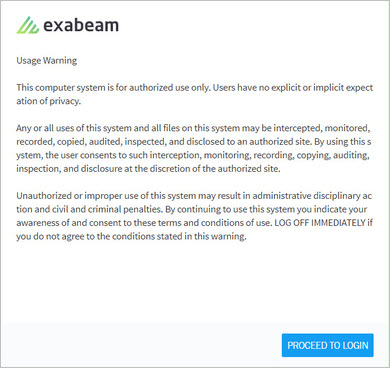

- Display a Custom Login Message

- Configure Threat Hunter Maximum Search Result Limit

- Change Date and Time Formats

- Set Up Machine Learning Algorithms (Beta)

- Detect Phishing

- Restart the Analytics Engine

- Restart Log Ingestion and Messaging Engine (LIME)

- Custom Configuration Validation

- Advanced Analytics Transaction Log and Configuration Backup and Restore

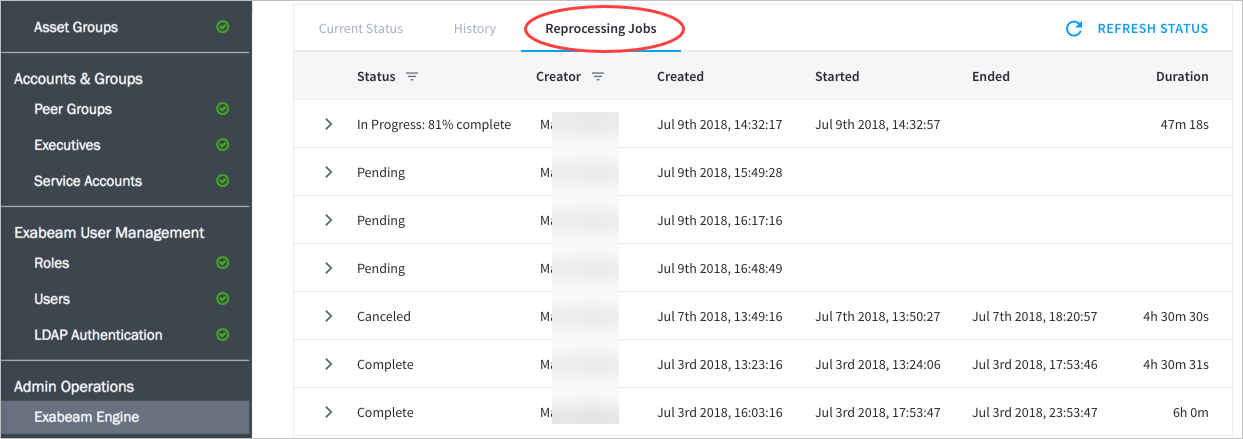

- Reprocess Jobs

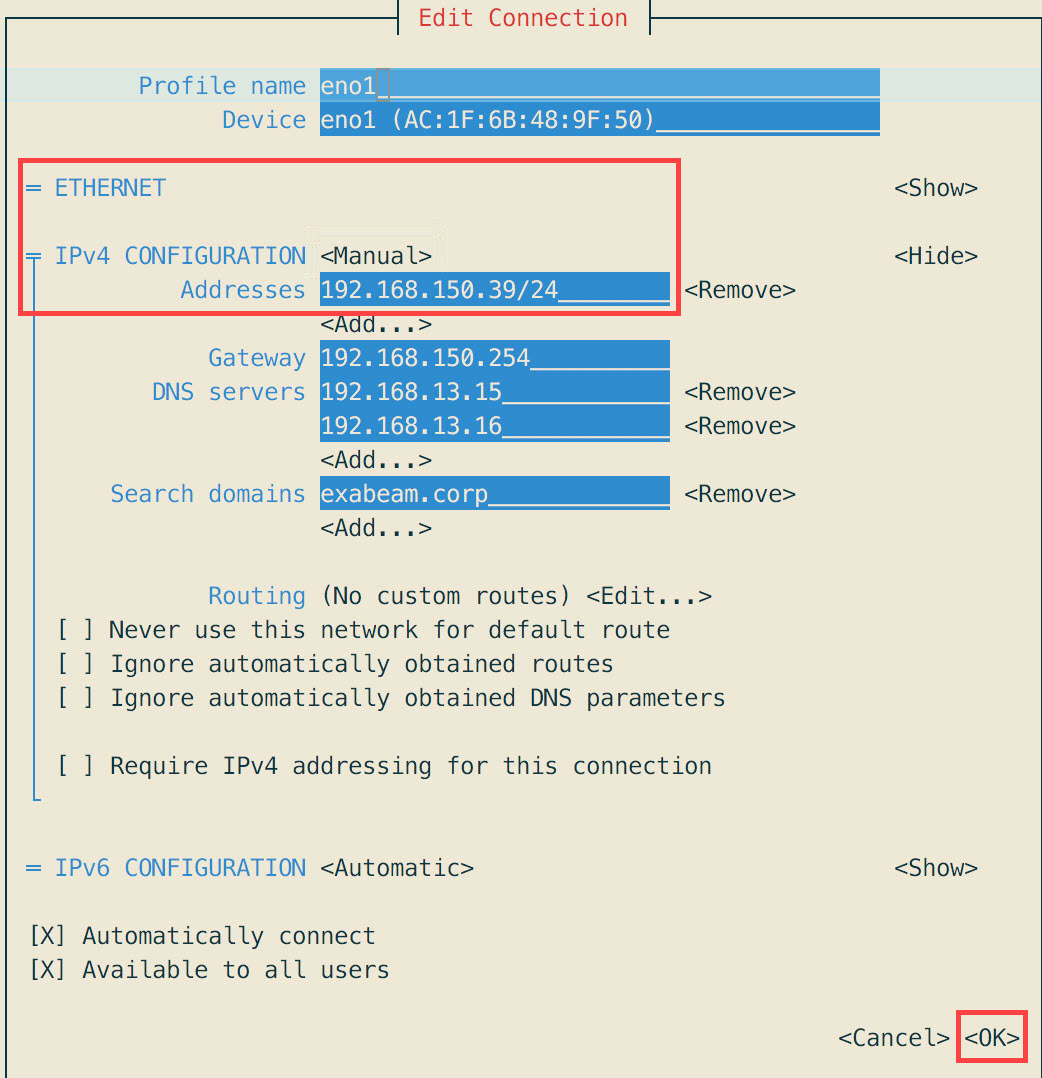

- Re-Assign to a New IP (Appliance Only)

- Hadoop Distributed File System (HDFS) Namenode Storage Redundancy

- User Engagement Analytics Policy

- Configure Settings to Search for Data Lake Logs in Advanced Analytics

- Enable Settings to Detect Email Sent to Personal Accounts

- Configure Smart Timeline™ to Display More Accurate Times for When Rules Triggered

- Configure Rules

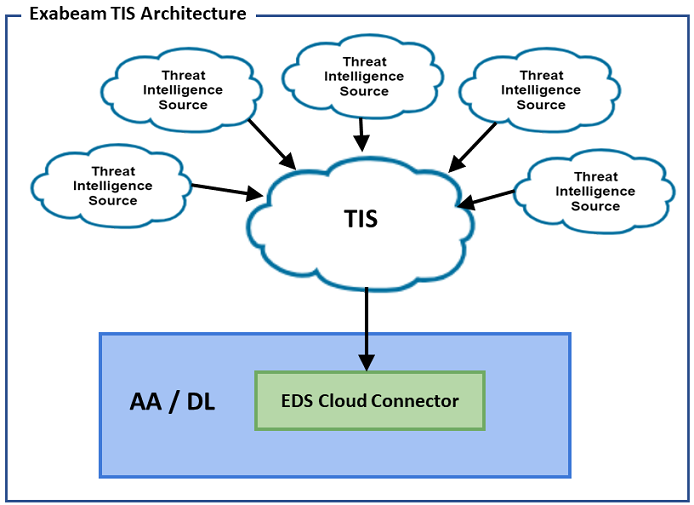

- Exabeam Threat Intelligence Service

- Threat Intelligence Service Prerequisites

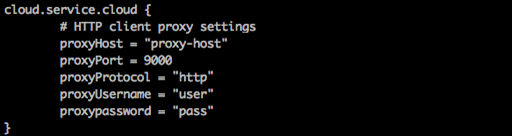

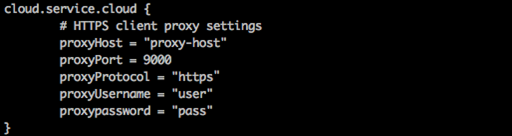

- Connect to Threat Intelligence Service through a Proxy

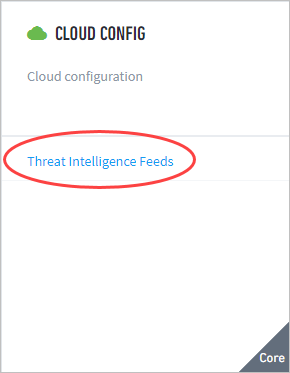

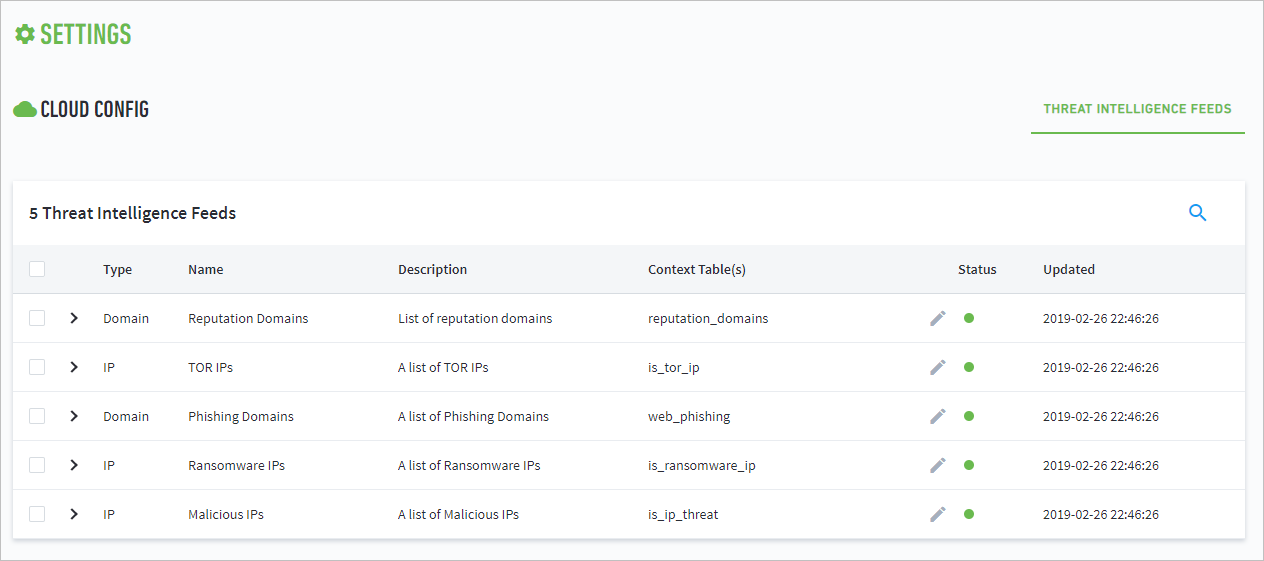

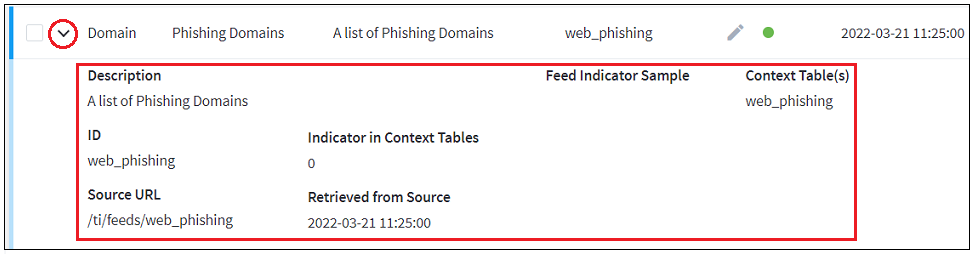

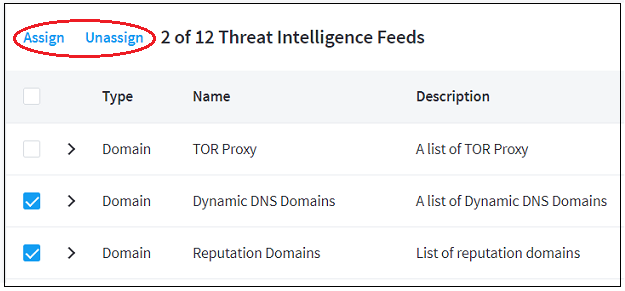

- View Threat Intelligence Feeds

- Threat Intelligence Context Tables

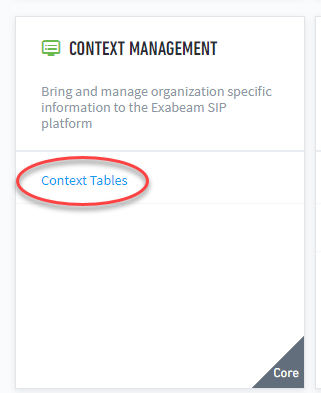

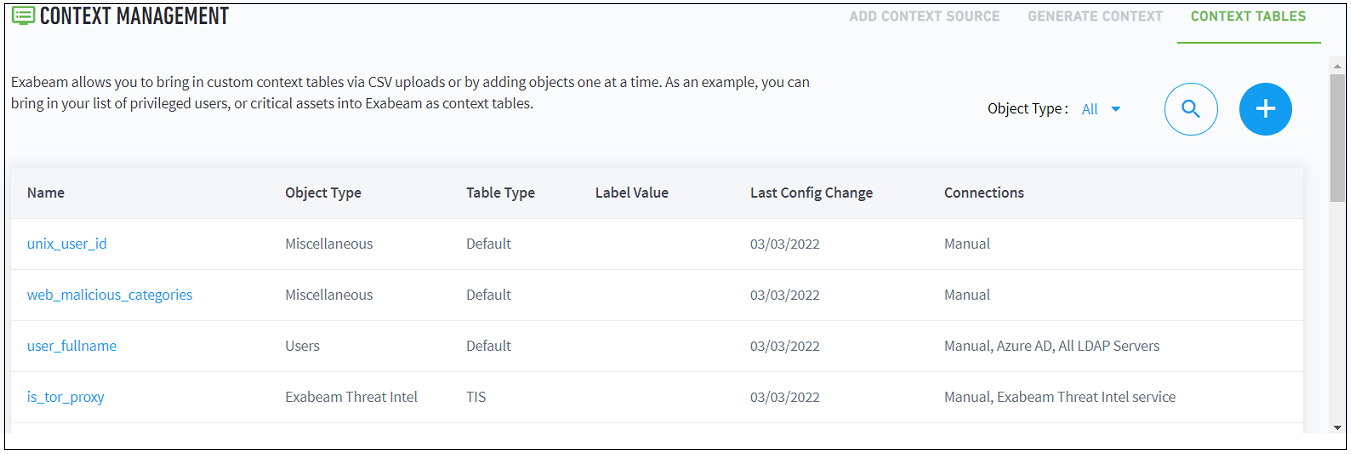

- View Threat Intelligence Context Tables

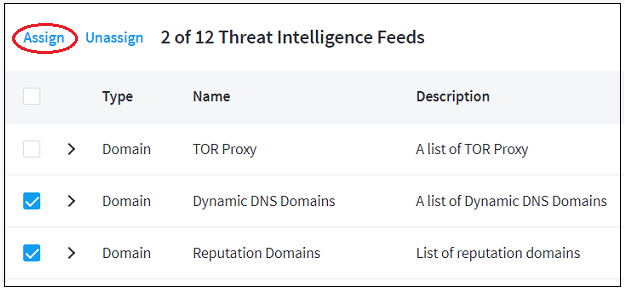

- Assign a Threat Intelligence Feed to a New Context Table

- Create a New Context Table from a Threat Intelligence Feed

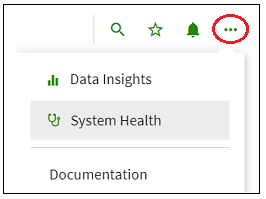

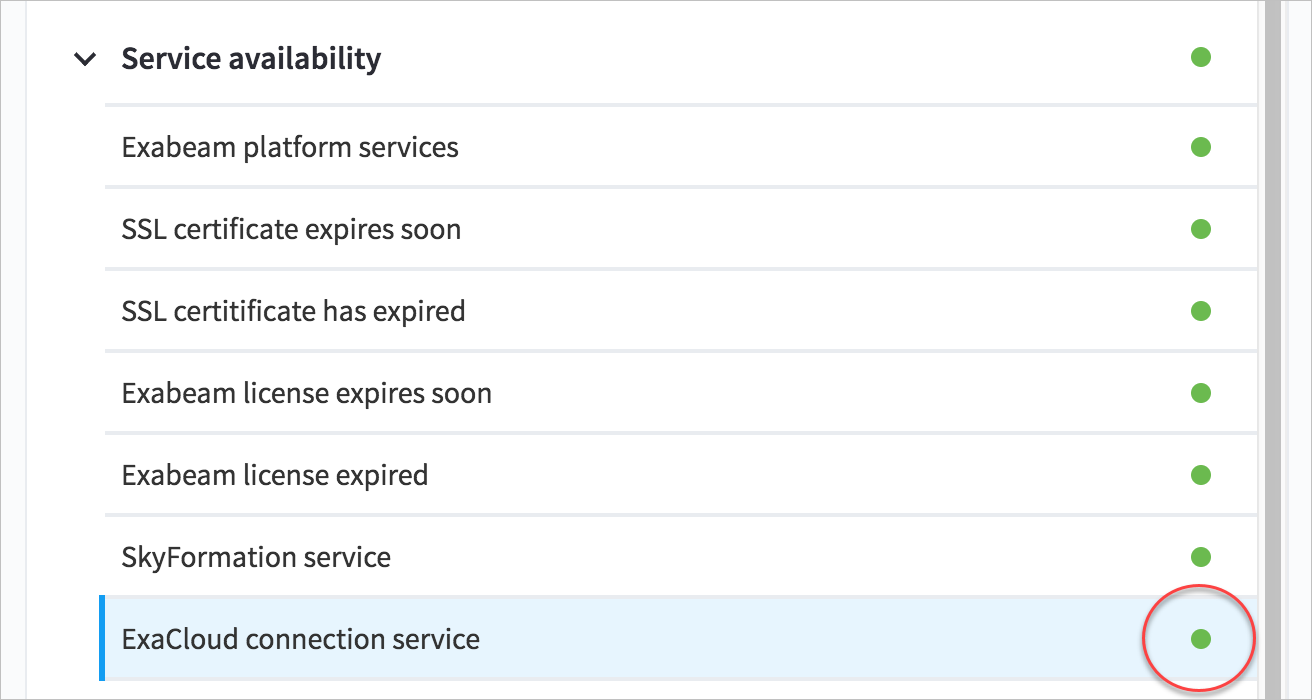

- Check ExaCloud Connector Service Health Status

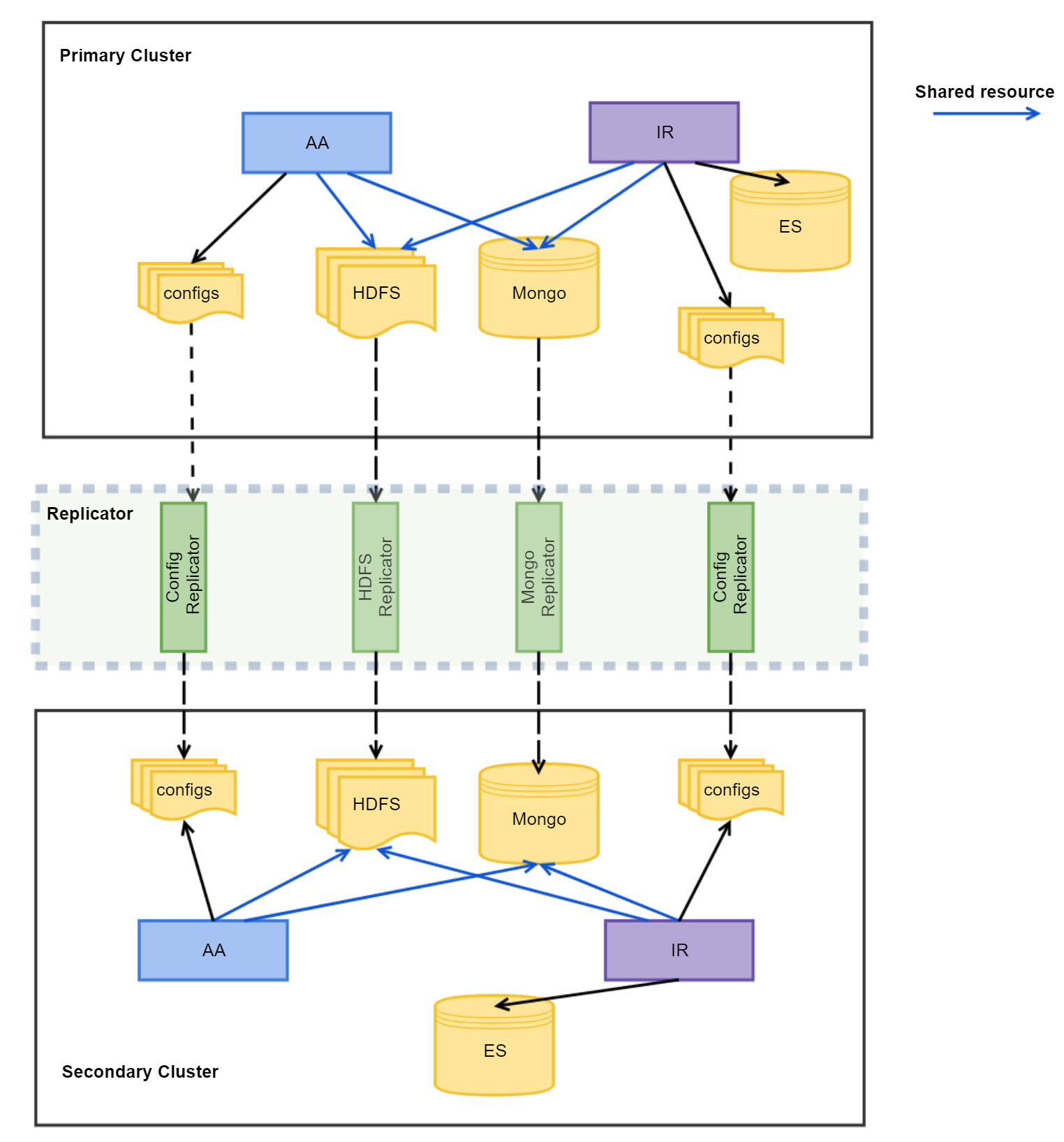

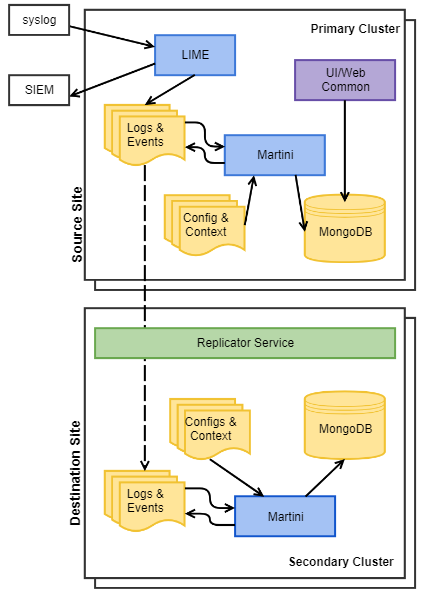

- Disaster Recovery

- Manage Security Content in Advanced Analytics

- Exabeam Hardening

- Set Up Admin Operations

- Health Status Page

- Troubleshoot Advanced Analytics Data Ingestion Issues

- Generate a Support File

- View Version Information

- Syslog Notifications Key-Value Pair Definitions

Configure Advanced Analytics

This section includes everything administrators need to know for setting up and operating Advanced Analytics.

Set Up Admin Operations

Everything administrators need to know about setting up and operating Advanced Analytics.

Access Exabeam Advanced Analytics

Navigate, log in, and authenticate into your Advanced Analytics environment.

If you have a hardware or virtual deployment of Advanced Analytics, enter the IP address of the server and port number 8484:

https://<IP_address>:8484 or https://<IP_address>:8484/uba

If you have the SaaS deployment of Advanced Analytics, navigate to https://[company].aa.exabeam.com.

Use your organization credentials to log into your Advanced Analytics product.

These login credentials were established when Advanced Analytics was installed. You can authenticate into Advanced Analytics using LDAP, SAML, CAC, or SSO through Okta. To configure and enable these authentication types, contact your Technical Account Manager.

If you work for a federal agency, you can authenticate into Advanced Analytics using Common Access Card (CAC). United States government personnel use the CAC to access physical spaces, computer networks, and systems. You have readers on your workstations that read your Personal Identity Verification (PIV) and authenticate you into various network resources.

You can authenticate into Advanced Analytics using CAC combined with another authentication mechanism. To configure and enable other authentication mechanisms, contact your Technical Account Manager.

Set Up Log Management

Note

The log management setup description in this section applies to Advanced Analytics versions i60–i62.

Large enterprise environments generally include many server, network, and security technologies that can provide useful activity logs to trace who is doing what and where. Log ingestion can be coupled with your Data Lake data repository, which can forward syslogs to Advanced Analytics. (See Data Lake Administration Guide > Syslog Forwarding to Advanced Analytics.)

Use the Log Ingestion Settings page to configure the following log sources:

Note

The Syslog destination is your site collector IP/FQDN, and only TLS connections are accepted on port TCP/515.

View Insights About Syslog Ingested Logs

Advanced Analytics has the ability to test the data pipeline of logs coming in via Syslog.

Note

This option is only available if the Enable Syslog Ingestion button is toggled on.

Click the Syslog Stats button to view the number of logs fetched, the number of events parsed, and the number of events created. A warning will also appear that lists any event types that were not created within the Syslog feed that was analyzed.

In this step you can also select Options to limit the time range and number of log events tested.

Ingest Logs from Google Cloud Pub/Sub into Advanced Analytics

To create events from Google Cloud Pub/Sub topics, configure Google Pub/Sub as an Advanced Analytics log source.

Create a Google Cloud service account with Pub/Sub Publisher and Pub/Sub Subscriber permissions.

Create and download a JSON-type service account key. You use this JSON file later.

Create a Google Cloud Pub/Sub topic with Google-managed key encryption.

For the Google Cloud Pub/Sub topic you created, create a subscription with specific settings:

Delivery type – Select Pull.

Subscription expiration – Select Never expire.

Retry policy – Select Retry immediately.

Save the subscription ID to use later.

In the navigation bar, click the menu, select Settings, then select Analytics.

Under Log Management, select Log Ingestion Settings.

Click ADD, then from the Source Type list, select Google Cloud Pub/Sub.

Enter information about your Google Cloud Pub/Sub topic:

Description – Describe the topic, what kinds of logs you're ingesting, or any other information helpful for you to identify this as a log source.

Service key – Upload the Google Cloud service account key JSON file you downloaded.

Subscriptions

Subscription name – Enter the Google Cloud Pub/Sub subscription ID you created.

Description – Describe the subscription, the Google Cloud Pub/Sub topic it was created for, or what messages it receives.

To verify the connection to your Google Cloud Pub/Sub topic, click TEST CONNECTION. If you see an error, verify the information you entered then retest the connection.

Click SAVE.

Restart Log Ingestion and Messaging Engine (LIME).

To ingest specific logs from your Google Cloud Pub/Sub topic, configure a log feed.
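Before you upload the service account key in the Service key field, it can help to confirm that the downloaded file really is a service account key. The sketch below is a rough check only. It assumes the file follows Google's standard JSON key layout, with a `"type": "service_account"` entry and `client_email` and `private_key` fields. The function name `check_service_key` is hypothetical and is not part of any Exabeam or Google tool.

```shell
#!/bin/sh
# Rough sanity check for a Google Cloud service account key file.
# check_service_key is a hypothetical helper name used for illustration.
check_service_key() {
    key_file="$1"
    # The file must exist and be readable.
    [ -r "$key_file" ] || { echo "cannot read $key_file"; return 1; }
    # A service account key declares its type explicitly.
    grep -Eq '"type"[[:space:]]*:[[:space:]]*"service_account"' "$key_file" \
        || { echo "not a service account key"; return 1; }
    # These fields are needed to authenticate as the service account.
    for field in client_email private_key; do
        grep -q "\"$field\"" "$key_file" \
            || { echo "missing field: $field"; return 1; }
    done
    echo "ok"
}
```

For example, `check_service_key key.json` prints `ok` for a well-formed key, where `key.json` stands in for whatever name you gave the downloaded file. The check does not verify that the key is still valid or has the Pub/Sub permissions; TEST CONNECTION remains the authoritative check.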

Set Up Training & Scoring

To build a baseline, Advanced Analytics extensively profiles people, asset usage, and sessions. For example, in a typical deployment, Advanced Analytics begins by examining 60-90 days of an organization's logs. After the initial baseline analysis is done, Advanced Analytics begins assigning scores to each session based on the number and type of anomalies in the session.

Set Up Log Feeds

Note

The log feed setup information in this section applies to Advanced Analytics versions i60–i62.

Advanced Analytics can be configured to fetch log data from a SIEM. Administrators can configure log feeds that can be queried during ingestion. Exabeam provides out-of-the-box queries for various log sources; you can use them as they are, edit them, or apply your own.

Once the log feed is set up, you can perform a test query that fetches a small sample of logs from the log management system. You can also parse the sample logs to make sure that Advanced Analytics is able to normalize the logs. If the system is unable to parse the logs, reach out to Customer Success and the Exabeam team will create a parser for those logs.

Draft/Published Modes for Log Feeds

Note

The information in this section applies to Advanced Analytics versions i60–i62.

There are two modes for log feeds added under Settings > Log Feeds: draft and published. When you create a new log feed and complete the workflow, you are asked whether you would like to publish the feed. Publishing the feed lets the Analytics Processing Engine know that the respective feed is ready for consumption.

If you choose to not publish the feed then it will be left in draft mode and will not be picked up by the processing engine. You can always publish a feed that is in draft mode at a later time.

This allows you to add multiple feeds and test queries without worrying about the feed being picked up by the processing engine or having the processing engine encounter errors when a draft feed is deleted.

Once a feed is in published mode it will be picked up by the processing engine at the top of the hour.
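To estimate how long a newly published feed waits before the processing engine picks it up, you can compute the time remaining until the next full hour. This is a minimal sketch: `seconds_until_top_of_hour` is a hypothetical helper, and it assumes the engine's hour boundaries line up with whole hours of Unix time, which holds for time zones with whole-hour offsets.

```shell
#!/bin/sh
# seconds_until_top_of_hour is a hypothetical helper name.
# Takes a Unix timestamp and prints the seconds remaining until the next full hour.
# At an exact hour boundary it prints 3600 (the following hour).
seconds_until_top_of_hour() {
    now="$1"
    echo $(( 3600 - now % 3600 ))
}
```

A typical call is `seconds_until_top_of_hour "$(date +%s)"`.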

Advanced Analytics Transaction Log and Configuration Backup and Restore

Hardware and Virtual Deployments Only

Rebuilding a failed worker node host (for example, after a disk failure on an on-premises appliance) or shifting a worker node host to new resources takes significant planning. One of the more complex and error-prone steps is migrating the configurations. Exabeam provides a backup mechanism for layered data format (LDF) transaction log and configuration files to minimize the risk of error. To use the configuration backup and restore feature, you must have:

An active Advanced Analytics worker node

Cluster with two or more worker nodes

Read and write permissions for the credentials you will configure to access the base path at the storage destination

A scheduled task in Advanced Analytics to run backup to the storage destination

Note

To rebuild after a cluster failure, use cloud-based backups. To rebuild nodes after disk failures, back up files to a worker node or a cloud-based destination.

Warning

Only worker nodes can be backed up and restored. Master nodes cannot.

If you want to save the generated backup files to your first worker node, you do not need to configure an external storage destination. A worker node destination addresses possible disk failure at the master node appliance. This is not recommended as the sole method for disaster recovery.

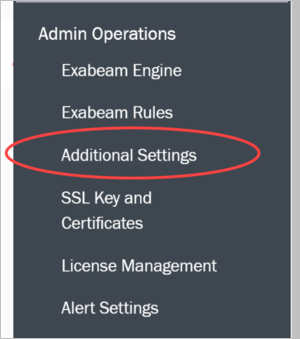

Go to Settings > Additional Settings > Admin Operations > External Storage.

Click Add.

Fill all fields and then click TEST CONNECTION to verify connection credentials.

Once a working connection is confirmed Successful, click SAVE.

Once you have a verified destination to store your files, configure and schedule a recurring backup.

Go to Settings > Additional Settings > Backup & Restore > Backups.

Click CREATE BACKUP to generate a new schedule record. If you are changing the destination, click the edit icon on the displayed record.

Fill all fields and then click SAVE to apply the configuration.

Warning

Time is given in UTC.

A successful backup will place a backup.exa file at /opt/exabeam/data/backup at the worker node. In the case that the scheduled backup fails to write files to the destination, confirm there is enough space at the destination to hold the files and that the exabeam-web-common service is running. (If exabeam-web-common is not running, review its application.log for hints as to the possible cause.)

To restore a node host using files stored off-node, you must have:

administrator privileges to run tasks at the host

SSH access to the host

free space at the restoration partition at the master node host that is greater than 10 times the size of the backup.exa backup file
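The free-space prerequisite above can be checked before you copy anything. The following is a minimal sketch rather than an Exabeam tool: `has_restore_space` is a hypothetical helper that compares two sizes in KiB, and the paths in the comments are placeholders you would replace with your own.

```shell
#!/bin/sh
# has_restore_space is a hypothetical helper name.
# Succeeds when free space is greater than 10 times the backup size (both in KiB).
has_restore_space() {
    backup_kb="$1"
    free_kb="$2"
    [ "$free_kb" -gt $(( backup_kb * 10 )) ]
}

# Example wiring on a real host (paths are placeholders):
#   backup_kb=$(du -k /path/to/backup.exa | cut -f1)
#   free_kb=$(df -Pk /path/to/restore_dir | awk 'NR==2 {print $4}')
#   has_restore_space "$backup_kb" "$free_kb" && echo "enough space"
```

Note that `du -k` reports disk usage rather than the exact file length, so treat the result as an approximation and leave some margin.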

Copy the backup file, backup.exa, from the backup location to the restoration partition. This should be a temporary work directory (<restore_path>) at the master node.

Run the following to unpack the EXA file and repopulate files.

sudo /opt/exabeam/bin/tools/exa-restore <restore_path>/backup.exa

exa-restore will stop all services, restore files, and then start all services. Monitor the console output for error messages. See Troubleshooting a Restoration if exa-restore is unable to run to completion.

Remove backup.exa and the temporary work directory when restoration is completed.

If restoration does not succeed, try the following solutions. If the scenarios listed do not match your situation,

Not Enough Disk Space

Select a different partition to restore the configuration files to and try to restore again. Otherwise, review files stored in the target destination and offload files to create more space.

Restore Script Cannot Stop All Services

Use the following to manually stop all services:

source /opt/exabeam/bin/shell-environment.bash && everything-stop

Restore Script Cannot Start All Services

Use the following to manually start all services:

source /opt/exabeam/bin/shell-environment.bash && everything-start

Restore Script Could Not Restore a Particular File

Use tar to manually restore the file:

# Determine the task ID and base directory (<base_dir>) for the file restoration that failed.
# Go to the <base_dir>/<task_id> directory and apply the following command:
sudo tar -xzpvf backup.tar backup.tgz -C <base_dir>
# Manually start all services.
source /opt/exabeam/bin/shell-environment.bash && everything-start

Configure Advanced Analytics System Activity Notifications

Configure Advanced Analytics to send notifications to your log repository or email about system health, notable sessions, anomalies, and other important system information.

Depending on the format in which you want information about your system, send notifications to your log repository or email.

Advanced Analytics sends notifications to your log repository in a structured data format using Syslog. These notifications are formatted so machines, like your log repository, can easily understand them.

Advanced Analytics sends notifications to your email in a format that's easier to read for humans.

Send Notifications to your Log Repository, Log Ticketing System, or SIEM

Send notifications to your log repository, log ticketing system, or SIEM using the Syslog protocol.

In the navigation bar, click the menu, select Settings, then select Core.

Under NOTIFICATIONS, select Setup Notifications.

Click add, then select Syslog Notification.

Configure your notification settings:

IP / Hostname – Enter the IP or host name of your Syslog server.

Port – Enter the port your Syslog server uses.

Protocol – Select the network protocol your Syslog server uses to send messages: TCP, SSL_TCP, or UDP.

Syslog Security Level – Assign a severity level to the notification:

Informational – Normal operational events, no action needed.

Debug – Useful information for debugging, sent after an error occurs.

Error – An error has occurred and must be resolved.

Warning – Events that will lead to an error if you don't take action.

Emergency – Your system is unavailable and unusable.

Alert – Events that should be corrected immediately.

Notice – An unusual event has occurred.

Critical – Some event, like a hard device error, has occurred and your system is in critical condition.

Notifications by Product – Select the events for which you want to be notified:

System Health – All system health alerts for Advanced Analytics.

Notable Sessions – A user or asset has reached a risk threshold and become notable. This notification describes which rule was triggered and contains any relevant event details, which are unique to each event type. If an event detail isn't available, it isn't included in the notification.

Anomalies – A rule has been triggered.

AA/CM/OAR Audit – An Exabeam user does something in Advanced Analytics, Case Manager, or Incident Responder that's important to know when auditing their activity history; for example, when someone modifies rule behavior, changes log sources, or changes user roles and permissions.

Job Start – Data processing engines, like Log Ingestion and Messaging Engine (LIME) or the Analytics Engine, have started processing a log.

Job End – Data processing engines, like LIME or the Analytics Engine, have stopped processing a log.

Job Failure – Data processing engines, like LIME or the Analytics Engine, have failed to process a log.

Click ADD NOTIFICATION.

Restart the Analytics Engine.
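The severity levels in the procedure above are listed in no particular order. In the Syslog standard (RFC 5424) each level has a numeric code, where 0 is the most severe. The sketch below maps the level names to those codes so that you can filter or sort notifications on the receiving side. It assumes the names shown in the Advanced Analytics settings correspond one-to-one to the standard severities; `syslog_severity_code` is a hypothetical helper name.

```shell
#!/bin/sh
# syslog_severity_code is a hypothetical helper name.
# Prints the RFC 5424 numeric severity for a level name (0 is most severe).
syslog_severity_code() {
    case "$1" in
        Emergency)     echo 0 ;;
        Alert)         echo 1 ;;
        Critical)      echo 2 ;;
        Error)         echo 3 ;;
        Warning)       echo 4 ;;
        Notice)        echo 5 ;;
        Informational) echo 6 ;;
        Debug)         echo 7 ;;
        *) echo "unknown severity: $1" >&2; return 1 ;;
    esac
}
```

If the host you test from has the util-linux `logger` command, something like `logger -n <syslog_host> -P <port> -T -p user.notice "test message"` can confirm that your Syslog server accepts TCP messages before you add the notification; the host and port are placeholders.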

Send Notifications to Your Email

To get human-friendly notifications, configure email notifications.

Some Incident Responder actions also send email notifications, including:

Notify User By Email Phishing

Phishing Summary Report

Send Email

Send Template Email

Send Indicators via Email

If you configure these settings correctly, Incident Responder uses IRNotificationSMTPService as the service for these actions. If you configure these settings incorrectly, these actions won't work correctly.

In the navigation bar, click the menu, select Settings, then select Core.

Under NOTIFICATIONS, select Setup Notifications.

Click add, then select Email Notification.

Configure your notification settings:

IP / Hostname – Enter the IP or hostname of your outgoing mail server.

Port – Enter the port number for your outgoing mail server.

SSL – Select if your mail server uses Secure Sockets Layer (SSL) protocol.

Username Required – If your mail server requires a username, select the box, then enter the username.

Password Required – If your mail server requires a password, select the box, then enter the password.

Sender Email Address – Enter the email address the email is sent from.

Recipients – List the email addresses to receive these email notifications, separated by a comma.

E-mail Signature – Enter text that's automatically added to the end of all email notifications.

Notifications by Product – Select the events for which you want to be notified.

Incident Responder:

System Health – All system health alerts for Case Manager and Incident Responder.

Advanced Analytics:

System Health – All system health alerts for Advanced Analytics.

Notable Sessions – A user or asset has reached a risk threshold and become notable. This notification describes which rule was triggered and contains any relevant event details, which are unique to each event type. If an event detail isn't available, it isn't included in the notification.

Anomalies – A rule has been triggered.

AA/CM/OAR Audit – An Exabeam user does something in Advanced Analytics, Case Manager, or Incident Responder that's important to know when auditing their activity history; for example, when someone modifies rule behavior, changes log sources, or changes user roles and permissions.

Job Start – Data processing engines, like Log Ingestion and Messaging Engine (LIME) or the Analytics Engine, have started processing a log.

Job End – Data processing engines, like LIME or the Analytics Engine, have stopped processing a log.

Job Failure – Data processing engines, like LIME or the Analytics Engine, have failed to process a log.

Click ADD NOTIFICATION.

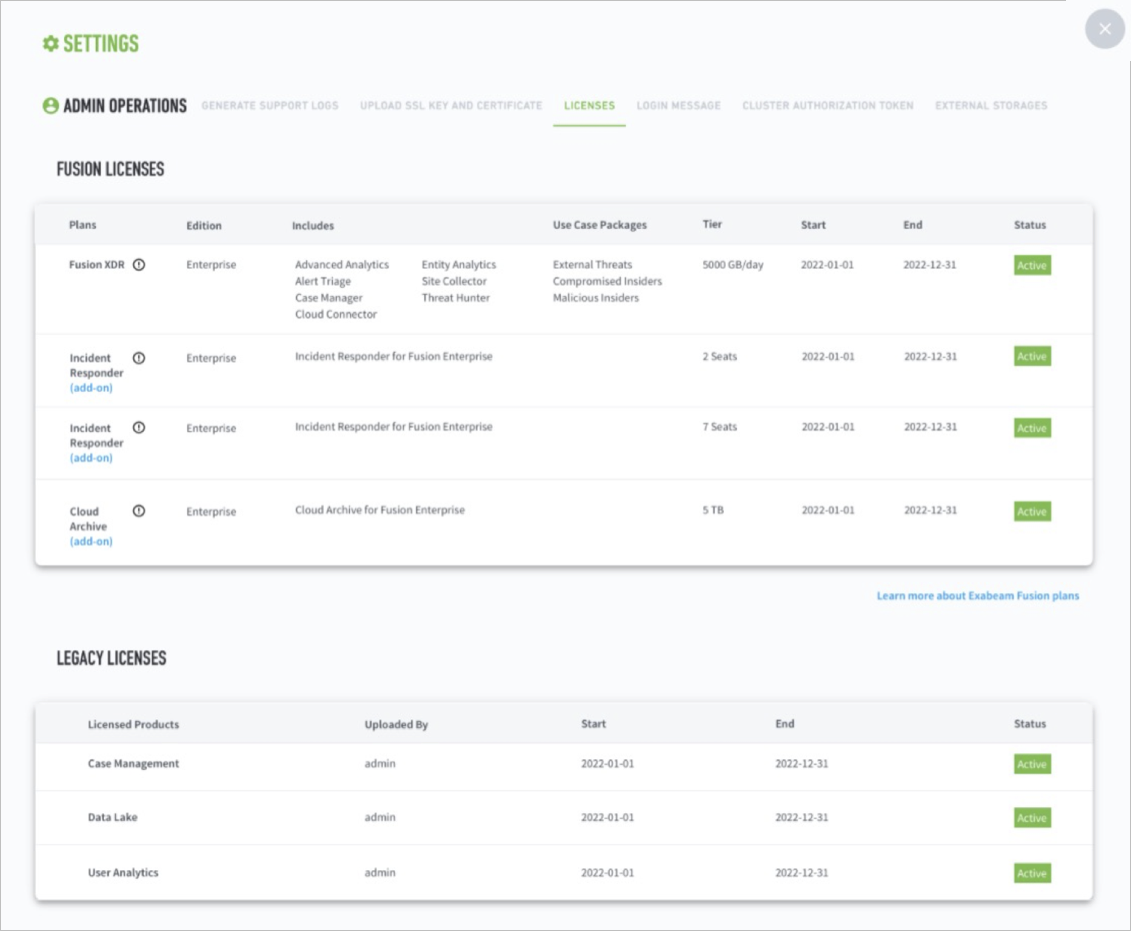

Exabeam Licenses

Exabeam products require a license in order to function. These licenses determine which Exabeam products and features you can use. You are not limited by the amount of external data you can ingest and process.

There are multiple types of Exabeam product licenses available. Exabeam bundles these licenses together and issues you one key to activate all purchased products. For more information on the different licenses, see Types of Exabeam Product Licenses.

License Lifecycle

When you first install Exabeam, the installed instance uses a 30-day grace period license. This license allows you to try out all of the features in Exabeam for 30 days.

Grace Period

Exabeam provides a 30-day grace period for expired licenses before products stop processing data. During the grace period, you will not experience any change in product functionality. There is no limit to the amount of data you can ingest and process.

When the license or grace period is 14 days away from expiring, you will receive a warning alert on the home page and an email.

You can request a new license by contacting your Exabeam account representative or by opening a support ticket.

Expiration Period

When your grace period has ended, you will start to experience limited product functionality. Contact your Exabeam representative to obtain a valid license and restore all product features.

For Advanced Analytics license expirations, the Log Ingestion Engine will continue to ingest data, but the Analytics Engine will stop processing. Threat Hunter and telemetry will also stop working.

You will receive a critical alert on the home page and an email.

License Alerts

License alerts are sent via an alert on the home page and in email when the license or grace period is 14 days away from expiring and when the grace period expires.

The home page alert is permanent until resolved. You must purchase a product license or renew your existing license to continue using Exabeam.
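The alert timeline can be pictured with a small calculation. The sketch below only illustrates the 14-day warning window described above; it is not how the product computes alerts. `license_status` is a hypothetical helper that takes the expiry time and the current time as Unix timestamps.

```shell
#!/bin/sh
# license_status is a hypothetical helper name; it only mirrors the timeline described above.
# Arguments: expiry time and current time, both as Unix timestamps.
license_status() {
    expiry="$1"
    now="$2"
    if [ "$now" -ge "$expiry" ]; then
        echo "expired"
        return
    fi
    # Whole days remaining, rounded down.
    days_left=$(( (expiry - now) / 86400 ))
    if [ "$days_left" -le 14 ]; then
        echo "warning: $days_left days left"
    else
        echo "ok: $days_left days left"
    fi
}
```

For the authoritative expiry date, use the Licenses page in the product.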

To check the status and details of your license, go to Settings > Admin Operations > Licenses.

License Versions

Currently, Exabeam has three versions of its product licenses (V1, V2, and V3). License versions are not backward compatible. If you are upgrading from Advanced Analytics I41 or earlier, you must apply the V3 license version. The table below summarizes how the different license versions are designed to work:

| | V1 | V2 | V3 |
|---|---|---|---|
| Products Supported | | | |
| Product Version | Advanced Analytics I38 and below | Advanced Analytics I41 | Advanced Analytics I46 and above; Data Lake I24 and above |
| Uses unique customer ID | No | No | Yes |
| Federal License Mode | No | No | Yes |
| Available to customers through the Exabeam Community | No | No | Yes |
| License enforced in Advanced Analytics | Yes | Yes | Yes |
| License enforced in Data Lake | NA | NA | No |
| Applied through the UI | No, the license must be placed in a path in Tequila | No, the license must be placed in a path in Tequila | Yes |

Note

Licenses for Advanced Analytics I46 and later must be installed via the GUI on the license management page.

Types of Exabeam Product Licenses

Exabeam licenses specify which products you have access to and for how long. We bundle your product licenses together into one license file. All products that fall under your Exabeam platform share the same expiration dates.

Advanced Analytics product licenses:

User Analytics – This is the core product of Advanced Analytics. Exabeam’s user behavioral analytics security solution provides modern threat detection using behavioral modeling and machine learning.

Threat Hunter – Threat Hunter is a point-and-click advanced search function that allows for searches across a variety of dimensions, such as Activity Types, User Names, and Reasons. It comes fully integrated with User Analytics.

Exabeam Threat Intelligence Services (TIS) – TIS provides real-time actionable intelligence into potential threats to your environment by uncovering indicators of compromise (IOC). It comes fully integrated with the purchase of an Advanced Analytics V3 license. TIS also allows access to telemetry.

Entity Analytics (EA) – Entity Analytics offers analytics capabilities for internet-connected devices and entities beyond users such as hosts and IP addresses within an environment.

Entity Analytics is available as an add-on option. If you are adding Entity Analytics to your existing Advanced Analytics platform, you will be sent a new license key. Note that you may require additional nodes to process asset oriented log sources.

Incident Responder – Also known as Orchestration Automation Response. Incident Responder adds automation to your SOC to make your cyber security incident response team more productive.

Incident Responder is available as an add-on option. If you are adding Incident Responder to your existing Advanced Analytics platform, you will be sent a new license key. Note that you may require additional nodes to support automated incident responses.

Case Manager – Case Manager can fully integrate into Advanced Analytics enabling you to optimize analyst workflow by managing the life cycle of your incidents.

Case Manager is available as an add-on option. If you are adding Case Manager to your existing Advanced Analytics platform, you will be sent a new license key. Note that you may require additional nodes to support this module extension.

After you have purchased or renewed your product licenses, proceed to Download a License.

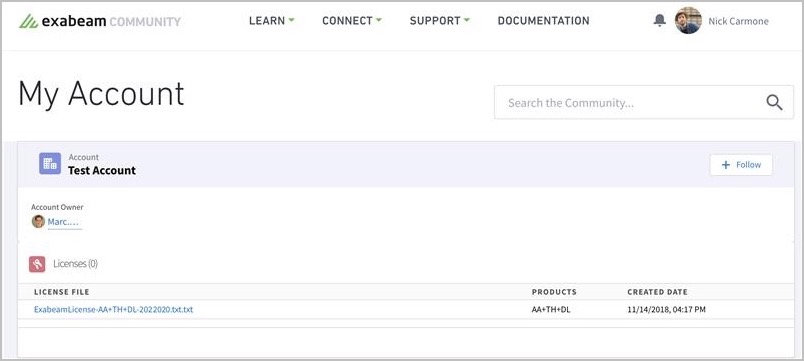

Download an On-premises or Cloud Exabeam License

You can download your unique customer license file from the Exabeam Community.

To download your Exabeam license file:

Log into the Exabeam Community with your credentials.

Click on your username.

Click on My Account.

Click on the text file under the License File section to start the download.

After you have downloaded your Exabeam license, proceed to Apply a License.

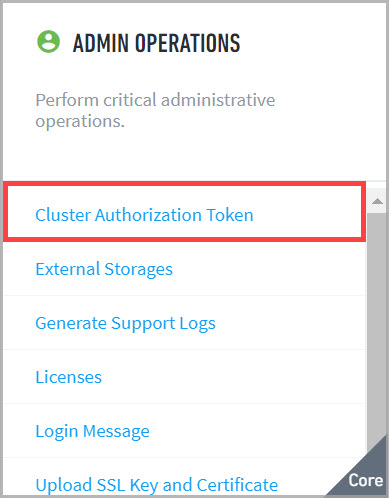

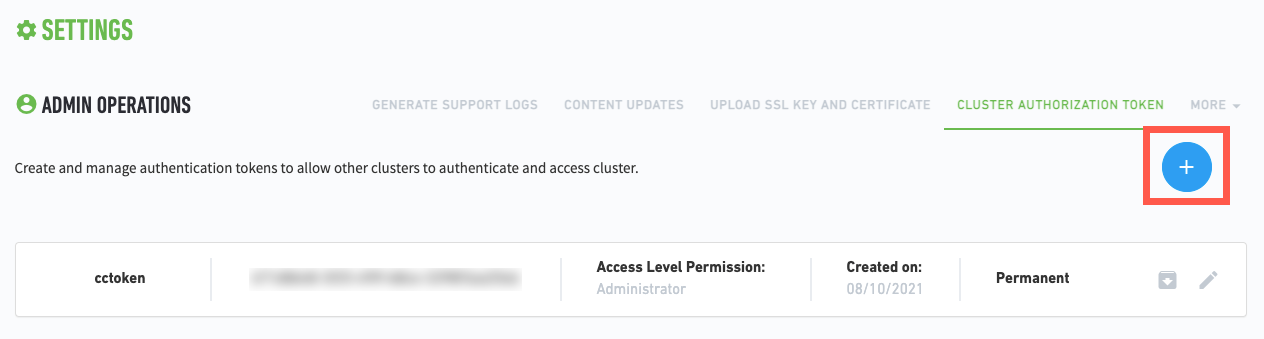

Exabeam Cluster Authentication Token

The cluster authentication token is used to verify identities between clusters that have been deployed in phases as well as HTTP-based log collectors. Each peer cluster in a query pool must have its own token. You can set expiration dates during token creation or manually revoke tokens at any time.

Note

This operation is not supported for Data Lake versions i40.2 through i40.5. For i40.6 and higher, see the Contents of the exabeam-API-docs.zip file section of the following document: Exabeam SaaS API Documentation.

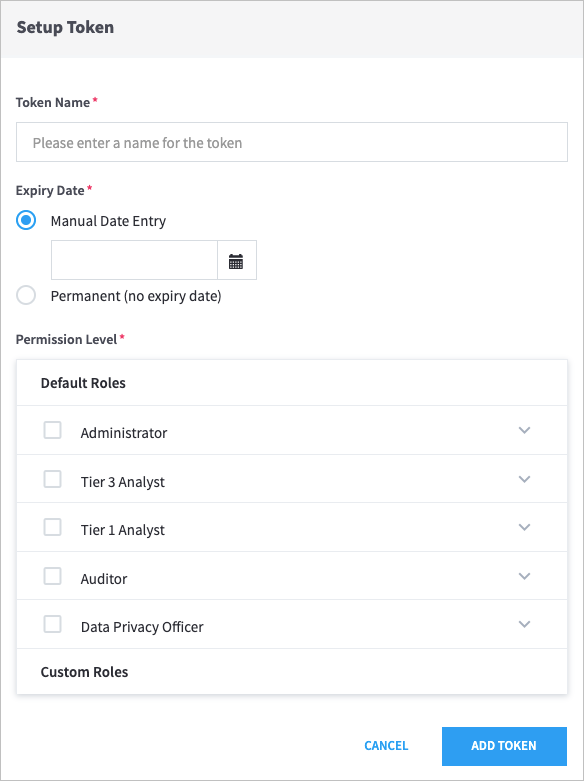

To generate a token:

Go to Settings > Core > Admin Operations > Cluster Authentication Token.

The Cluster Authorization Token page appears.

Click the icon that opens the Setup Token dialog box.

The Setup Token dialog box appears.

Enter a Token Name, and then select an Expiry Date.

Important

Token names can contain only letters, numbers, and spaces.

Select the Default Roles for the token.

Click Add Token.

Use this generated file to allow your API(s) to authenticate by token. Ensure that your API uses ExaAuthToken in its requests. For curl clients, the request structure resembles the following:

curl -H "ExaAuthToken:<generated_token>" https://<external_host>:<api_port>/<api_request_path>
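Because token names can contain only letters, numbers, and spaces, a script that prepares token names might validate them first. The sketch below is one way to do that, assuming "letters" means ASCII letters; `valid_token_name` is a hypothetical helper and not part of the Exabeam API.

```shell
#!/bin/sh
# valid_token_name is a hypothetical helper name.
# Succeeds when the name is non-empty and contains only ASCII letters, digits, and spaces.
valid_token_name() {
    printf '%s\n' "$1" | LC_ALL=C grep -Eq '^[A-Za-z0-9 ]+$'
}

# Example use:
#   valid_token_name "SOC API 1" && echo "name accepted"
#   valid_token_name "soc_api"   || echo "name rejected (underscore)"
```

Single-line names are assumed; the check does not handle names that contain line breaks.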

Set Up Authentication and Access Control

What Are Accounts & Groups?

Peer Groups

Peer groups can be a team, department, division, geographic location, etc. and are defined by the organization. Exabeam uses this information to compare a user's behavior to that of their peers. For example, when a user logs into an application for the first time Exabeam can evaluate if it is normal for a member of their peer group to access that application. When Dynamic Peer Grouping is enabled, Exabeam will use machine learning to choose the best possible peer groups for a user for different activities based on the behaviors they exhibit.

Executives

Exabeam watches executive movements very closely because they are privileged and have access to sensitive and confidential information, making their credentials highly desirable for account takeover. Identifying executives allows the system to model executive assets, thereby prioritizing anomalous behaviors associated with them. For example, we assign a higher score to an anomaly triggered by a non-executive user accessing an executive workstation.

Service Accounts

A service account is a user account that belongs to an application rather than an end user and runs a particular piece of software. During the setup process, we work with an organization to identify patterns in service account labels and use this information to classify accounts as service accounts based on their behavior. Exabeam also adds or removes points from sessions based on service account activity. For example, if a service account logs into an application interactively, we will add points to the session because service accounts should not typically log in to applications.

What Are Assets & Networks?

Workstations & Servers

Assets are computer devices such as servers, workstations, and printers. During the setup process, we will ask you to review and confirm asset labels. It is important for Exabeam to understand the asset types within the organization - are they Domain Controllers, Exchange Servers, Database Servers or workstations? This adds further context to what Exabeam sees within the logs. For example, if a user performs interactive logons to an Exchange Server on a daily basis, the user is likely an Exchange Administrator. Exabeam automatically pulls in assets from the LDAP server and categorizes them as servers or workstations based on the OS property or the Organizational Units they belong to. In this step, we ask you to review whether the assets tagged by Exabeam are accurate. In addition to configuration of assets during setup, Exabeam also runs an ongoing classifier that classifies assets as workstations or servers based on their behavior.

Network Zones

Network zones are internal network locations defined by the organization rather than a physical place. Zones can be cities, business units, buildings, or even specific rooms. For example, "Atlanta" can refer to a network zone within an organization rather than the city itself (all according to an organization's preference). Administrators can upload information regarding network zones for their internal assets via CSV or add them manually one at a time.

Asset Groups

An asset group is a collection of assets that perform the same function in the organization and need to be treated as a single entity from an anomaly detection perspective. An example of an asset group would be a collection of Exchange Servers. Grouping them this way is useful to our modeling process because it allows us to treat an asset group as a single entity, reducing the number of false positives that are generated when users connect to multiple servers within that group. As a concrete example, if a user regularly connects to email exchange server #1 then Exabeam builds a baseline that says this is their normal behavior. But exchange servers are often load-balanced, and if the user then connects to email exchange server #2 we can say that this is still normal behavior for them because the exchange servers are one Asset Group. Other examples of asset groups are SharePoint farms or Virtual Desktop Infrastructure (VDI).

Common Access Card (CAC) Authentication

Exabeam supports Common Access Card (CAC) authentication. CAC is the principal card used to enable physical access to buildings and controlled spaces, and it provides access to computer networks and systems. Analysts have CAC readers on their workstations that read their Personal Identity Verification (PIV) credentials and authenticate them to use various network resources.

Note the following restrictions:

Configure CAC users that are authorized to access Exabeam from the Exabeam User Management page.

During the user provisioning, the CAC analysts must be assigned roles. The roles associated with a CAC user will be used for authorization when they login.

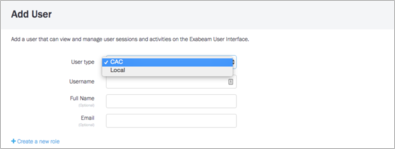

Figure 1. Add User menu

Configure Client Certificates

Copy your ca.pem file to the /home/exabeam directory on the master node.

Run the following commands on the master node (note that an alias of cacbundle is applied to the certificate being installed):

source /opt/exabeam/bin/shell-environment.bash
docker cp ca.pem exabeam-web-common:/
docker exec exabeam-web-common keytool -import -trustcacerts -alias cacbundle -file ca.pem -keystore /opt/exabeam/web-common/config/custom/truststore.jks -storepass changeit -noprompt

Note

With docker exec exabeam-web-common, exabeam-web-common does not resolve to the docker container. As a result, you must query docker ps and find the container ID or use --name exabeam-web-common.

Note

If you need to remove the alias, use the following command:

docker exec -it exabeam-web-common keytool -delete -alias cacbundle

In /opt/exabeam/config/common/web/custom/application.conf, the sslClientAuth flag must be set to true, as shown in the following example:

webcommon {
  service {
    interface = "0.0.0.0"
    #hostname = "<hostname>"
    port = 8484
    https = true
    sslKeystore = "$EXABEAM_HOME/config/custom/keystore.jks"
    sslKeypass = "password"
    # The following property enables Two-Way Client SSL Authentication
    sslClientAuth = true

To install client certificates for CAC, add the client certificate bundle to the trust store on the master host.

To verify the contents of the trust store on the master host, run the following:

# For Exabeam Data Lake
sudo docker exec exabeam-web-common-host1 /bin/bash -c "keytool -list -v -keystore /opt/exabeam/config/custom/truststore.jks -storepass changeit"
# For Exabeam Advanced Analytics
sudo docker exec exabeam-web-common /bin/bash -c "keytool -list -v -keystore /opt/exabeam/config/custom/truststore.jks -storepass changeit"

When you have completed the configuration changes, restart web-common.

source /opt/exabeam/bin/shell-environment.bash; web-common-restart

Configure a CAC User

To associate the credentials to a login, create a CAC user by navigating to Settings > Core > User Management > Users > Add User and select CAC in User type.

Ensure that the username matches the CN attribute of the CAC user.

If LDAP authentication is enabled, use LDAP group mapping to enable the users.
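To check a username against a certificate before provisioning, the CN can be pulled out of the certificate's subject string. The Python sketch below is a simplified illustration: the subject value is made up, the parser ignores quoted values and multi-valued RDNs, and the exact, case-sensitive comparison is an assumption rather than documented Exabeam behavior.

```python
import re


def common_name(subject_dn):
    """Extract the first CN value from an RFC 4514-style subject string.

    Simplified: splits on commas that are not backslash-escaped and does
    not handle quoted values or multi-valued RDNs.
    """
    for part in re.split(r"(?<!\\),", subject_dn):
        key, _, value = part.strip().partition("=")
        if key.strip().upper() == "CN":
            return value.strip()
    return None


def username_matches(username, subject_dn):
    """Exact, case-sensitive comparison (an assumption for this sketch)."""
    return username == common_name(subject_dn)


# Hypothetical certificate subject, for illustration only.
subject = "CN=DOE.JOHN.A.1234567890,OU=PKI,O=Example Org,C=US"
print(common_name(subject))                                # DOE.JOHN.A.1234567890
print(username_matches("DOE.JOHN.A.1234567890", subject))  # True
print(username_matches("johndoe", subject))                # False
```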

Configure an LDAP Server for CAC Authentication

To configure an Active Directory server for CAC authentication, follow the instructions in Set Up LDAP Server and Set Up LDAP Authentication for using Active Directory servers to manage CAC user access.

After LDAP is configured, the identity held by the Active Directory server is used to grant or deny CAC card access to Exabeam.

Delete a CAC User Account

CAC user accounts are deleted by removing the users from the Mongo database.

As the Exabeam user, source the environment.

$ sos

Find the user that you want to delete by running the following command (replacing <userid> with the user's ID):

mongo --quiet exabeam_user_db --eval 'db.exabeam_user_collection.find({_id:"<userid>"})'

The output is similar to the following:

{ "_id" : "johndoe", "email" : "", "password" : "6008c8a26014989270343e9bb40548360a400a425523cc3636954dac33f", "passwordReset" : false, "roles" : [ ], "passwordLastChanged" : NumberLong("1427907776669"), "lastLoginAt" : NumberLong(0), "failedLoginCount" : 0, "fromLDAP" : false, "passwordHistory" : [ { "hashAlgorithm" : "sha256", "password" : "6008c8a26014989270343e9bb40548360a400a425523cc3636954dac33f", "salt" : "[B@3bd37f64" } ], "salt" : "[B@3bd37f64", "hashAlgorithm" : "sha256" }

Note

If you do not receive output, it indicates that the user does not exist in the database. Make sure that you entered the ID correctly and run the command again.

To delete the user, run the following command:

mongo --quiet exabeam_user_db --eval 'db.exabeam_user_collection.remove({_id:"johndoe"})'

If the user is successfully deleted, the output is as follows:

WriteResult({ "nRemoved" : 1 })

Note

If you do not receive output, the user was not successfully deleted. Make sure that you entered the ID correctly and run the command again. Refresh the page in the UI to confirm that the user is deleted from the user list.

Role-Based Access Control

You can control the responsibilities and activities of your SOC team members with Role-Based Access Control (RBAC). To tailor access, you can assign local users, LDAP users, or SAML authenticated users one or more roles within Exabeam.

The responsibilities of a role are determined by the permissions that the role allows. If a user is assigned more than one role, that user receives the permissions of each role.

Note

If a user is assigned multiple roles with conflicting permissions, Exabeam enforces the more permissive role. For example, if a role with read-only permissions and a role with full permission are both assigned to a user, then the user has full permission.
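One way to picture this behavior is as a union of the permission sets of all assigned roles. The Python sketch below illustrates that reading only; the role names, permission names, and function are hypothetical and do not reflect how Exabeam stores roles.

```python
# Hypothetical roles and permission names, for illustration only.
ROLE_PERMISSIONS = {
    "Read Only": {"View incidents", "View comments"},
    "Responder": {"View incidents", "Edit incidents", "Create incidents"},
}


def effective_permissions(assigned_roles):
    """Union the permissions of every role assigned to a user."""
    granted = set()
    for role in assigned_roles:
        granted |= ROLE_PERMISSIONS.get(role, set())
    return granted


perms = effective_permissions(["Read Only", "Responder"])
print(sorted(perms))
print("Edit incidents" in perms)  # True: the more permissive role applies
```

Because permissions only accumulate in this model, adding a restrictive role to a user never removes access that another role already grants.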

To access the Roles page, navigate to Settings > User Management > Roles.

Out-of-the-Box Roles

Advanced Analytics provides five out-of-the-box pre-configured roles:

Administrator

This role is intended for administrative access to Exabeam. Users assigned to this role can perform administrative operations on Exabeam, such as configuring the appliance to fetch logs from the SIEM, connecting to Active Directory to pull in contextual information, and restarting the analytics engine. The default admin credential belongs to this role. This is a predefined role provided by Exabeam and cannot be deleted.

Default permissions include:

Permission | Description |

|---|---|

Manage Users and Context Sources | Manage users and roles in the Exabeam Security Intelligence Platform, as well as the context sources used to enhance the logs ingested (e.g. assets, peer groups, service accounts, executives). |

Manage context tables | Manage users, assets or other objects within Context Tables. |

Manage Content Packages | Users add/remove/configure content packages for automatic installation. |

View Metrics | View the IR Metrics page. |

Manage Data Ingest | Configure log sources and feeds and email-based ingest. |

Add IR comments | Add IR comments. |

Upload Custom Services | Upload custom actions or services. |

Create incidents | Create incidents. |

Delete incidents | Delete incidents. |

Manage Custom Services and Packages | User can manage custom services and related packages |

Manage ingest rules | Add, edit, or delete rules for how incidents are assigned, restricted, and prioritized on ingest. |

Manage Queues | Create, edit, delete, and assign membership to queues |

Manage Templates | Create, edit, or delete playbook templates. |

Manage Triggers | Create, update, or delete playbook triggers. |

Run Actions | Launch individual actions from the user interface. |

Manage Bi-directional Communication | Configure inbound and outbound settings for Bi-Directional Communications. |

Manage Incident Configuration | Users can manage the Incident Configurations including Incident Types, Fields, Layouts, and Checklists. |

Manage Playbooks | Create, update, or delete playbooks. |

Manage Services | Configure, edit, or delete services (3rd party integrations). |

Run Playbooks | Run a playbook manually from the workbench. |

Reset Incident Workbench | User can reset incident workbench |

All Admin Ops | Perform all Exabeam administrative operations such as configuring the appliance, connecting to the log repository and Active Directory, setting up log feeds, managing users and roles that access the Exabeam UI, and performing system health checks. |

View comments | View comments. |

View health | View health. |

View Raw Logs | View the raw logs that are used to build the events on the AA timeline. |

View Rules | View configured rules that determine how security events are handled |

View API | View API. |

View incidents | View incidents. |

Edit incidents | Edit an incident's fields, edit tasks, entities & artifacts. |

Manage Rules | Create/Edit/Reload rules that determine how security events are handled |

Bulk edit | Users can edit multiple incidents at the same time. |

Search Incidents | Can search keywords in IR via the search bar. |

Basic Search | Perform basic search on the Exabeam homepage. Basic search allows you to search for a specific user, asset, session, or a security alert. |

Auditor

Users assigned to this role have only view privileges within the Exabeam UI. They can view all activities within the Exabeam UI, but cannot make any changes such as adding comments or approving sessions. This is a predefined role provided by Exabeam.

Default permissions include:

Permission | Description |

|---|---|

View Comments | View comments |

View Activities | View all notable users, assets, sessions, and related risk reasons in the organization. |

View Global Insights | View the organizational models built by Exabeam. The histograms that show the normal behavior for all entities in the organization can be viewed. |

View Executive Info | View the risk reasons and the timeline of the executive users in the organization. You will be able to see the activities performed by executive users along with the associated anomalies. |

View Incidents | View incidents. |

View Infographics | View all the infographics built by Exabeam. You will be able to see the overall trends for the organization. |

View Insights | View the normal behaviors for specific entities within the organization. The histograms for specific users and assets can be viewed. |

Search Incidents | Can search keywords in Incident Responder via the search bar. |

Basic Search | Perform basic search on the Exabeam homepage. Basic search allows you to search for a specific user, asset, session, or a security alert. |

View Search Library | View the Search Library provided by Exabeam and the corresponding search results associated with the filters. |

Threat Hunting | Perform threat hunting on Exabeam. Threat hunting allows you to query the platform across a variety of dimensions, such as finding all users whose sessions contain data exfiltration activities or malware on their asset. |

Tier 1 Analyst

Users assigned to this role are junior security analysts or incident desk responders who support day-to-day enterprise security operations and monitoring. This role is not authorized to make any changes to the Exabeam system except for adding user, session, and lockout comments. Users in this role cannot approve sessions or lockout activities. This is a predefined role provided by Exabeam.

Default permissions include:

Permission | Description |

|---|---|

Add Advanced Analytics Comments | Add comments for the various entities (users, assets and sessions) within Exabeam. |

Add Incident Responder Comments | Add Incident Responder comments. |

Create Incidents | Create incidents. |

Run Playbooks | Run a playbook manually from the workbench. |

Run Actions | Launch individual actions from the user interface. |

View comments | View comments. |

View Global Insights | View the organizational models built by Exabeam. The histograms that show the normal behavior for all entities in the organization can be viewed. |

View incidents | View incidents. |

View Infographics | View all the infographics built by Exabeam. You will be able to see the overall trends for the organization. |

View Activities | View all notable users, assets, sessions and related risk reasons in the organization. |

View Executive Info | View the risk reasons and the timeline of the executive users in the organization. You will be able to see the activities performed by executive users along with the associated anomalies. |

View Insights | View the normal behaviors for specific entities within the organization. The histograms for specific users and assets can be viewed. |

Tier 3 Analyst

Users assigned to this role perform more complex investigations and remediation plans. They can review user sessions and account lockouts, add comments, approve activities, and perform threat hunting. This is a predefined role provided by Exabeam and cannot be deleted.

Default permissions include:

Permission | Description |

|---|---|

Add Advanced Analytics Comments | Add comments for the various entities (users, assets and sessions) within Exabeam. |

Add Incident Responder Comments | Add Incident Responder comments. |

Upload Custom Services | Upload custom actions or services. |

Create incidents | Create incidents. |

Delete incidents | Delete incidents. |

Manage Playbooks | Create, update, or delete playbooks. |

Manage Queues | Create, edit, delete, and assign membership to queues |

Manage Services | Configure, edit, or delete services (3rd party integrations). |

Manage Triggers | Create, update, or delete playbook triggers. |

Run Actions | Launch individual actions from the user interface. |

Manage Bi-directional Communication | Configure inbound and outbound settings for Bi-Directional Communications. |

Manage Data Ingest | Configure log sources and feeds and email-based ingest. |

Manage ingest rules | Add, edit, or delete rules for how incidents are assigned, restricted, and prioritized on ingest. |

Manage Templates | Create, edit, or delete playbook templates. |

Run Playbooks | Run a playbook manually from the workbench. |

View Activities | View all notable users, assets, sessions and related risk reasons in the organization. |

View Comments | View comments. |

View Executive Info | View the risk reasons and the timeline of the executive users in the organization. You will be able to see the activities performed by executive users along with the associated anomalies. |

View Global Insights | View the organizational models built by Exabeam. The histograms that show the normal behavior for all entities in the organization can be viewed. |

View Infographics | View all the infographics built by Exabeam. You will be able to see the overall trends for the organization. |

View Rules | View configured rules that determine how security events are handled. |

View Insights | View the normal behaviors for specific entities within the organization. The histograms for specific users and assets can be viewed. |

Bulk Edit | Users can edit multiple incidents at the same time. |

Delete entities and artifacts | Users can delete entities and artifacts. |

Manage Rules | Create/Edit/Reload rules that determine how security events are handled |

Manage Watchlist | Add or remove users from the Watchlist. Users that have been added to the Watchlist are always listed on the Exabeam homepage, allowing them to be scrutinized closely. |

Approve Lockouts | Accept account lockout activities for users. Accepting lockouts indicates to Exabeam that the specific set of behaviors for that lockout activity sequence are whitelisted and are deemed normal for that user. |

Accept Sessions | Accept sessions for users. Accepting sessions indicates to Exabeam that the specific set of behaviors for that session are whitelisted and are deemed normal for that user. Warning: This permission should be given only sparingly, if at all. Accepting sessions is not recommended. The best practice for eliminating unwanted alerts is through tuning the rules and/or models. |

Edit incidents | Edit an incident's fields, edit entities & artifacts. |

Sending incidents to Incident Responder | Send incidents to Incident Responder. |

Basic Search | Perform basic search on the Exabeam homepage. Basic search allows you to search for a specific user, asset, session, or a security alert. |

Search Incidents | Can search keywords in IR via the search bar. |

Threat Hunting | Perform threat hunting on Exabeam. Threat hunting allows you to query the platform across a variety of dimensions, such as finding all users whose sessions contain data exfiltration activities or malware on their asset. |

Manage Search Library | Create saved searches as well as edit them. |

View Search Library | View the Search Library provided by Exabeam and the corresponding search results associated with the filters. |

Data Privacy Officer

This role is needed only when the data masking feature is turned on within Exabeam. Users assigned to this role are the only users that can view personally identifiable information (PII) in an unmasked form. They can review user sessions and account lockouts, add comments, approve activities, and perform threat hunting. This is a predefined role provided by Exabeam.

See Mask Data Within the Advanced Analytics UI for more information on this feature.

Default permissions include:

Permission | Description |

|---|---|

Add Advanced Analytics Comments | Add comments for the various entities (users, assets and sessions) within Exabeam. |

View comments | View comments. |

View Global Insights | View the organizational models built by Exabeam. The histograms that show the normal behavior for all entities in the organization can be viewed. |

View incidents | View incidents. |

View Infographics | View all the infographics built by Exabeam. You will be able to see the overall trends for the organization. |

View Activities | View all notable users, assets, sessions and related risk reasons in the organization. |

View Executive Info | View the risk reasons and the timeline of the executive users in the organization. You will be able to see the activities performed by executive users along with the associated anomalies. |

View Insights | View the normal behaviors for specific entities within the organization. The histograms for specific users and assets can be viewed. |

Manage Watchlist | Add or remove users from the Watchlist. Users that have been added to the Watchlist are always listed on the Exabeam homepage, allowing them to be scrutinized closely. |

Sending incidents to Incident Responder | Sending incidents to Incident Responder. |

Accept Sessions | Accept sessions for users. Accepting sessions indicates to Exabeam that the specific set of behaviors for that session are whitelisted and are deemed normal for that user. |

Basic Search | Perform basic search on the Exabeam homepage. Basic search allows you to search for a specific user, asset, session, or a security alert. |

Threat Hunting | Perform threat hunting on Exabeam. Threat hunting allows you to query the platform across a variety of dimensions, such as finding all users whose sessions contain data exfiltration activities or malware on their asset. |

Manage Search Library | Create saved searches as well as edit them |

View Search Library | View the Search Library provided by Exabeam and the corresponding search results associated with the filters. |

View Unmasked Data (PII) | Show all personally identifiable information (PII) in a clear text form. When data masking is enabled within Exabeam, this permission should be enabled only for select users that need to see PII in a clear text form. |

Mask Data Within the Advanced Analytics UI

Note

To enable or disable and configure data masking, contact your Exabeam technical representative.

Note

Data masking is not supported in Case Management or Incident Responder modules.

Data masking within the UI ensures that personal data cannot be read, copied, modified, or removed without authorization during processing or use. With data masking enabled, the only users able to see a user's personal information are those assigned the permission "View Clear Text Data". The default role "Data Privacy Officer" is assigned this permission out of the box. Data masking is a configurable setting and is turned off by default.

To enable data masking in the UI, open /opt/exabeam/config/tequila/custom/application.conf, and set dataMaskingEnabled to true.

If your application.conf is empty, copy the following text and paste it into the file:

tequila {
  PII {
    # Globally enable/disable data masking on all the PII configured fields. Default value is false.
    dataMaskingEnabled = true
  }
}

You're able to fully customize which PII data is masked or shown in your deployment. The following fields are available when configuring PII data masking:

Default: This is the standard list of PII values controlled by Exabeam. If data masking is enabled, all of these fields are encrypted.

Custom: Encrypt additional fields beyond the default list by adding them to this custom list. The default is empty.

Excluded: Do not encrypt these fields. Add fields that are in the default list here to expose their values in your deployment. The default is empty.
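The way the three lists combine can be sketched as simple set arithmetic. The Python snippet below is an illustration under stated assumptions: the function name is hypothetical, the default list is shortened, and treating the excluded list as taking precedence over the custom list is an assumption, not documented behavior.

```python
def masked_fields(default, custom=(), excluded=()):
    """Return fields to mask: default plus custom, minus excluded."""
    return (set(default) | set(custom)) - set(excluded)


# Shortened, hypothetical default list; the real list is maintained by Exabeam.
default_fields = ["user", "account", "task_name"]

result = masked_fields(default_fields, custom=["address"], excluded=["task_name"])
print(sorted(result))  # ['account', 'address', 'user']
```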

For example, if you want to mask all default fields other than "task name" and also want to mask the "address" field, then you would configure the lists as shown below:

PII {
  # Globally enable/disable data masking on all the PII configured fields. Default value is false.
  dataMaskingEnabled = true
  dataMaskingSuffix = ":M"
  encryptedFields = {
    #encrypt fields
    event {
      default = [
        #EventFieldName
        "user",
        "account",
        ...
        "task_name"
      ]
      custom=["address"]
      excluded=["task_name"]
    }
    ...
  }
}

Mask Data for Notifications

You can configure Advanced Analytics to mask specific fields when sending notable sessions and/or anomalous rules via email, Splunk, and QRadar. This prevents exposure of sensitive data when viewing alerts sent to external destinations.

Note

Advanced Analytics activity log data is not masked or obfuscated when sent via Syslog. It is your responsibility to upload the data to a dedicated index which is available only to users with appropriate privileges.

Before proceeding through the steps below, ensure your deployment has:

Enabled data masking (instructions below)

Configured a destination for Notable Sessions notifications sent from Advanced Analytics via Incident Notifications

By default, all fields in a notification are unmasked. To enable data masking for notifications, the Enabled field needs to be set to true. This is located in the application.conf file in the path /opt/exabeam/config/tequila/custom.

NotificationRouter {
  ...
  Masking {
    Enabled = true
    Types = []
    NotableSessionFields = []
    AnomaliesRulesFields = []
  }
}

Use the Types field to add the notification destinations (Syslog, Email, QRadar, and/or Splunk). Then, use the NotableSessionFields and AnomaliesRulesFields to mask specific fields included in a notification.
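The relationship between these settings can be sketched in Python: a field is masked only when masking is enabled, the destination appears in Types, and the field is listed. This is an illustration only. The `****` placeholder, the payload shape, and the function name are assumptions, and the actual masked output produced by Advanced Analytics may look different.

```python
MASK = "****"  # Placeholder chosen for this sketch only.

# Mirrors the Masking block; values here are sample settings.
masking = {
    "Enabled": True,
    "Types": ["Syslog", "Splunk"],
    "NotableSessionFields": ["user", "src_host", "src_ip"],
}


def mask_notification(payload, destination, config):
    """Return a copy of payload with configured fields masked for destination."""
    if not config["Enabled"] or destination not in config["Types"]:
        return dict(payload)
    hidden = set(config["NotableSessionFields"])
    return {key: (MASK if key in hidden else value) for key, value in payload.items()}


session = {"user": "jdoe", "src_host": "wks-12", "risk_score": 95}
print(mask_notification(session, "Splunk", masking))
print(mask_notification(session, "Email", masking))
```

In this sketch, the Splunk copy has `user` and `src_host` replaced while the Email copy is unchanged, because Email is not in the sample Types list.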

For example, if you want to mask the user, source host and IP, and destination host and IP for notifications sent via syslog and Splunk, then you would configure the lists as shown below:

NotificationRouter {
  ...
  Masking {
    Enabled = true
    Types = [Syslog, Splunk]
    NotableSessionFields = ["user", "src_host", "src_ip", "dest_host", "dest_ip"]
  }
}

Set Up User Management

Users are the analysts that have access to the Exabeam UI to review and investigate activity. These analysts also have the ability to accept sessions. Exabeam supports local authentication or authentication against an LDAP server.

Roles

Exabeam supports role-based access control. Under Default Roles are the roles that Exabeam has created; these cannot be deleted or modified. Selecting a role displays the permissions associated with that role.

Users can also create custom roles by selecting Create a New Role. In this dialogue box you will be asked to name the role and select the permissions associated with it.

Add a User Role

Exabeam's default roles include Administrator, Auditor, and Tier (1 and 3) Analyst. If these default roles do not suit your needs, create custom roles with the permissions that best fit your organization.

To add a new role:

Navigate to Settings > Exabeam User Management > Roles.

Click Create Role.

Fill in the Create a new role fields and click SAVE. The search box allows you to search for specific permissions.

Your newly created role should appear in the Roles UI under Custom Roles and can be assigned to any analyst.

To start assigning users to the role, select the role and click Next, which will direct you to the Users UI to edit user settings. Edit the configuration for the users you wish to add the role to and click Next to apply the changes.

Supported Permissions

Administration

All Admin Ops: Perform all Exabeam administrative operations such as configuring the appliance, connecting to the log repository and Active Directory, setting up log feeds, managing users and roles that access the Exabeam UI, and performing system health checks.

Manage Users and Context Sources: Manage users and roles in the Exabeam Security Intelligence Platform, as well as the context sources used to enhance the logs ingested (e.g. assets, peer groups, service accounts, executives).

Manage context tables: Manage users, assets or other objects within Context Tables.

Comments

Add Advanced Analytics Comments: Add comments for the various entities (users, assets and sessions) within Exabeam.

Add Incident Responder Comments

Create

Create incidents

Upload Custom Services: Upload custom actions or services.

Delete

Delete incidents

Manage

Manage Custom Services and Packages: User can manage custom services and related packages

Manage Data Ingest: Configure log sources and feeds and email-based ingest.

Manage ingest rules: Add, edit, or delete rules for how incidents are assigned, restricted, and prioritized on ingest.

Manage Queues: Create, edit, delete, and assign membership to queues

Manage Playbook Templates: Create, edit, or delete playbook templates.

Manage Triggers: Create, update, or delete playbook triggers.

Run Actions: Launch individual actions from the user interface.

Manage Bi-directional Communication: Configure inbound and outbound settings for Bi-Directional Communications.

Manage Incident Configuration: Users can manage the Incident Configurations including Incident Types, Fields, Layouts, Case Manager Notifications and Checklists.

Manage Playbooks: Create, update, or delete playbooks.

Manage Services: Configure, edit, or delete services (3rd party integrations).

Run Playbooks: Run a playbook manually from the workbench.

Reset Incident Workbench: User can reset incident workbench

View

Manage Incident Configs: Manage Incident Responder configs.

View API

View Executive Info: View the risk reasons and the timeline of the executive users in the organization. You will be able to see the activities performed by executive users along with the associated anomalies.

View health

View Raw Logs: View the raw logs that are used to build the events on the AA timeline.

View Infographics: View all the infographics built by Exabeam. You will be able to see the overall trends for the organization.

View Metrics: View the Incident Responder Metrics page.

View Activities: View all notable users, assets, sessions and related risk reasons in the organization.

View comments

View Global Insights: View the organizational models built by Exabeam. The histograms that show the normal behavior for all entities in the organization can be viewed.

View incidents

View Insights: View the normal behaviors for specific entities within the organization. The histograms for specific users and assets can be viewed.

View Rules: View configured rules that determine how security events are handled

Edit & Approve

Approve Lockouts: Accept account lockout activities for users. Accepting lockouts indicates to Exabeam that the specific set of behaviors for that lockout activity sequence are whitelisted and are deemed normal for that user.

Bulk Edit: Users can edit multiple incidents at the same time.

Edit incidents: Edit an incident's fields, edit entities & artifacts.

Manage Watchlist: Add or remove users from the Watchlist. Users that have been added to the Watchlist are always listed on the Exabeam homepage, allowing them to be scrutinized closely.

Accept Sessions: Accept sessions for users. Accepting sessions indicates to Exabeam that the specific set of behaviors for that session are whitelisted and are deemed normal for that user.

Delete entities and artifacts: Users can delete entities and artifacts.

Manage Rules: Create/Edit/Reload rules that determine how security events are handled

Sending incidents to Incident Responder

Search

Manage Search Library: Create saved searches as well as edit them.

Basic Search: Perform basic search on the Exabeam homepage. Basic search allows you to search for a specific user, asset, session, or a security alert.

Threat Hunting: Perform threat hunting on Exabeam. Query the platform across a variety of dimensions, such as finding all users whose sessions contain data exfiltration activities or malware on their asset.

Manage Threat Hunting Public searches: Create, update, delete saved public searches

Search Incidents: Can search keywords in Incident Responder via the search bar.

View Search Library: View the Search Library provided by Exabeam and the corresponding search results associated with the filters.

Data Privacy

View Unmasked Data (PII): Show all personally identifiable information (PII) in a clear text form. When data masking is enabled within Exabeam, this permission should be enabled only for select users that need to see PII in a clear text form.

Manage Users

Understand the difference between Roles and Users. Configure the analysts that have access to the Exabeam User Interface, add the analyst's information, assign them roles, and set up user permissions and access based on your organization's needs.

Users

Users are the analysts that have access to the Exabeam UI to review and investigate activity. These analysts have specific roles, permissions, and can be assigned Exabeam objects within the platform. They also have the ability to accept sessions. Exabeam supports local authentication or authentication against an LDAP server.

Add an Exabeam User

Navigate to Settings > Exabeam User Management > Users.

Click Add User.

Fill in the new user fields, select one or more roles, and then click SAVE.

Your newly created user should appear in the Users UI.

User Password Policies

Exabeam users must adhere to the following default password security requirements:

Passwords must:

Be between 8 and 32 characters

Contain at least one each of uppercase, lowercase, numeric, and special characters

Contain no blank space

User must change password every 90 days

New passwords cannot match last 5 passwords

SHA256 hashing is applied to store passwords

Only administrators can reset passwords and unblock users who have been locked out due to too many consecutive failed logins
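The character and length rules listed above can be pre-checked with a short script before a password is submitted. The following Python sketch is not Exabeam's validator: the function name is hypothetical, and it assumes that "special character" means ASCII punctuation. It does not cover password history or expiration.

```python
import string

# Values taken from the default policy listed above.
MIN_LENGTH = 8
MAX_LENGTH = 32


def password_problems(password):
    """Return a list of default-policy rules the password violates."""
    problems = []
    if not MIN_LENGTH <= len(password) <= MAX_LENGTH:
        problems.append("length must be 8 to 32 characters")
    if not any(c.isupper() for c in password):
        problems.append("needs an uppercase character")
    if not any(c.islower() for c in password):
        problems.append("needs a lowercase character")
    if not any(c.isdigit() for c in password):
        problems.append("needs a numeric character")
    # Assumption: "special" means ASCII punctuation.
    if not any(c in string.punctuation for c in password):
        problems.append("needs a special character")
    if any(c.isspace() for c in password):
        problems.append("must not contain blank space")
    return problems


print(password_problems("Str0ng!Passw0rd"))  # []
print(password_problems("weak pass"))
```

Returning every violated rule, rather than stopping at the first, lets a user fix a rejected password in one attempt.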

The following management policies are adjustable:

Strong password policy can be changed by editing the webcommon block in /opt/exabeam/config/common/web/custom/application.conf.

webcommon {
  ...
  auth {
    defaultAdmin {
      username = "admin"
      password = "changeme"
    }
    ...
    passwordConstraints {
      minLength = 8
      maxLength = 32
      lowerCaseCount = 1
      upperCaseCount = 1
      numericCount = 1
      specialCharCount = 1
      spacesAllowed = false
      passwordHistoryCount = 5 # 0 to disable password history checking
    }
    failedLoginLockout = 0 # 0 to disable loginLockout
    passwordExpirationDays = 90 # 0 to disable password expiration
    passwordHashing = "sha256" # accept either sha256 or bcrypt as options
  }
  ...
}

Default idle session timeout is 4 hours. Edit the silhouette.authenticator.cookieIdleTimeout value (in seconds) in /opt/exabeam/config/common/web/custom/application.conf.

silhouette.authenticator.cookieIdleTimeout = 14400

Set Up LDAP Server

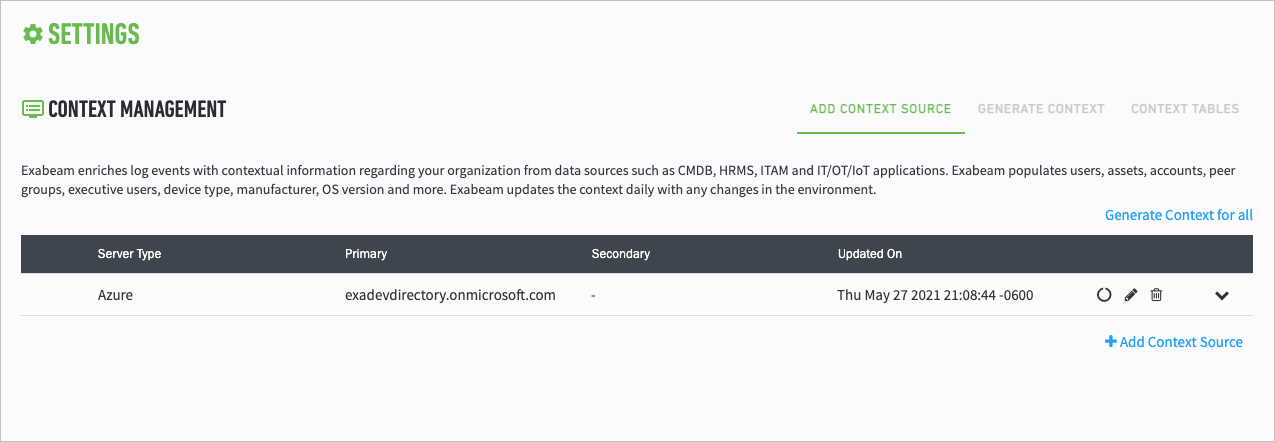

If you are adding an LDAP server for the first time, the ADD CONTEXT SOURCE page displays when you reach the CONTEXT MANAGEMENT settings page. Otherwise, a list of LDAP servers appears; click Add Context Source to add more.

Select a Source Type:

Microsoft Active Directory

NetIQ eDirectory

Microsoft Azure Active Directory

The add/edit CONTEXT MANAGEMENT page displays the fields necessary to query and pull context information from your LDAP server(s), depending on the source chosen.

For Microsoft Active Directory:

Primary IP Address or Hostname – Enter the LDAP IP address or hostname for the primary server of the given server type.

Note

For context retrieval in Microsoft Active Directory environments, we recommend pointing to a Global Catalog server. To list Global Catalog servers, enter the following command in a Windows command prompt window:

nslookup -querytype=srv _gc._tcp.acme.local

Replace acme.local with your company's domain name.

Secondary IP Address or Hostname – If the primary LDAP server is unavailable, Exabeam falls back to the secondary LDAP server if configured.

TCP Port – Enter the TCP port of the LDAP server. Optionally, select Enable SSL (LDAPS) and/or Global Catalog to auto-populate the TCP port information accordingly.

Bind DN – Enter the bind distinguished name (DN), or leave blank for anonymous bind.

Bind Password – Enter the bind password, if applicable.

LDAP attributes for Account Name – This field is auto-populated with the value sAMAccountName. Modify the value if your AD deployment uses a different attribute.
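The Global Catalog lookup in the note above relies on the standard Active Directory SRV record `_gc._tcp.<domain>`. The Python sketch below builds that record name and lists the conventional LDAP port numbers (389, 636, 3268, 3269). It is an assumption, not something this guide confirms, that these are the values the Enable SSL (LDAPS) and Global Catalog options fill in. The function names are hypothetical.

```python
def gc_srv_record(domain):
    """Return the DNS SRV record name that lists Global Catalog servers."""
    return f"_gc._tcp.{domain.strip('.')}"


# Conventional LDAP ports keyed by (use_ssl, global_catalog).
# Assumption: the UI auto-populates these standard values.
CONVENTIONAL_LDAP_PORTS = {
    (False, False): 389,   # LDAP
    (True, False): 636,    # LDAPS
    (False, True): 3268,   # Global Catalog
    (True, True): 3269,    # Global Catalog over SSL
}


def conventional_port(use_ssl=False, global_catalog=False):
    """Look up the conventional TCP port for the selected options."""
    return CONVENTIONAL_LDAP_PORTS[(use_ssl, global_catalog)]
```

The record name returned by `gc_srv_record` is what you would pass to `nslookup -querytype=srv`.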

For NetIQ eDirectory:

Primary IP Address or Hostname – Enter the LDAP IP address or hostname for the primary server of the given server type.

Secondary IP Address or Hostname – If the primary LDAP server is unavailable, Exabeam falls back to the secondary LDAP server if configured.

TCP Port – Enter the TCP port of the LDAP server. Optionally, select Enable SSL (LDAPS) and/or Global Catalog to auto-populate the TCP port information accordingly.

Bind DN – Enter the bind distinguished name (DN), or leave blank for anonymous bind.

Bind Password – Enter the bind password, if applicable.

Base DN – .

LDAP Attributes – Provide the list of attributes that the Exabeam Directory Service (EDS) component should query. When you test the connection to the eDirectory server, EDS collects the list of available attributes from the server and displays it as a drop-down menu. Select an attribute name from that list or enter a name of your own. Only the names of the LDAP attributes you want EDS to poll are required (that is, not necessarily the full list). EDS does not support other types of attributes, so you cannot add “new attributes” to the list below.

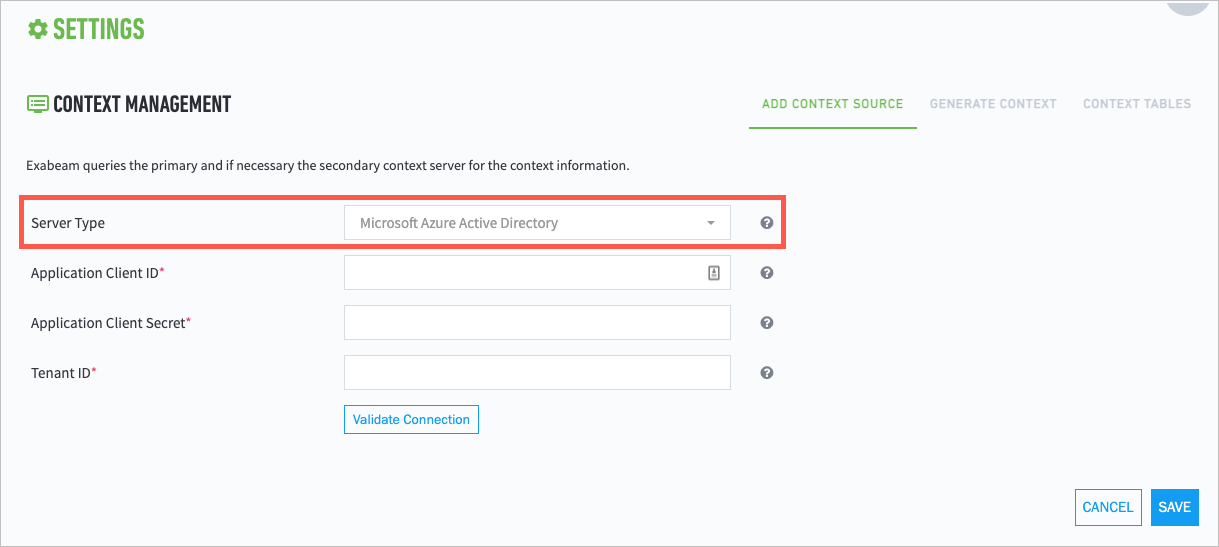

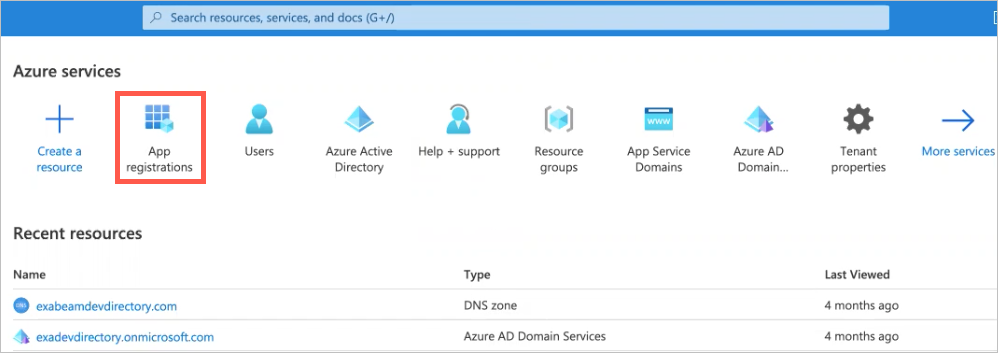

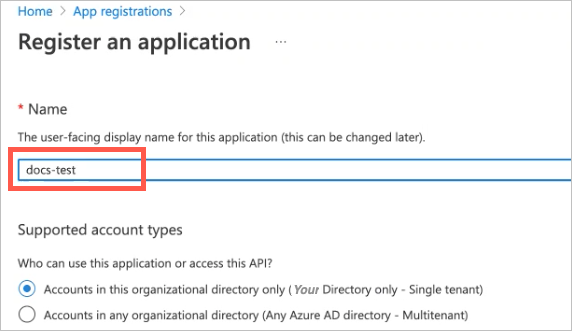

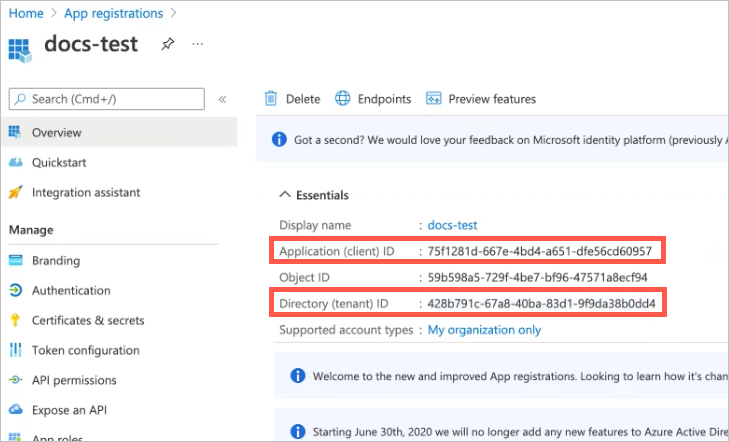

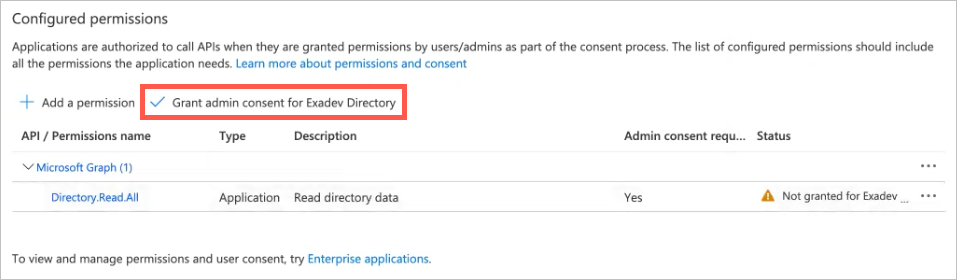

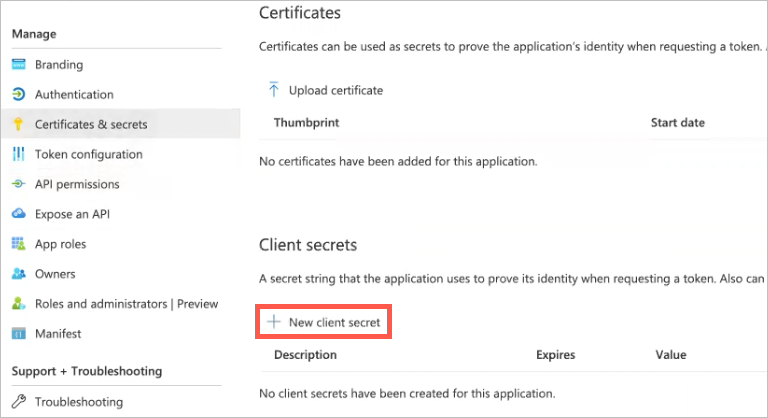

For Microsoft Azure Active Directory:

Application Client ID — In App Registration in Azure Active Directory, select the application and copy the Application ID in the Overview tab.

Application Client Secret — In App Registration in Azure Active Directory, select the application and click on Certificates & Secrets to view or create a new client secret.

Tenant ID — In App Registration in Azure Active Directory, select the application and copy the Tenant ID in the Overview tab.

Click Validate Connection to test the LDAP settings.

Note

If you selected Global Catalog for either Microsoft Active Directory or NetIQ eDirectory, this button displays as Connect & Get Domains.

Click Save to save your context source.

Set Up LDAP Authentication

In addition to local authentication, Exabeam can authenticate users via an external LDAP server.

When you arrive at this page, Enable LDAP Authentication is selected by default and the LDAP attribute name is already populated. To change the LDAP attribute, enter the new account name and click Save. To add an LDAP group, select Add LDAP Group and enter the DN of the group you would like to add. Test Settings tells you how many analysts Exabeam found in the group. From here you can select which role(s) to assign. Note that these roles are assigned to the group and not to the individual analysts; if an analyst changes groups, their role automatically changes to the role(s) associated with their new group.
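Because roles are attached to LDAP groups rather than to individual analysts, an analyst's roles can be thought of as a function of current group membership. The Python sketch below illustrates that idea only. The group DNs and role names are placeholders, and taking the union of roles across several groups is an assumption rather than documented behavior.

```python
def effective_roles(user_group_dns, group_roles):
    """Return the roles an analyst receives from the LDAP groups they belong to.

    group_roles maps a group DN to the roles assigned to that group.
    Assumption: membership in several groups yields the union of their roles.
    """
    roles = set()
    for dn in user_group_dns:
        roles.update(group_roles.get(dn, ()))
    return roles


# Placeholder group DNs and role names, for illustration only.
GROUP_ROLES = {
    "CN=SOC-Tier1,OU=Groups,DC=acme,DC=local": {"role-a"},
    "CN=SOC-Admins,OU=Groups,DC=acme,DC=local": {"role-b", "role-c"},
}
```

Moving an analyst from the first group to the second changes the result from `{"role-a"}` to `{"role-b", "role-c"}` without any per-user edit, which is the behavior the paragraph above describes.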

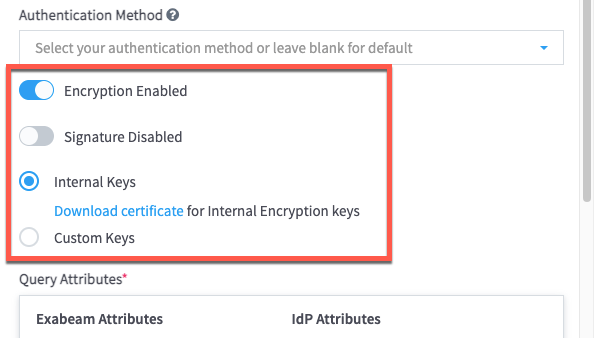

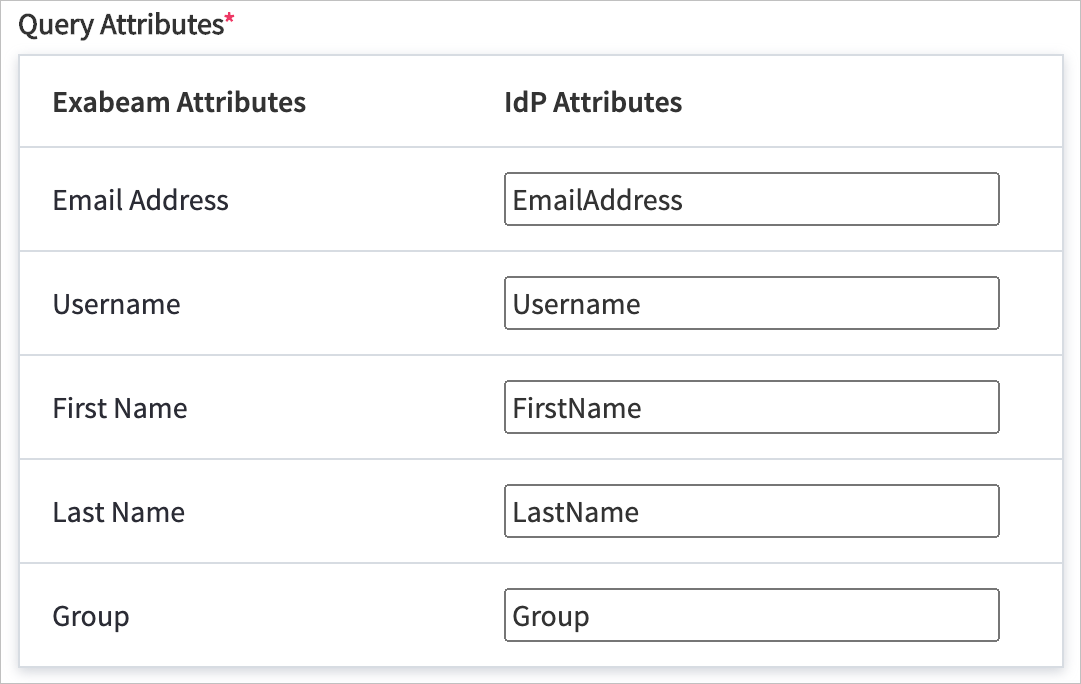

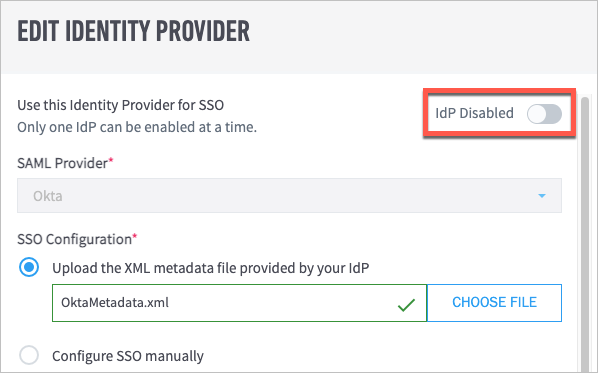

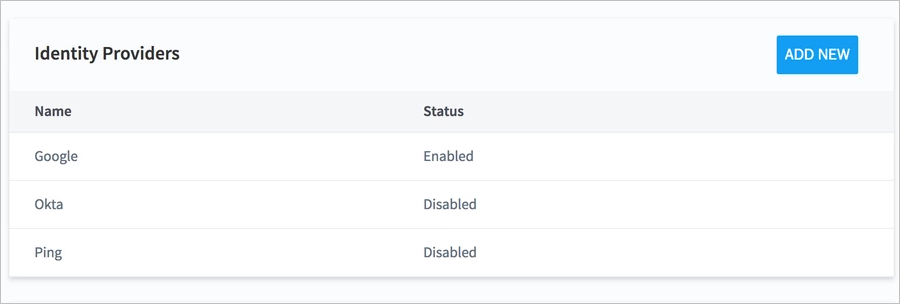

Third-Party Identity Provider Configuration

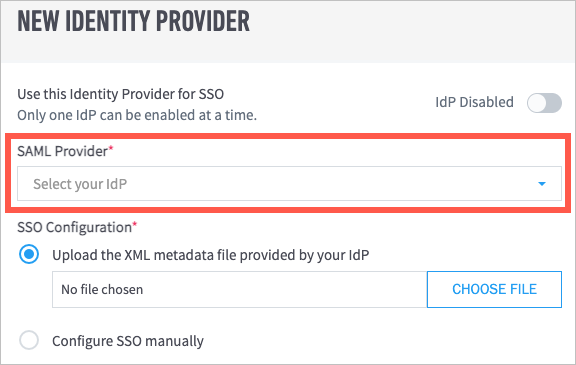

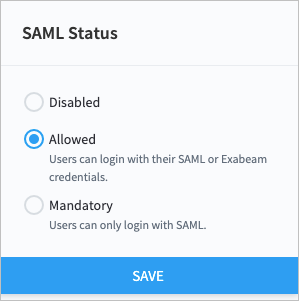

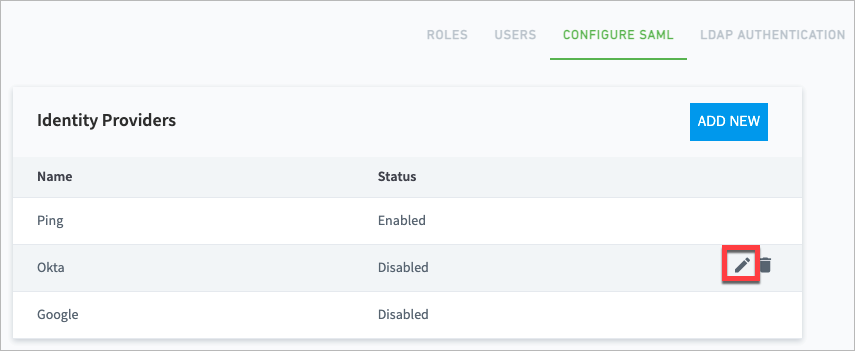

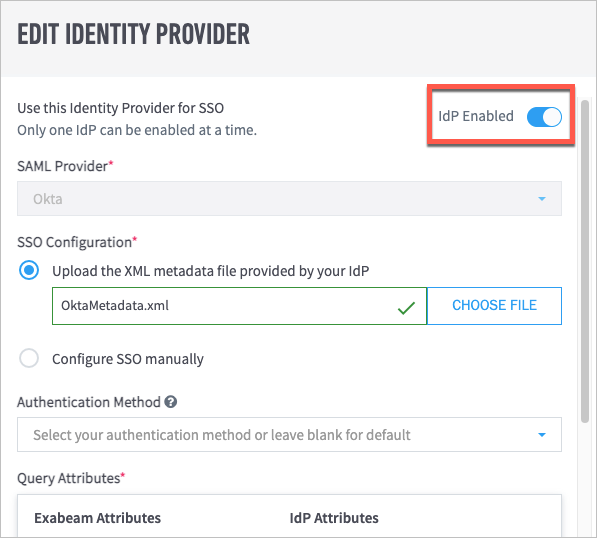

Exabeam supports integration with SAML 2.0 compliant third-party identity providers (IdPs) for single sign-on (SSO), multi-factor authentication, and access control. Once an IdP is added to your product, you can make IdP authentication mandatory for users to log in to the product, or you can allow users to log in through either the IdP or local authentication.

Note

You can add multiple IdPs to your Exabeam product, but only one IdP can be enabled at a time.

Add Exabeam to Your SAML Identity Provider

This section provides instructions for adding Exabeam to your SAML 2.0 compliant identity provider (IdP). For detailed instructions, refer to your IdP's user guide.

The exact procedures for configuring IdPs to integrate with Exabeam vary between vendors, but the general tasks that need to be completed include the following (not necessarily in the same order):

Begin the procedure to add a new application in your IdP for Exabeam (if needed, refer to your IdP's user guide for instructions).

In the appropriate configuration fields, enter the Exabeam Entity ID and the Assertion Consumer Service (ACS) URL as shown in the following:

Entity ID:

https://<exabeam_primary_host>:8484/api/auth/saml2/<identity_provider>/login

ACS URL:

https://<exabeam_primary_host>:8484/api/auth/saml2/<identity_provider>/handle-assertion

Important

Make sure that you replace

<exabeam_primary_host>with the IP address or domain name of your primary host. The only acceptable values for <identity_provider> are the following:adfsgooglepingoktaothers

If you are using Microsoft AD FS, Google IdP, Ping Identity, or Okta, enter the corresponding value from the preceding list. For all other IdPs, enter

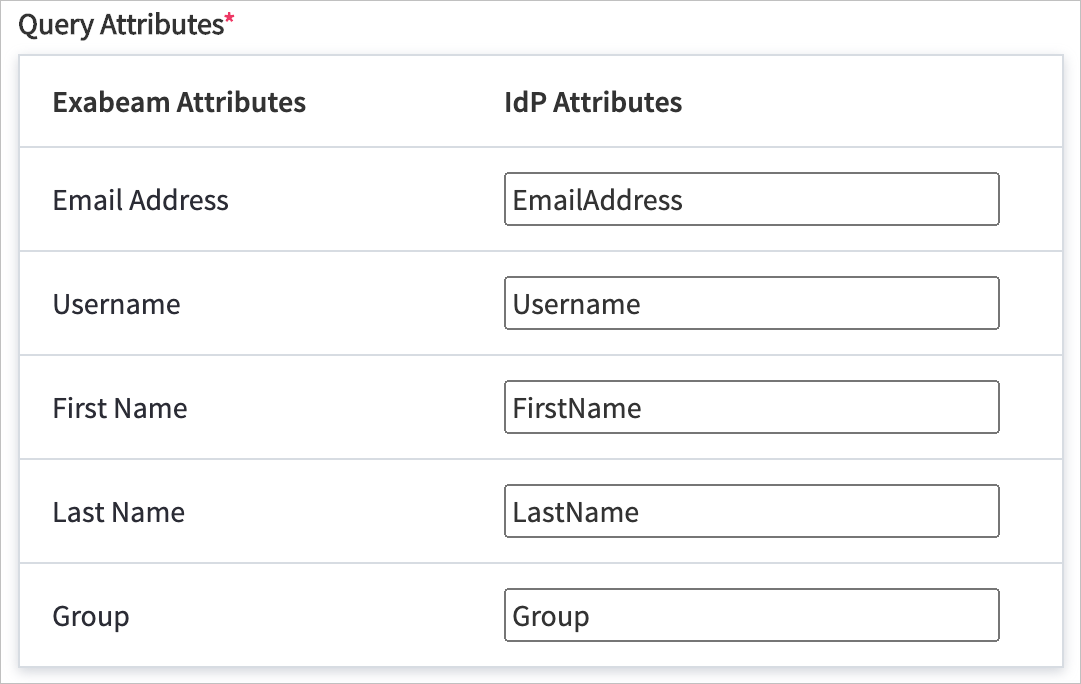

others. All of the values are case sensitive.In the attribute mapping section, enter descriptive values for the following IdP user attributes:

Email address

First name

Last name

Group

Username (this attribute is optional)

Note

The actual names of these user attributes may vary between the different IdPs, but each IdP should have the corresponding attributes.

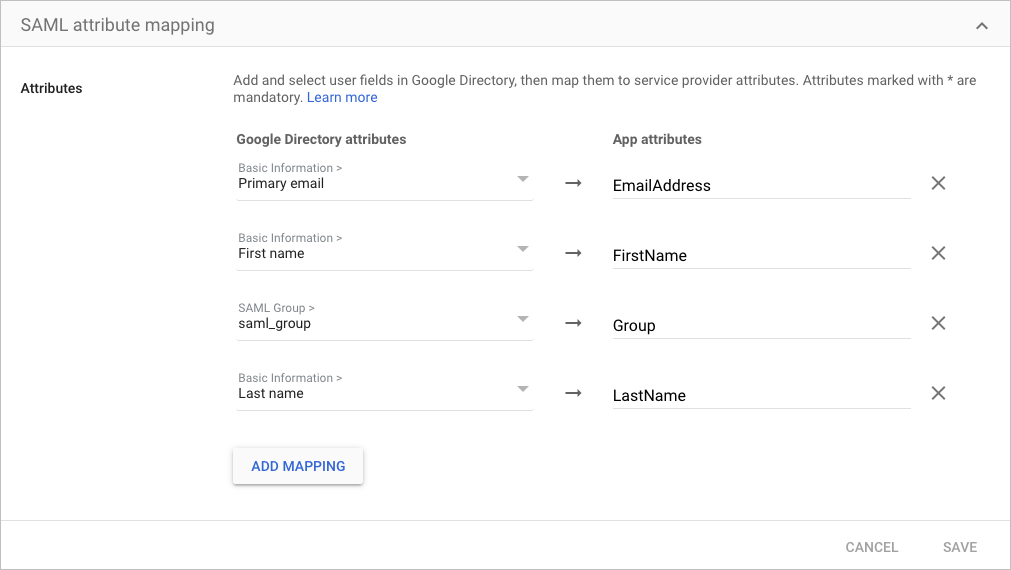

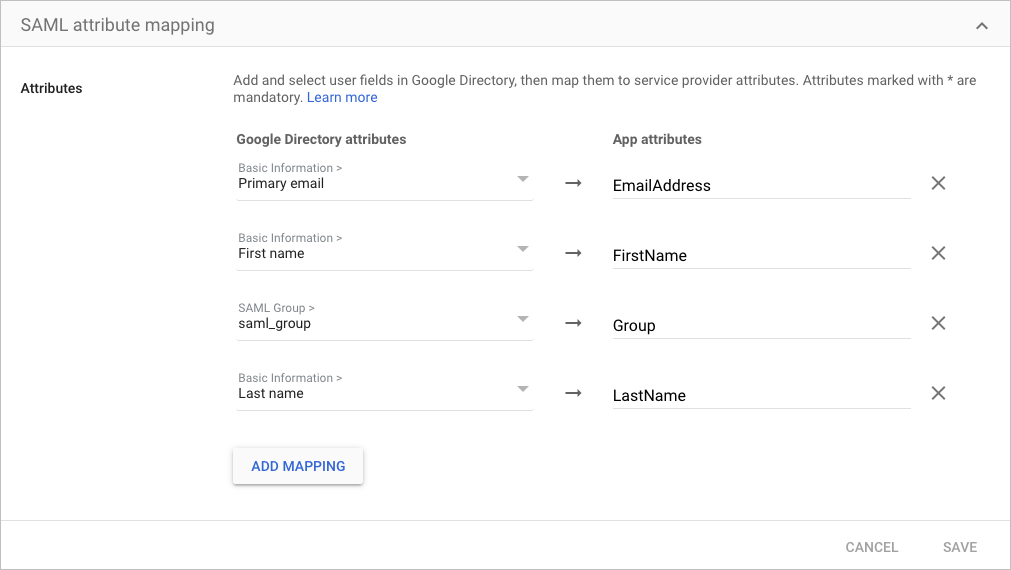

For example, if Primary email is the user email attribute in your IdP, you could enter EmailAddress as the descriptive value. The following is an example of a completed attribute map in Google IdP:

Important

When you Configure Exabeam for SAML Authentication, you need to use the same descriptive values to map the Exabeam query attributes with the corresponding IdP user attributes.

Complete any additional steps in your IdP that are necessary to finish the configuration. Refer to your IdP user guide for details.

Copy the IdP's connection details and download the IdP certificate or, if available, download the SAML metadata file.

Note

You need either the connection details and the IdP certificate or the SAML metadata file to complete the integration in Exabeam.
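The Entity ID and ACS URL described earlier in this procedure follow a fixed pattern, so they can be generated rather than typed by hand. The following Python sketch assumes only the URL templates and the five accepted provider values given above; the function name and the returned dictionary keys are hypothetical.

```python
# Accepted <identity_provider> values; the comparison is case sensitive.
ALLOWED_IDENTITY_PROVIDERS = ("adfs", "google", "ping", "okta", "others")


def saml_endpoints(primary_host, identity_provider):
    """Build the Entity ID and ACS URL for a given primary host and IdP value."""
    if identity_provider not in ALLOWED_IDENTITY_PROVIDERS:
        raise ValueError(
            f"identity_provider must be one of {ALLOWED_IDENTITY_PROVIDERS}, "
            f"got {identity_provider!r}"
        )
    base = f"https://{primary_host}:8484/api/auth/saml2/{identity_provider}"
    return {
        "entity_id": f"{base}/login",
        "acs_url": f"{base}/handle-assertion",
    }
```

Rejecting a value such as `Okta` up front reflects the case-sensitivity requirement and avoids a mismatch that would otherwise surface only when a login attempt fails.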

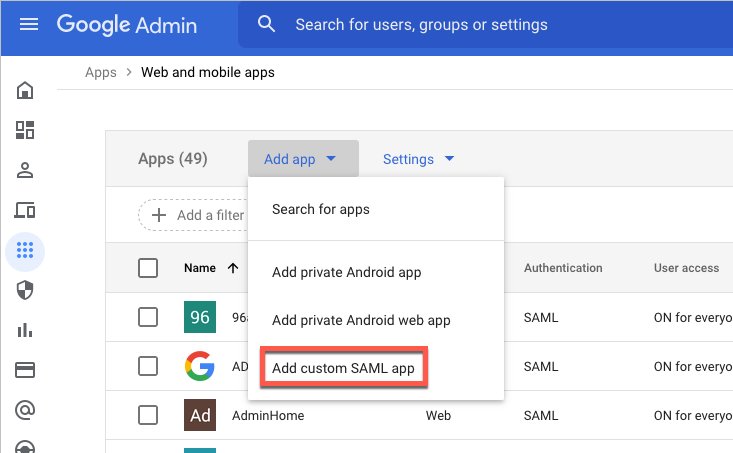

From the main menu on the left, select Apps and then click Web and mobile apps.

From the Add app drop-down menu, click Add custom SAML app.

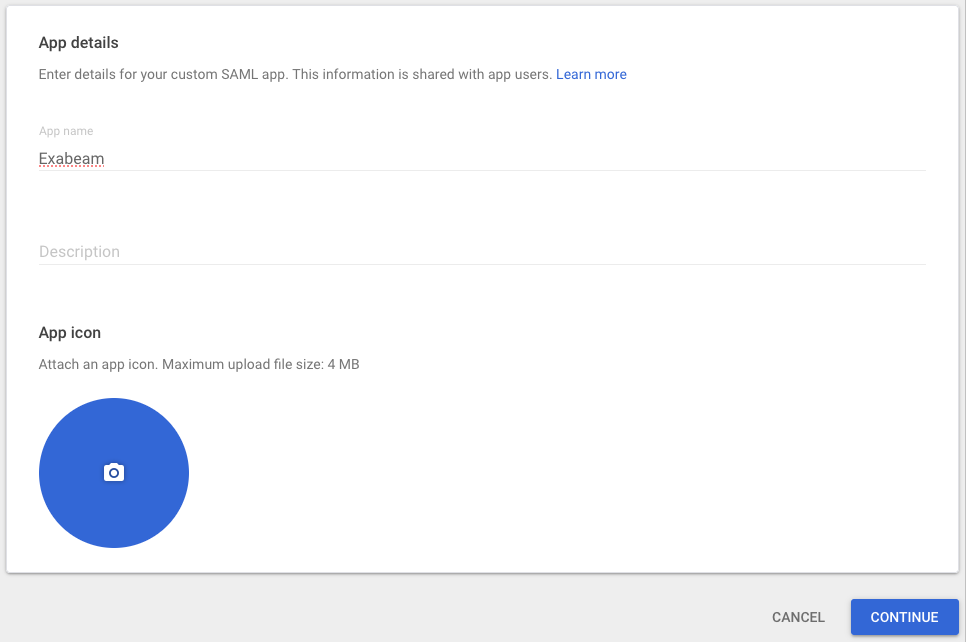

The App Details section opens.

In the App name field, enter a name.

Under App icon, click the blue circle, navigate to an image file that can be used as an icon and click to upload it.

Click Continue.

The Google Identity Provider Details section opens.

Click Download IdP Metadata.

Note

The IdP metadata file needs to be uploaded to Exabeam when you Configure Exabeam for SAML Authentication.

Click Continue.

The Service Provider Details section opens.

Enter the ACS URL and Entity ID as shown in the following:

ACS URL:

https://<exabeam_primary_host>:8484/api/auth/saml2/google/handle-assertion

Entity ID:

https://<exabeam_primary_host>:8484/api/auth/saml2/google/login

Note

Make sure that you replace <exabeam_primary_host> with the IP address or domain name of your primary host.

Click Continue.

The Attribute Mapping section opens.

Click Add Mapping, and then from the Select field drop-down menu, select Primary email.

Repeat the previous step for each of the following attributes:

Primary email

First name

Last name

Group

In the App attributes fields, enter descriptive values for the attributes.

For example, for the Primary email attribute, you could enter EmailAddress for the descriptive value. The following is an example of a completed attribute map:

Important

When you Configure Exabeam for SAML Authentication, you need to use the same descriptive values to map the Exabeam query attributes with the corresponding IdP user attributes.

Click Continue.

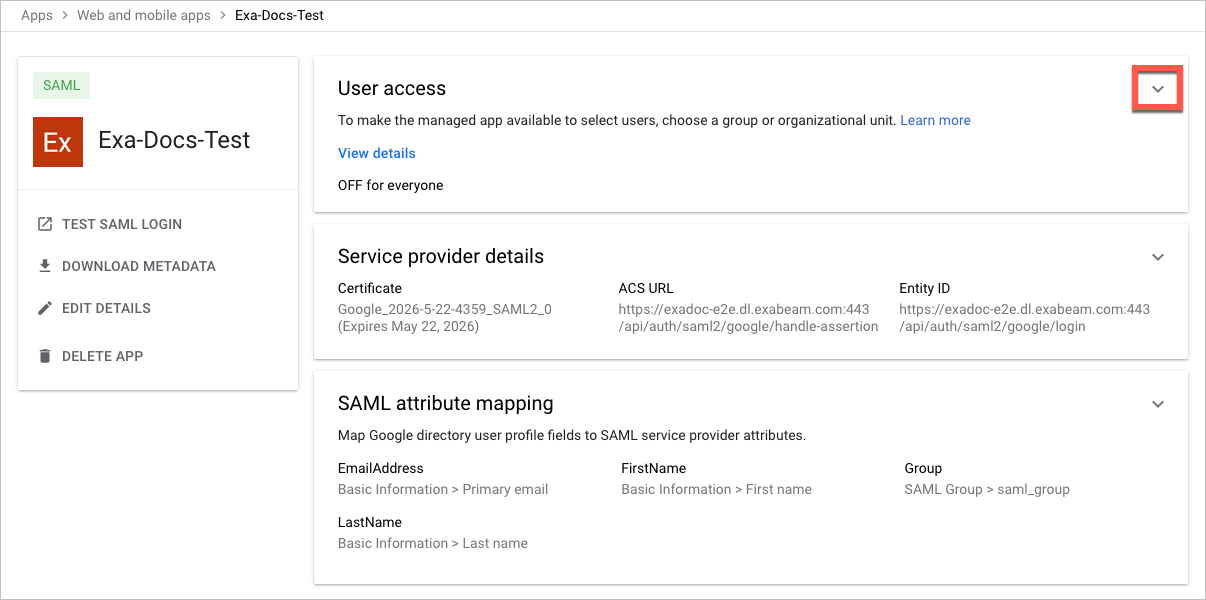

The details page opens for your Exabeam app.

In the User Access panel, click the Expand panel icon to begin assigning the appropriate organizational units and groups to your Exabeam app and manage its service status.

You are now ready to Configure Exabeam for SAML Authentication.
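The descriptive values entered in the IdP must be repeated exactly when the query attributes are filled in on the Exabeam side. A quick consistency check, sketched below in Python, can catch mismatches before you test a login. The dictionary keys and the function name are hypothetical, and the exact, case-sensitive comparison is an assumption.

```python
# Hypothetical keys for the four required attributes.
REQUIRED_ATTRIBUTES = ("email", "first_name", "last_name", "group")


def mapping_mismatches(idp_values, exabeam_values):
    """Return the required attributes whose descriptive values are missing or differ.

    idp_values: descriptive values entered in the IdP attribute map.
    exabeam_values: values entered as Exabeam query attributes.
    """
    return [
        name
        for name in REQUIRED_ATTRIBUTES
        if not idp_values.get(name) or idp_values.get(name) != exabeam_values.get(name)
    ]
```

An empty result means the two sides agree for every required attribute. The optional Username attribute is deliberately left out of the check.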

Note

The following instructions include procedural information for configuring both Azure AD and Exabeam to complete the IdP setup.

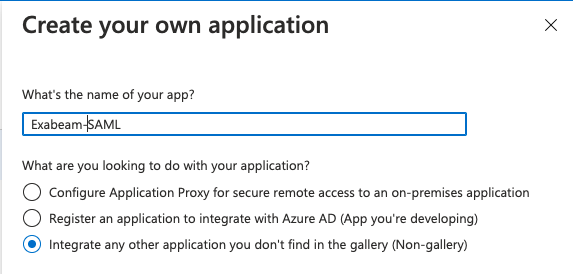

Log in to Microsoft Azure and navigate to Enterprise Applications.

Create an Exabeam enterprise application by doing the following:

Click New application, and then click Create your own application.

The Create your own application dialog box appears.

In the What's the name of your app field, type a name for the app (for example, "Exabeam-SAML").

Select Integrate any other application you don't find in the gallery (Non-gallery).

Click Create.

On the Enterprise Application page, locate and click the application that you added in step 2.

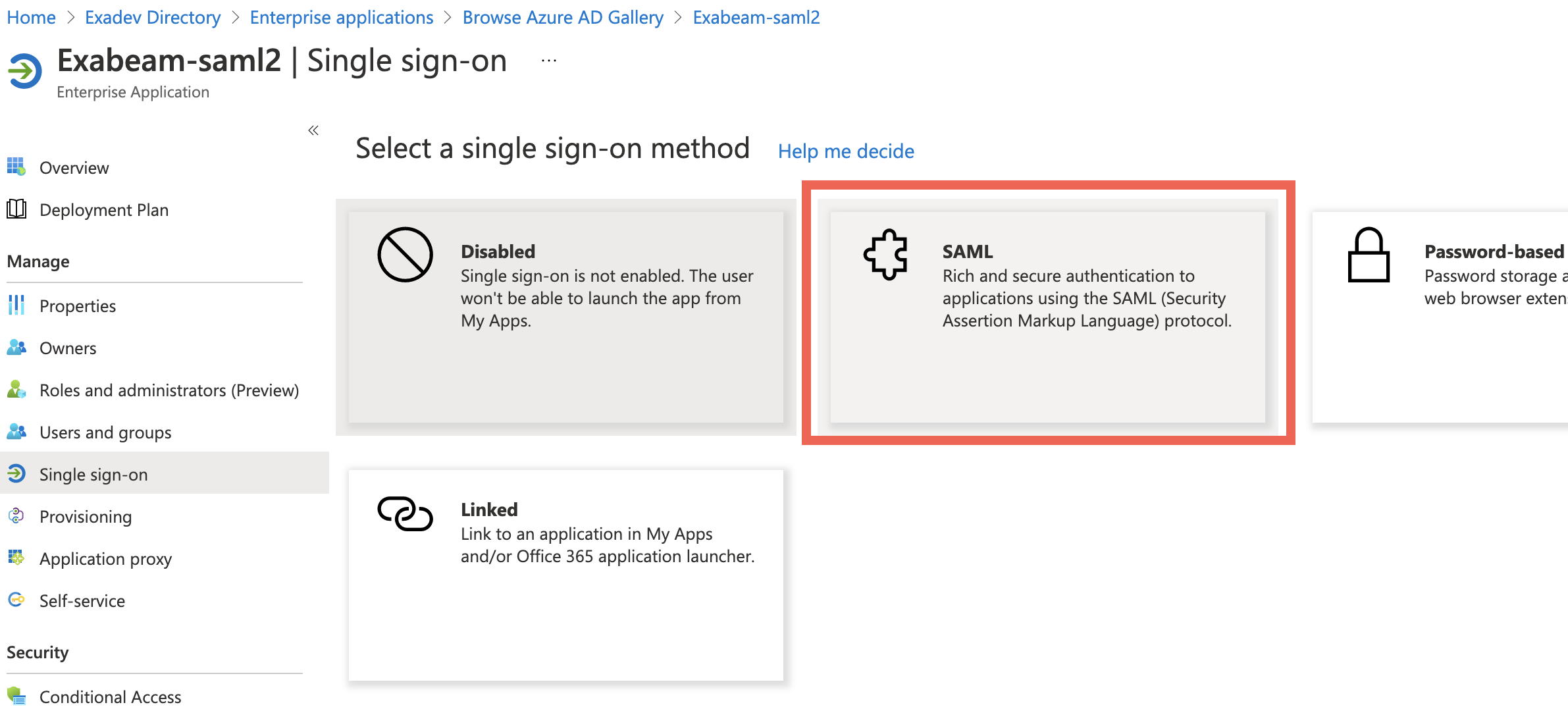

In the Manage section, click Single sign-on.

Click the SAML tile.

In the Basic SAML Configuration box, click Edit, and then do the following:

In the Identifier (Entity ID) field, enter the following:

https://<exabeam_primary_host>:8484/api/auth/saml2/others/login

Note

Make sure that you replace <exabeam_primary_host> with the IP address or domain name of your primary host.

In the Reply URL (Assertion Consumer Service URL) field, enter the following:

https://<exabeam_primary_host>:8484/api/auth/saml2/others/handle-assertion

Note

Make sure that you replace <exabeam_primary_host> with the IP address or domain name of your primary host.

Click Save.

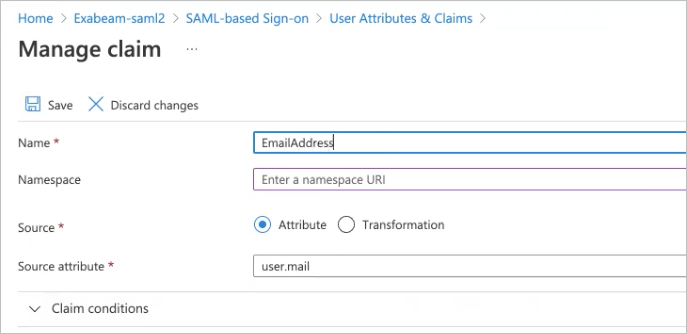

In the User Attributes & Claims box, click Edit, and then map the Azure objects to your Exabeam field attributes.

Click the row for the user.mail claim.

The Manage claim dialog box appears.

In the Name field, type the name of the appropriate Exabeam field attribute.

If needed, clear the value in the Namespace field to leave it empty.

Click Save.

Repeat steps a through d as needed for the following claims:

user.givenname

user.userprincipalname

user.surname

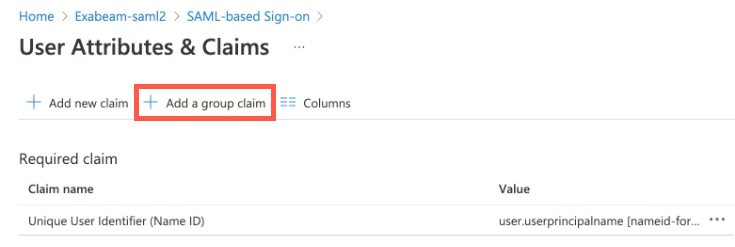

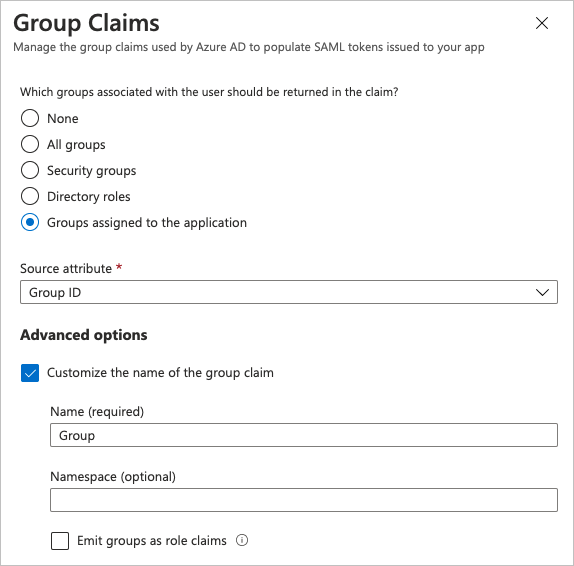

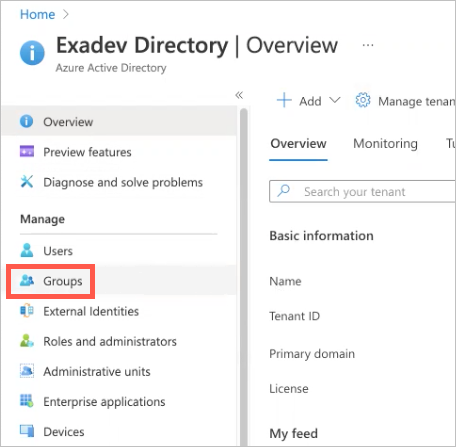

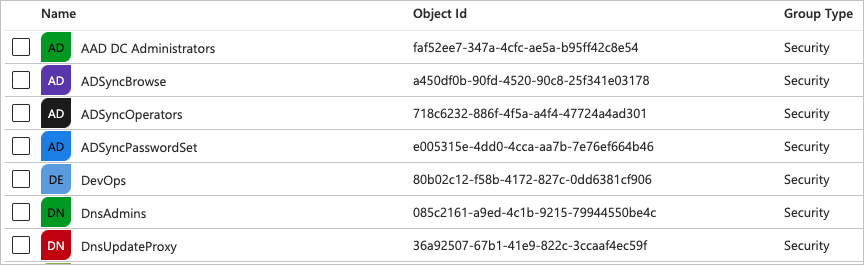

Click Add a group claim.

In the Group Claims dialog box, select Groups assigned to the application.

From the Source attribute drop-down list, select Group ID.

In the Advanced Options section, select the checkbox for Customize the name of the group claim.

In the Name (required) field, type Group.

Click Save.

The Group claim is added to the User Attributes & Claims box.

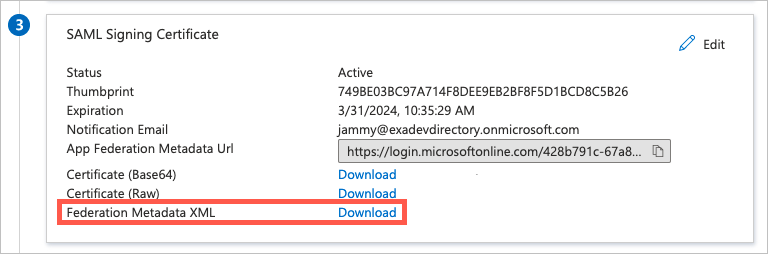

In the SAML Signing Certificate box, download the Federation Metadata XML file to upload to Exabeam.

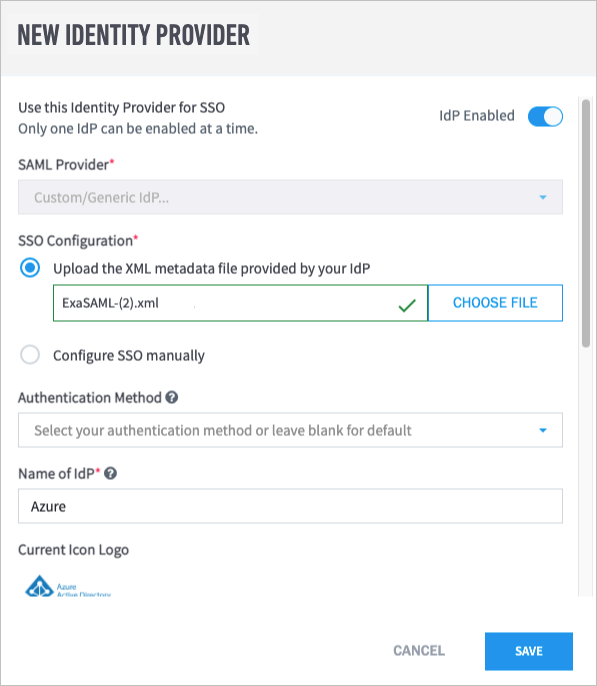

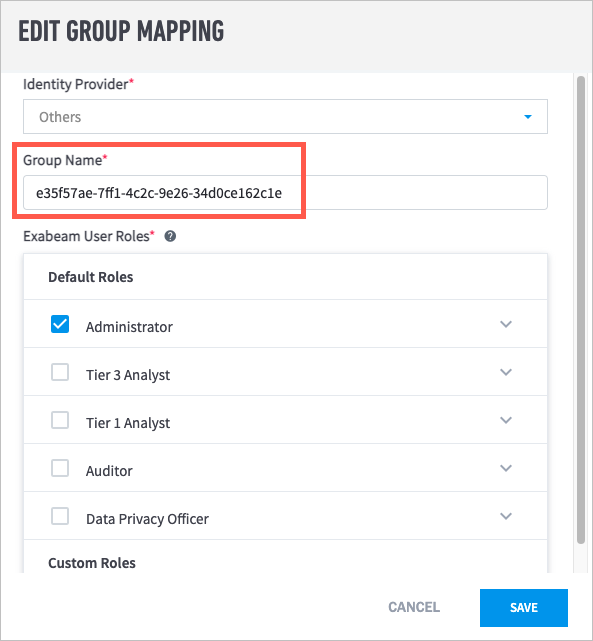

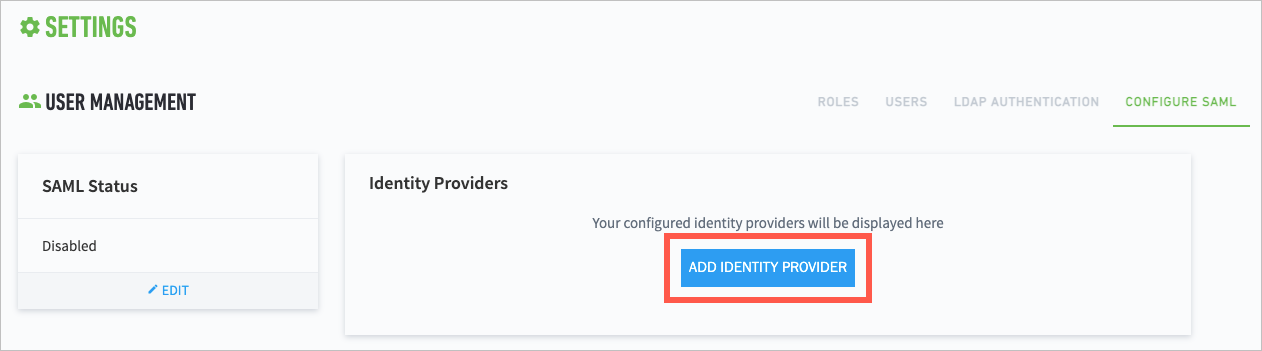

In Exabeam, navigate to Settings > User Management > Configure SAML, and then click Add Identity Provider.

The New Identity Provider dialog box appears.

From the SAML Provider drop-down list, select Custom/Generic IdP.

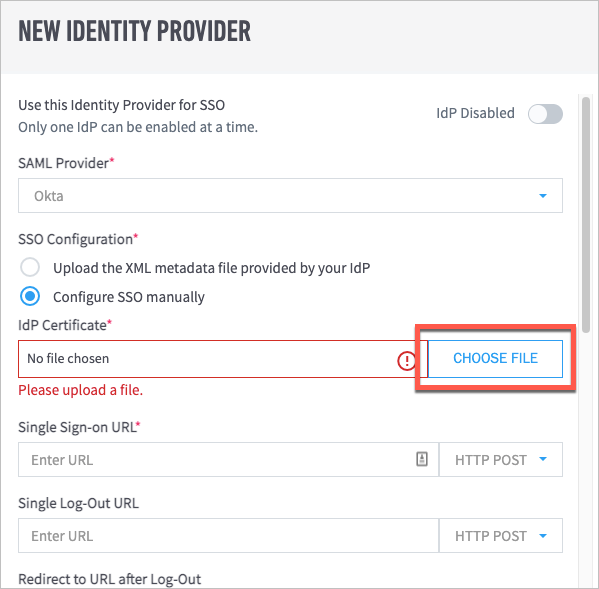

Under SSO Configuration, select Upload the XML metadata file provided by your IdP, and then choose the Federation Metadata XML file that was downloaded in step 8.

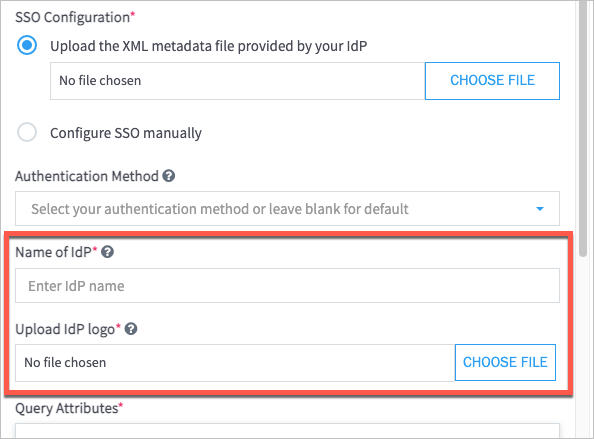

In the Name of IdP field, type a name (for example, "Azure").

In the Upload IdP logo field, click Choose File, and then select a PNG file of the logo that you want to use.

Note

The PNG logo file size cannot exceed 1 MB.

In the Query Attributes section, enter the appropriate IdP attribute values for each field that you defined in step 7.

Important

The IdP attribute values must match the values that you defined in step 7.

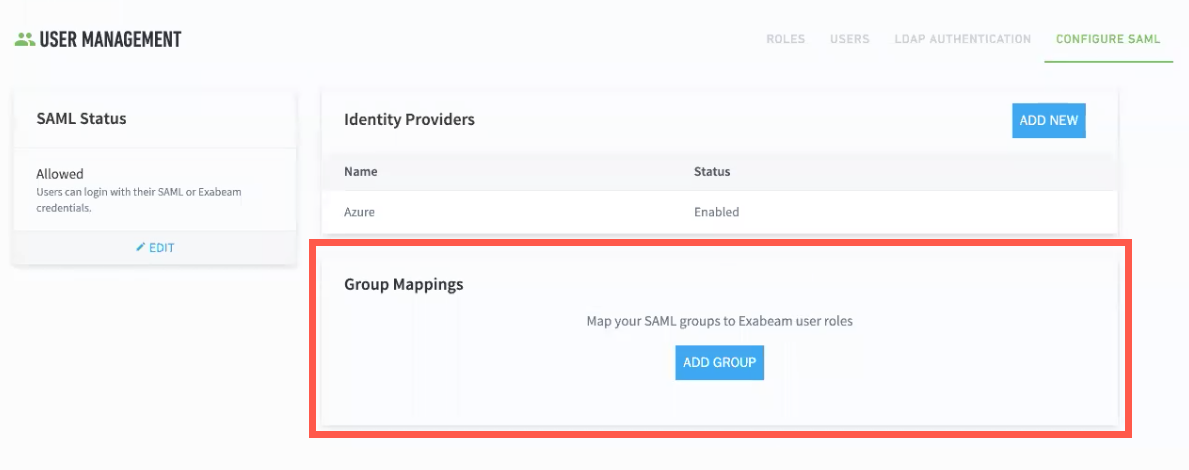

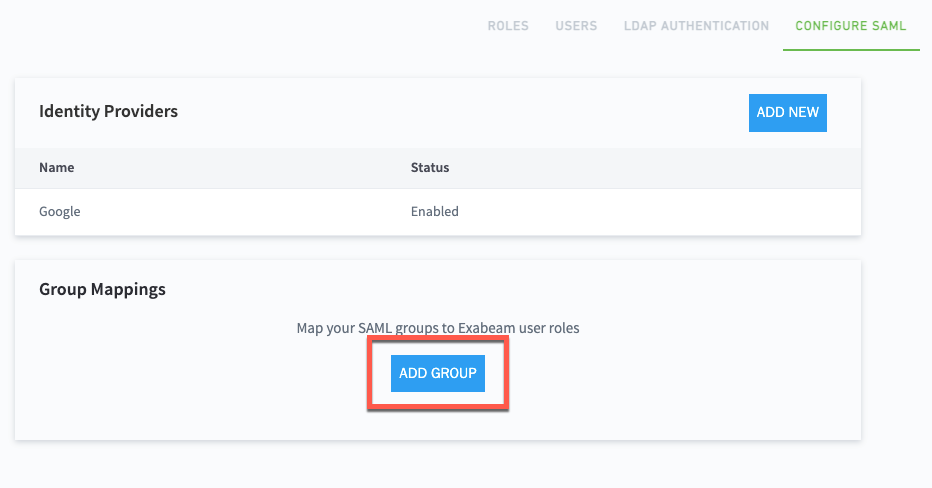

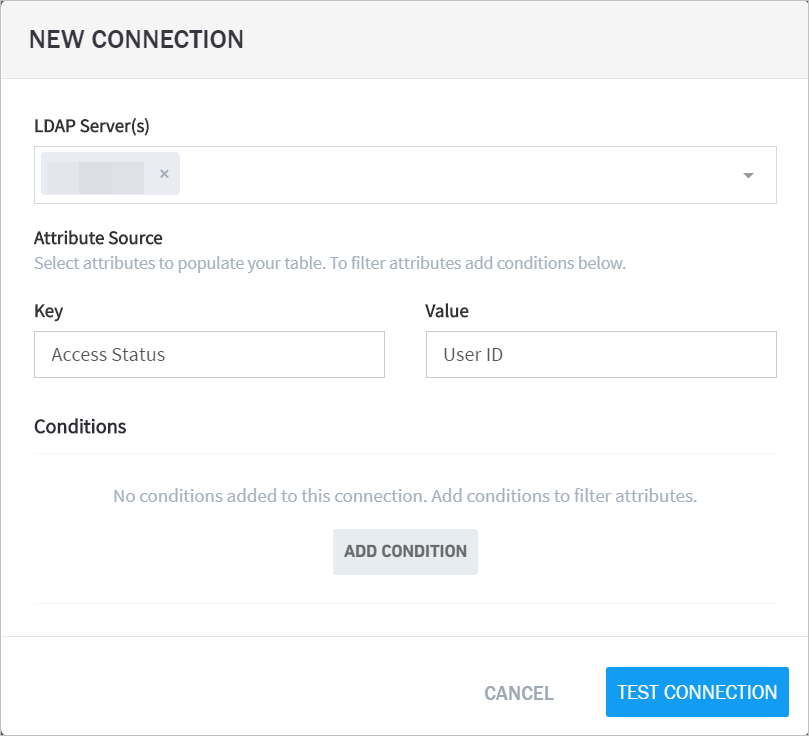

Click Save.
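Before uploading a metadata file, it can be useful to confirm that it contains what a SAML 2.0 IdP metadata document normally carries: an entity ID and at least one single sign-on endpoint. The Python sketch below uses only the standard library and the standard SAML 2.0 metadata namespace. It assumes that the root element is a single EntityDescriptor, and the function name is hypothetical. It does not reproduce any validation that Exabeam itself performs.

```python
import xml.etree.ElementTree as ET

# Standard SAML 2.0 metadata namespace.
MD_NS = {"md": "urn:oasis:names:tc:SAML:2.0:metadata"}


def summarize_idp_metadata(xml_data):
    """Extract the entity ID and SSO endpoints from IdP metadata (str or bytes).

    Assumption: the document root is a single md:EntityDescriptor element.
    """
    root = ET.fromstring(xml_data)
    idp = root.find("md:IDPSSODescriptor", MD_NS)
    if idp is None:
        raise ValueError("no IDPSSODescriptor element found in metadata")
    sso_services = [
        (svc.get("Binding"), svc.get("Location"))
        for svc in idp.findall("md:SingleSignOnService", MD_NS)
    ]
    return {"entity_id": root.get("entityID"), "sso_services": sso_services}
```

When reading a downloaded file, open it in binary mode and pass the bytes, so that any encoding declaration in the XML prolog is honored by the parser.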