Tokenize Non-Standard Log Files

Some products and environments create non-standard logs, such as logs that use uncommon delimiters. In these cases, you need to adjust the parsing to the log's format in order to extract values.

To manually tokenize non-standard logs, create a custom parser. You can create one that works for both Advanced Analytics and Data Lake or one that works just for Data Lake.

The following procedure lists the basic steps for creating a custom parser. If you need more detail about any of the steps, see Create a Custom Parser.

Log on to the New-Scale Security Operations Platform and select the Log Stream tile.

The Log Stream homepage appears. You should be on the Parsers Overview tab.

Click +New Parser.

The New Parser page appears. You will be on the Add Log Sample stage of creating a new parser.

Select sample logs to import:

To select a log file from your file system, select Add a file, then drag and drop a file or click Select a File. You can upload a .gz or .tgz file that is no more than 100 MB.

To copy and paste logs, select Copy and paste raw logs, then paste the content into the text box. You can enter up to 100 lines.
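If you prepare samples with a script, you can check them against the limits above before uploading. The following is a minimal sketch, not part of the product: the function names are hypothetical, and it assumes that 100 MB means 100 × 1024 × 1024 bytes.

```python
import os

# Assumption: the 100 MB limit is interpreted as 100 * 1024 * 1024 bytes.
MAX_UPLOAD_BYTES = 100 * 1024 * 1024
MAX_PASTED_LINES = 100


def upload_problem(path):
    """Return a reason the file may be rejected, or None if it looks acceptable."""
    if not path.endswith((".gz", ".tgz")):
        return "expected a .gz or .tgz file"
    if os.path.getsize(path) > MAX_UPLOAD_BYTES:
        return "file is larger than 100 MB"
    return None


def trim_pasted_sample(raw_text):
    """Keep only the first 100 lines of a sample meant for copy and paste."""
    return "\n".join(raw_text.splitlines()[:MAX_PASTED_LINES])
```

For example, `trim_pasted_sample(open("sample.log").read())` returns text that fits the copy-and-paste limit, where `sample.log` is a placeholder file name.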

Click Upload Log Sample.

Click Find Matching Parsers.

Click +New Parser.

Add parser conditions:

Enter a value in the SELECT CONDITIONS bar, or in the list of raw log lines, highlight a string.

Click Add Condition.

Click Next.

The Parser Info page appears.
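To see how conditions narrow down the sample, it can help to think of each condition as a string that must appear in the raw log line. The following sketch illustrates that idea only. It assumes that a log line matches when it contains every condition string, and the function name and sample lines are hypothetical.

```python
def matches_conditions(raw_log, conditions):
    """Return True if every condition string appears in the raw log line.

    Assumption: conditions are plain substrings and all of them must match.
    """
    return all(condition in raw_log for condition in conditions)


sample_lines = [
    'vendor=Acme;type=login;user="jdoe"',
    'vendor=Acme;type=logout;user="jdoe"',
    'vendor=Other;type=login;user="asmith"',
]
conditions = ["vendor=Acme", "type=login"]

# Only the lines that contain all condition strings remain.
matching = [line for line in sample_lines if matches_conditions(line, conditions)]
```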

Add basic parser information.

Enter the Parser Name.

Under Activity Types, click Select activity types, select all of the activity types (alert or app) that apply to your custom parser, and then click Select activity types.

Under Time Format, select a format that best matches how dates and times are formatted in the sample logs.

Under Vendor, select the vendor that generated the logs you imported.

Under Product, select the product that generated the logs.

Click Next.

The Extract Event Fields page appears.

In this step you will either tokenize key-value pairs based on string selection, or tokenize the entire log.

To tokenize key-value pairs based on string selection:

Select a non-tokenized key-value pair from the sample log lines by highlighting it, and click Tokenize.

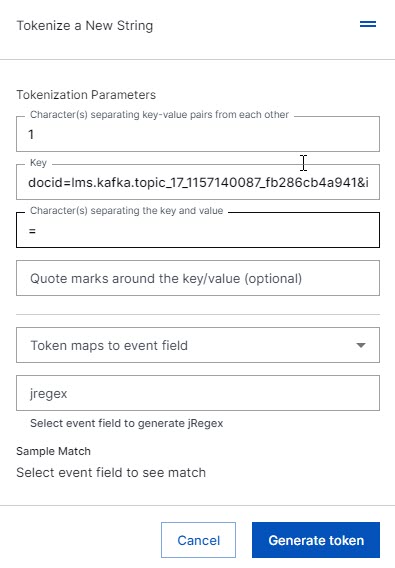

The Tokenize a New String dialog appears.

In the dialog enter:

Delimiter: the character(s) separating key-value pairs from each other.

Key: the value of the key.

Separator: the character(s) separating the key and value.

Quotes: (Optional) the quote marks around the key/value. Use the quotes field to instruct the system to remove any redundant escape characters, such as "" or """".

Token maps to event field: in the drop-down menu, select the field to which you want to map the key.

The regex and value generate automatically, based on your entries.

Click Generate token.

The key-value pairs are now tokenized.

Repeat these steps to tokenize other key-value pairs in the data.
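To get a feel for how a regex can be derived from the dialog entries, consider the following sketch. It is an illustration only and not the regex that the platform generates: the `build_token_regex` function and the sample log line are hypothetical, and the quote handling assumes that the value is simply wrapped in the quote character.

```python
import re


def build_token_regex(key, separator, delimiter, quote=""):
    """Build a regex that captures the value of one key-value pair.

    Hypothetical helper: the regex generated by the product may differ.
    """
    value = r"(?P<value>.*?)"
    if quote:
        # Quoted value: capture everything between the quote marks.
        body = re.escape(quote) + value + re.escape(quote)
    else:
        # Unquoted value: capture up to the next delimiter or end of line.
        body = value + "(?=" + re.escape(delimiter) + "|$)"
    return re.compile(re.escape(key) + re.escape(separator) + body)


line = 'ts=2024-01-01|user="jdoe"|action=login'

user = build_token_regex("user", "=", "|", quote='"').search(line).group("value")
action = build_token_regex("action", "=", "|").search(line).group("value")
```

Here `user` holds `jdoe` and `action` holds `login`. Note that this sketch matches the key anywhere in the line, so a short key can also match inside a longer key name.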

To tokenize the entire log:

Click Manage tokenization.

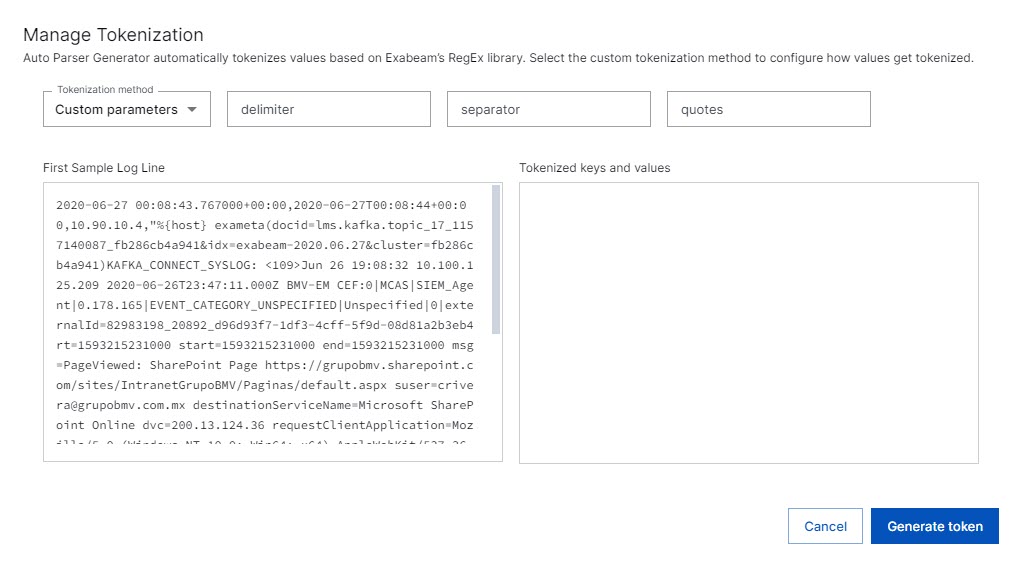

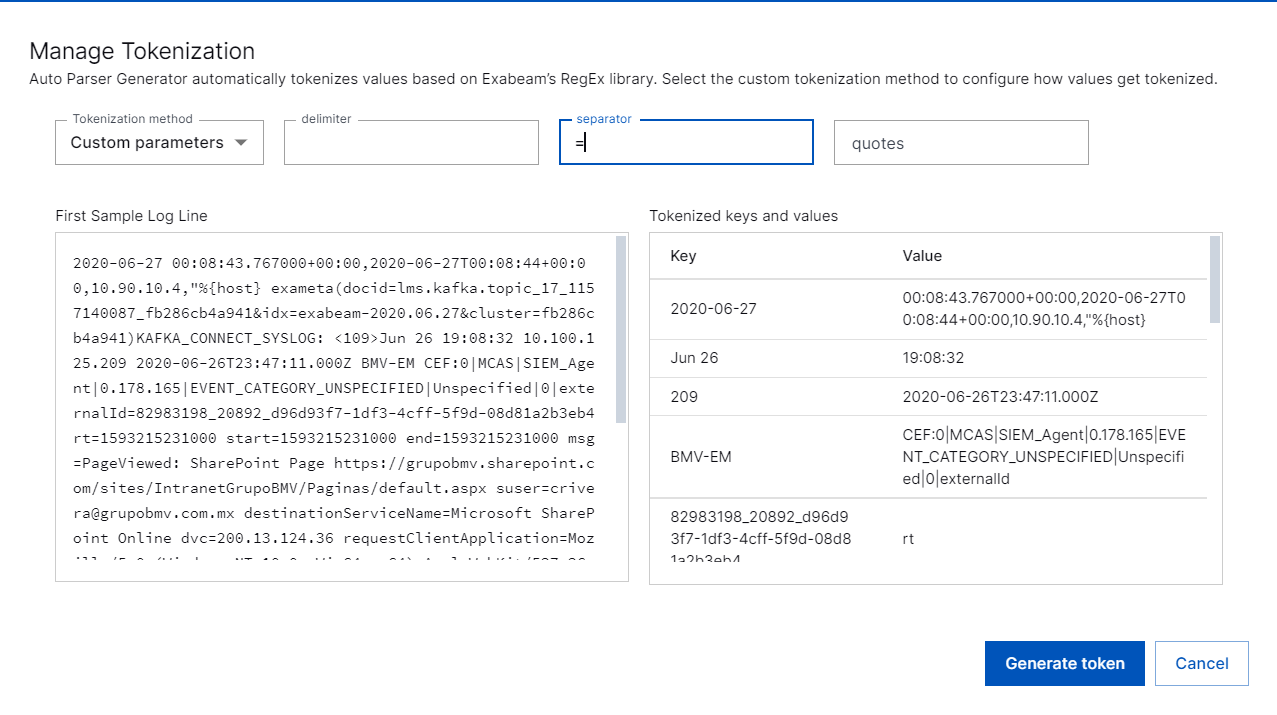

The tokenization dialog appears.

In the dialog:

In the Tokenization method drop-down menu, select Custom parameters or Default regex.

Delimiter: enter the symbol used to separate the key-value pairs in the data.

Separator: enter the symbol used to separate the key and value in the key-value pairs in the data.

Quotes: use the quotes field to instruct the system to remove any redundant escape characters, such as "" or """".

The system will list all of the key-value pairs identified in the log matching those definitions.

Click Generate token.

The key-value pairs are tokenized.

Repeat these steps to tokenize the entire log using a different set of delimiters and separators.

The customized tokenizations that you add are shown above the Sample Log Lines. Click the icons to edit or delete an individual tokenization.

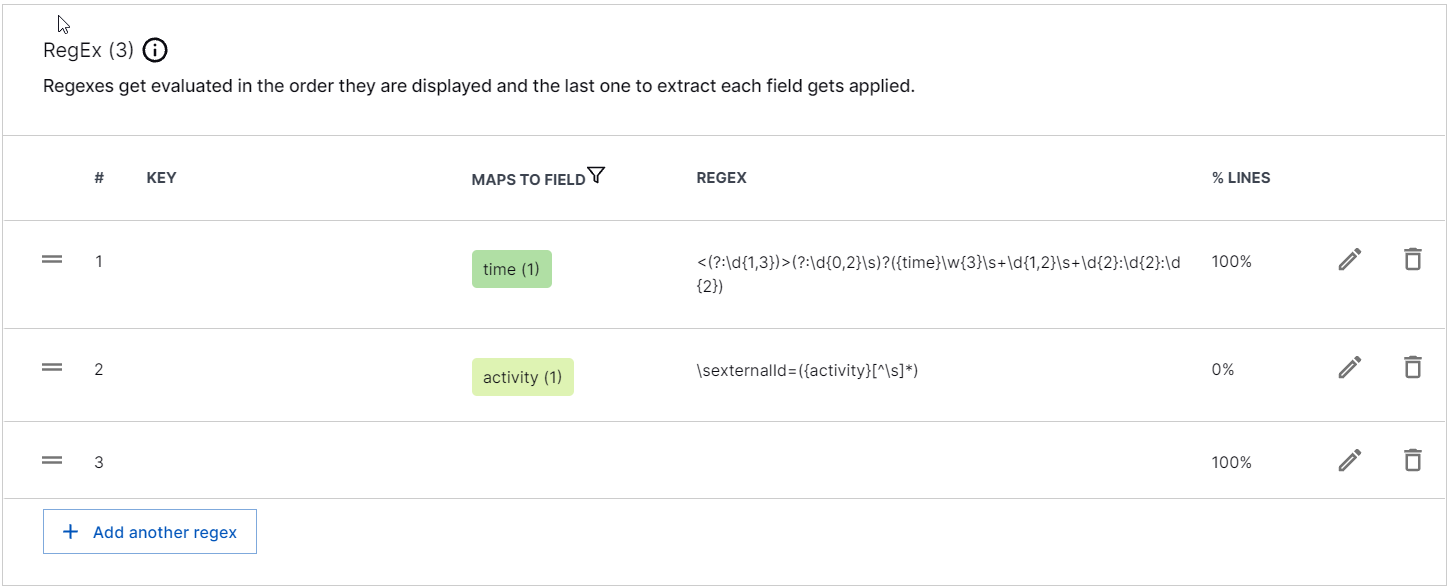
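The effect of the delimiter, separator, and quotes parameters on an entire log line can be sketched as follows. This is a simplified illustration, not the platform's implementation: the `tokenize_log` function and the sample line are hypothetical, quotes are treated as characters wrapped around a key or value, and a delimiter that appears inside a quoted value is not handled.

```python
def strip_quotes(text, quotes):
    """Remove one pair of surrounding quote marks, if present."""
    if quotes and len(text) >= 2 * len(quotes):
        if text.startswith(quotes) and text.endswith(quotes):
            return text[len(quotes):-len(quotes)]
    return text


def tokenize_log(line, delimiter, separator, quotes=""):
    """Split a log line into key-value pairs (simplified sketch)."""
    pairs = {}
    for chunk in line.split(delimiter):
        key, found, value = chunk.partition(separator)
        if not found:
            continue  # no separator in this chunk, so it is not a key-value pair
        key = strip_quotes(key.strip(), quotes)
        value = strip_quotes(value.strip(), quotes)
        pairs[key] = value
    return pairs


tokens = tokenize_log('src=10.0.0.1;user="jdoe";msg="ok"', ";", "=", '"')
```

With these parameters, `tokens` contains the keys `src`, `user`, and `msg`. Running the same line with a different delimiter or separator shows why a log that mixes formats may need more than one tokenization.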

Continue with the creation of your custom parser, using the instructions in Create a Custom Parser, starting with step 4, Extract Event Fields.