
Set up AWS VM for Site Collector Installation

To set up the AWS VM for installing Site Collector:

Complete the environment requirements and prerequisites.

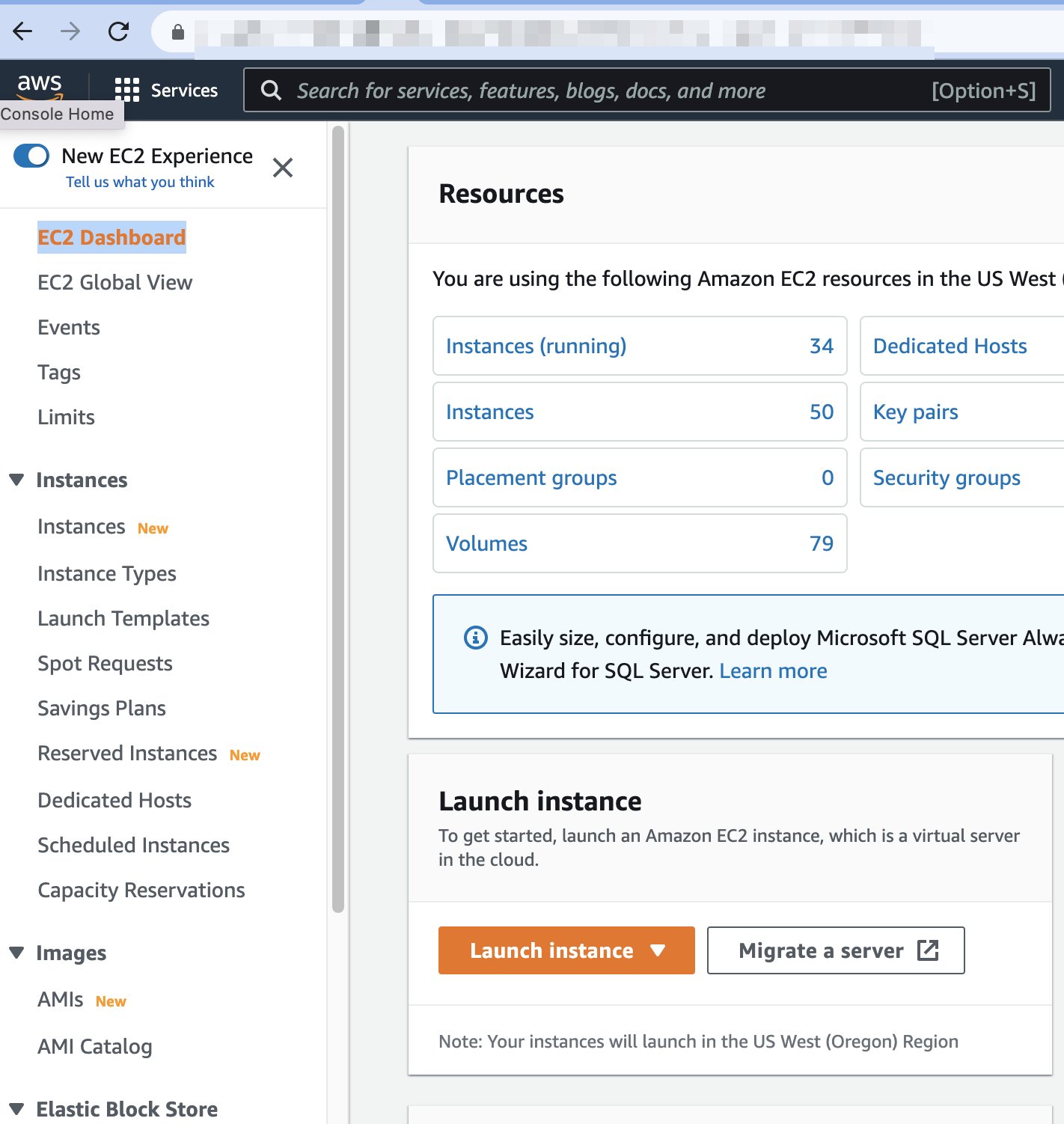

Log in to the Amazon EC2 console with your registered credentials.

Select the AWS project for which you want to create your new VM for Site Collector installation.

Click Services > EC2.

Click EC2 Dashboard.

Click Launch Instance.

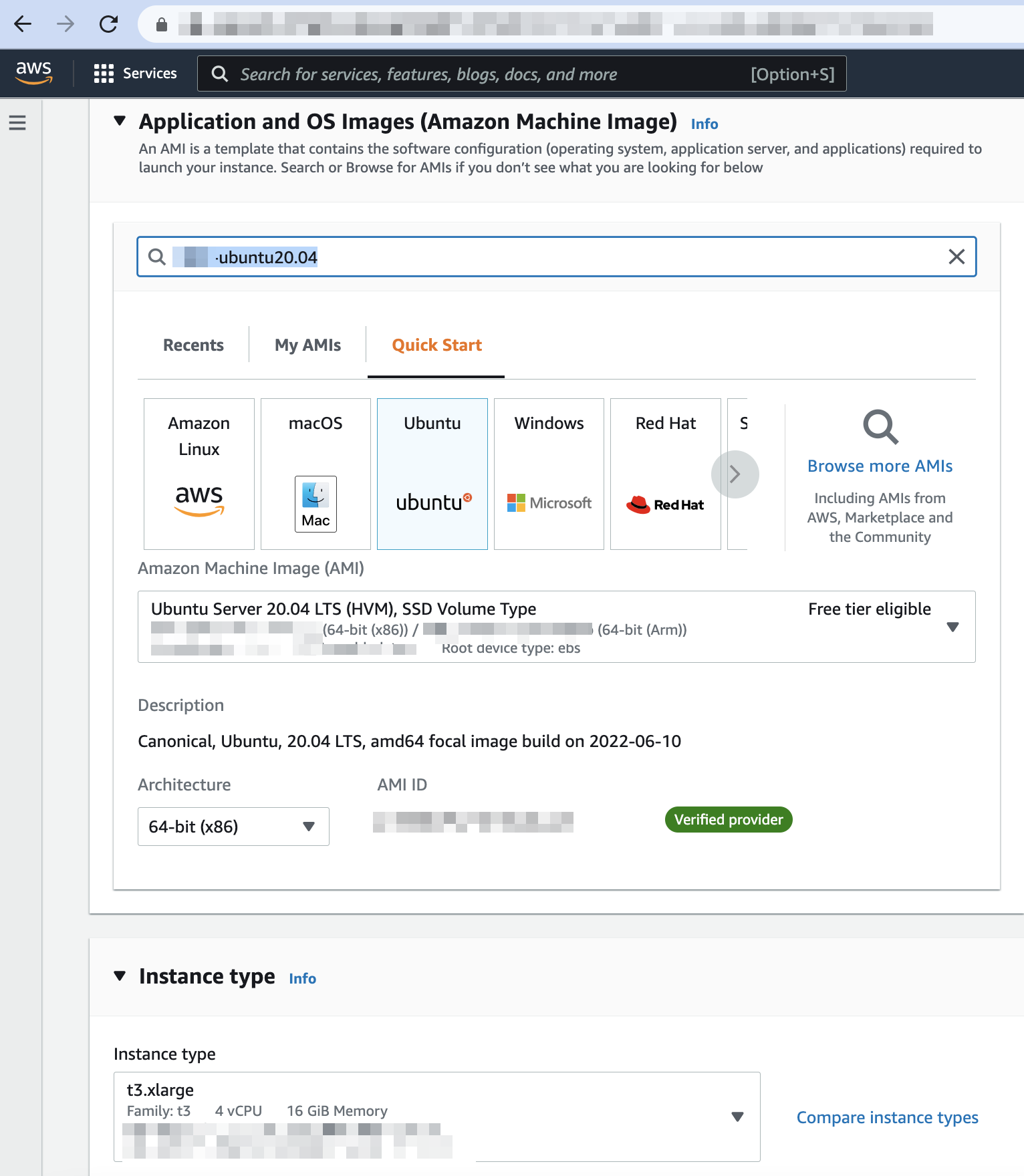

Enter a name for your VM. For example, ngsc-ubuntu20.04.

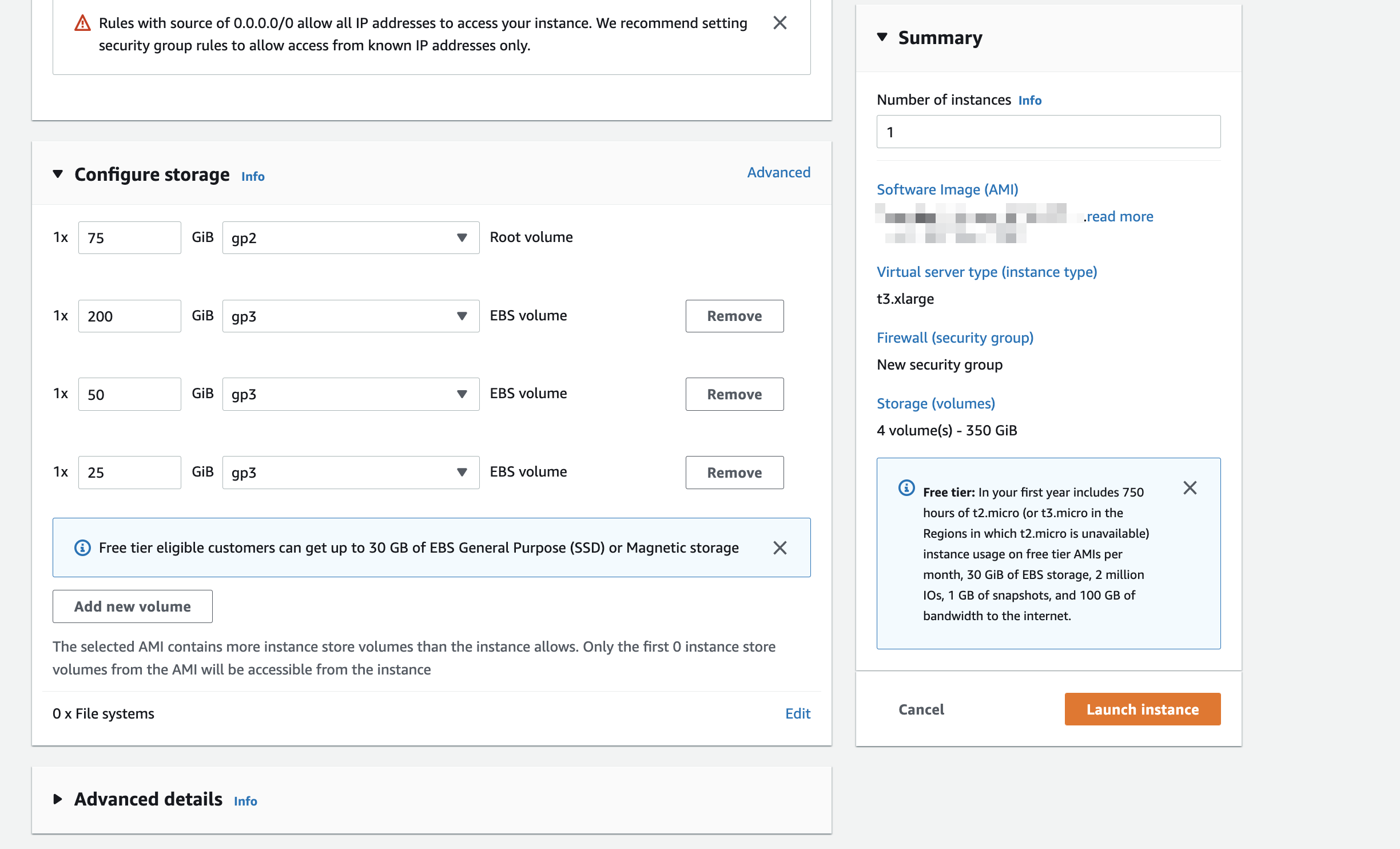

Select Ubuntu 20.04 or later as the operating system and t3.xlarge as the instance type.

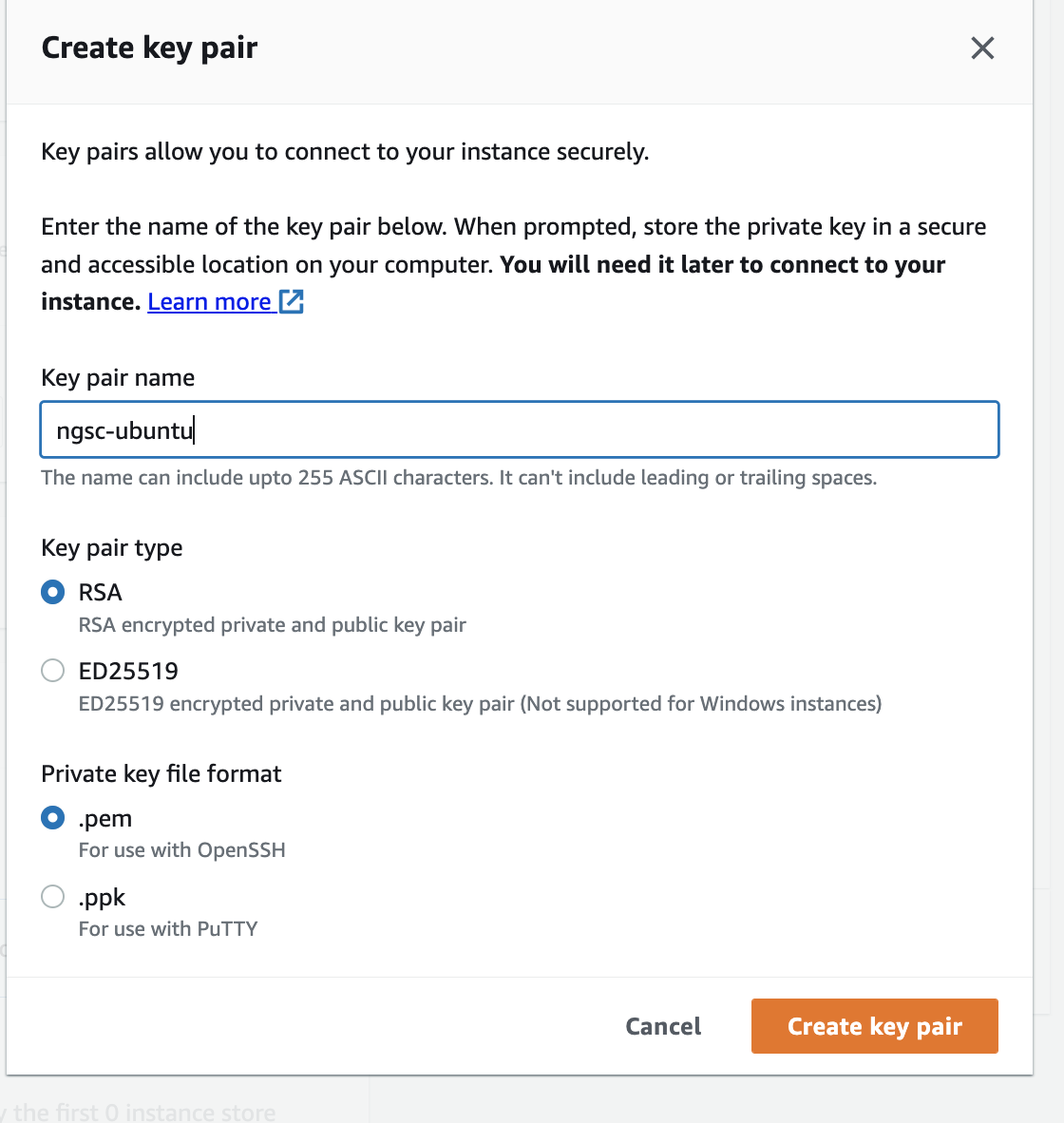

In the Create key pair section, enter a name for a new SSH key pair, and select the type and file format for the key.

Click Create key pair.

Note

To use the key to connect to the VM, save the SSH key file to the .ssh folder. Run the chmod 400 ngsc-ubuntu.pem command so that only the file owner can read the key.
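As a minimal sketch of the permission check described in the note, the following helper reports whether a key file is restricted to its owner. The function name key_mode_ok is hypothetical, and the sketch assumes GNU stat, as found on Linux.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds only if the key file mode is 400 or 600,
# that is, the key is not accessible to the group or to other users.
key_mode_ok() {
  local key_file="$1" mode
  # GNU stat prints the octal permission bits, for example 400.
  mode="$(stat -c '%a' "$key_file")" || return 2
  case "$mode" in
    400|600) return 0 ;;
    *)       return 1 ;;
  esac
}
```

For example, `key_mode_ok ~/.ssh/ngsc-ubuntu.pem || chmod 400 ~/.ssh/ngsc-ubuntu.pem` tightens the permissions only when needed.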

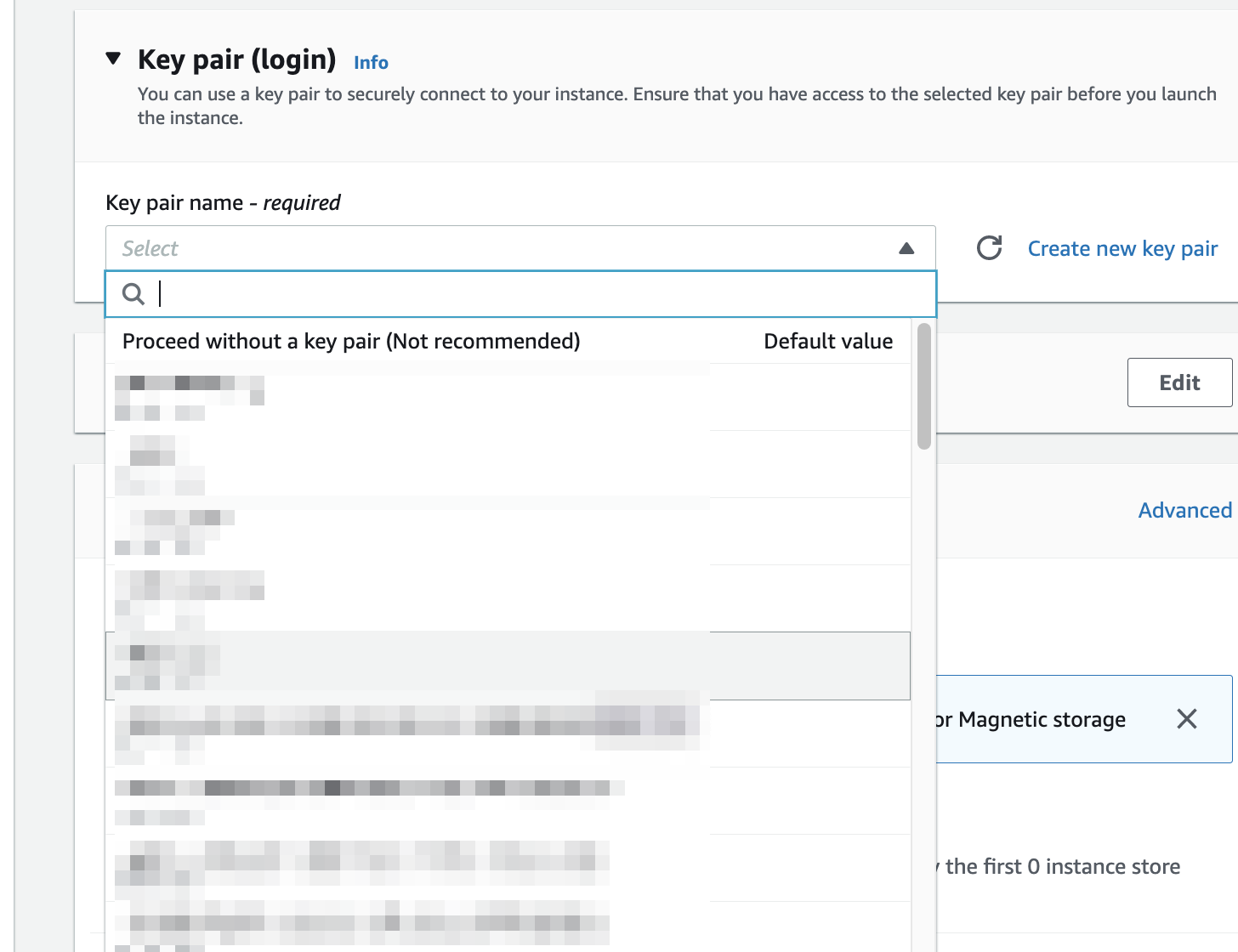

To use the key pair to securely connect to your instance, ensure that you have access to the selected key pair before you launch the instance.

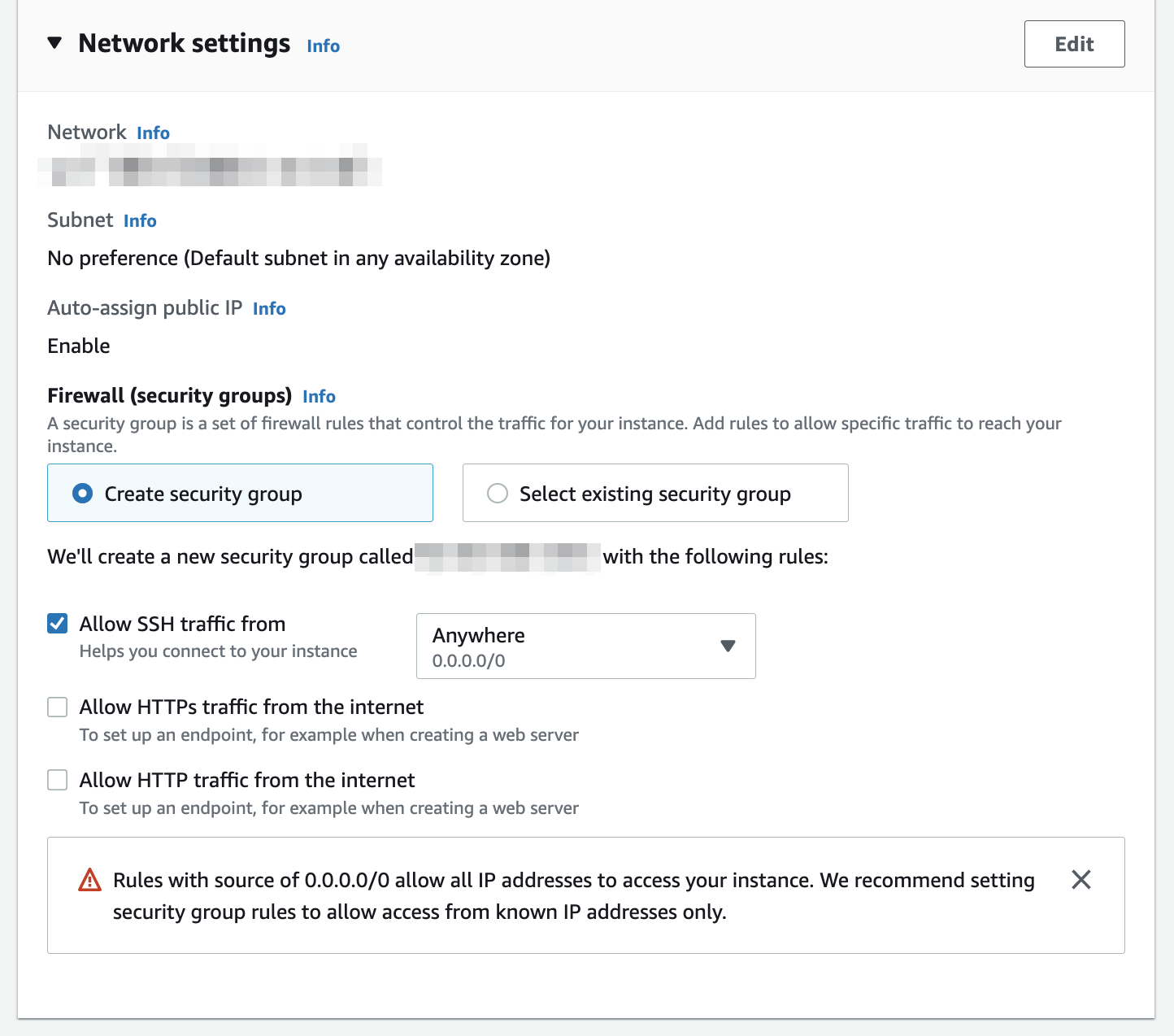

Expand the Network settings section and select VPC network, Subnet, and Create security group under Firewall (security groups). Additionally, select Allow SSH traffic from. Before using the default settings, contact your network security officer.

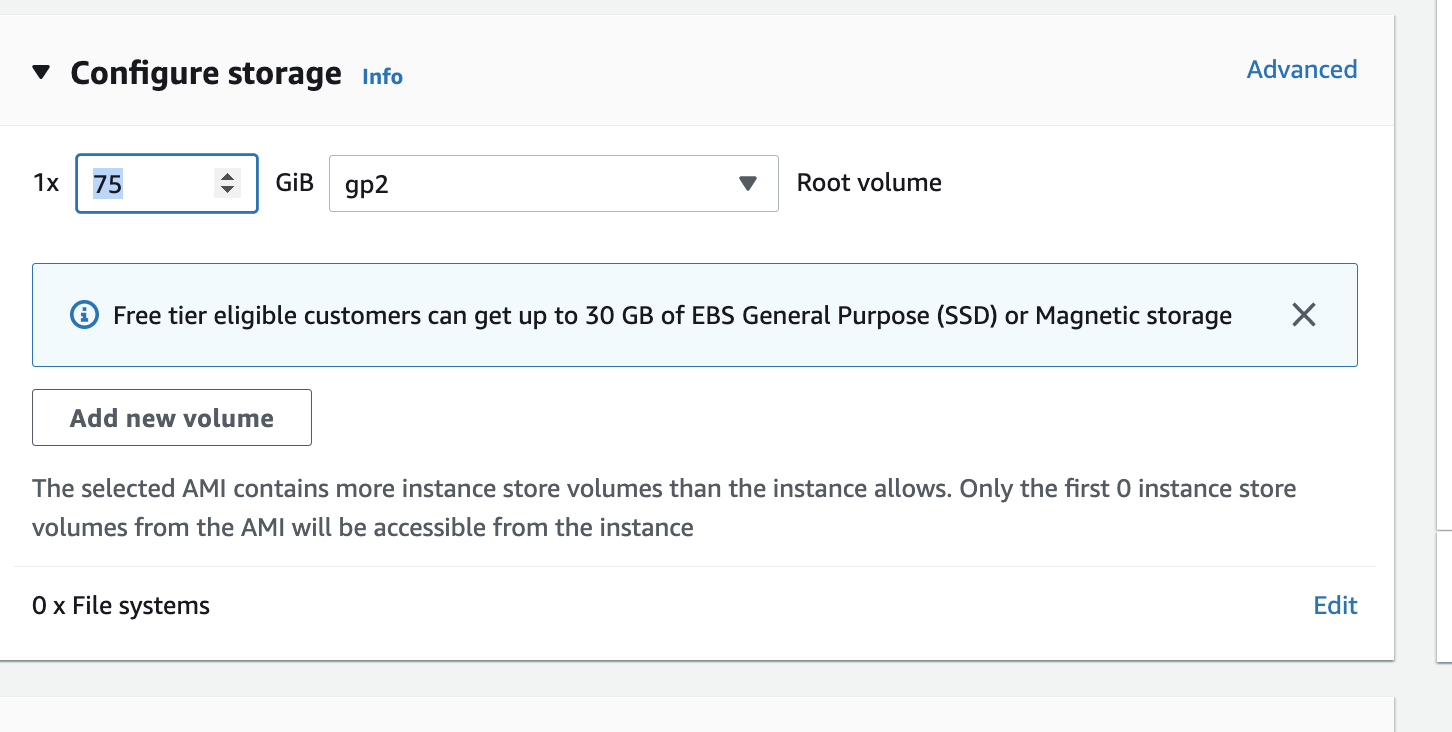

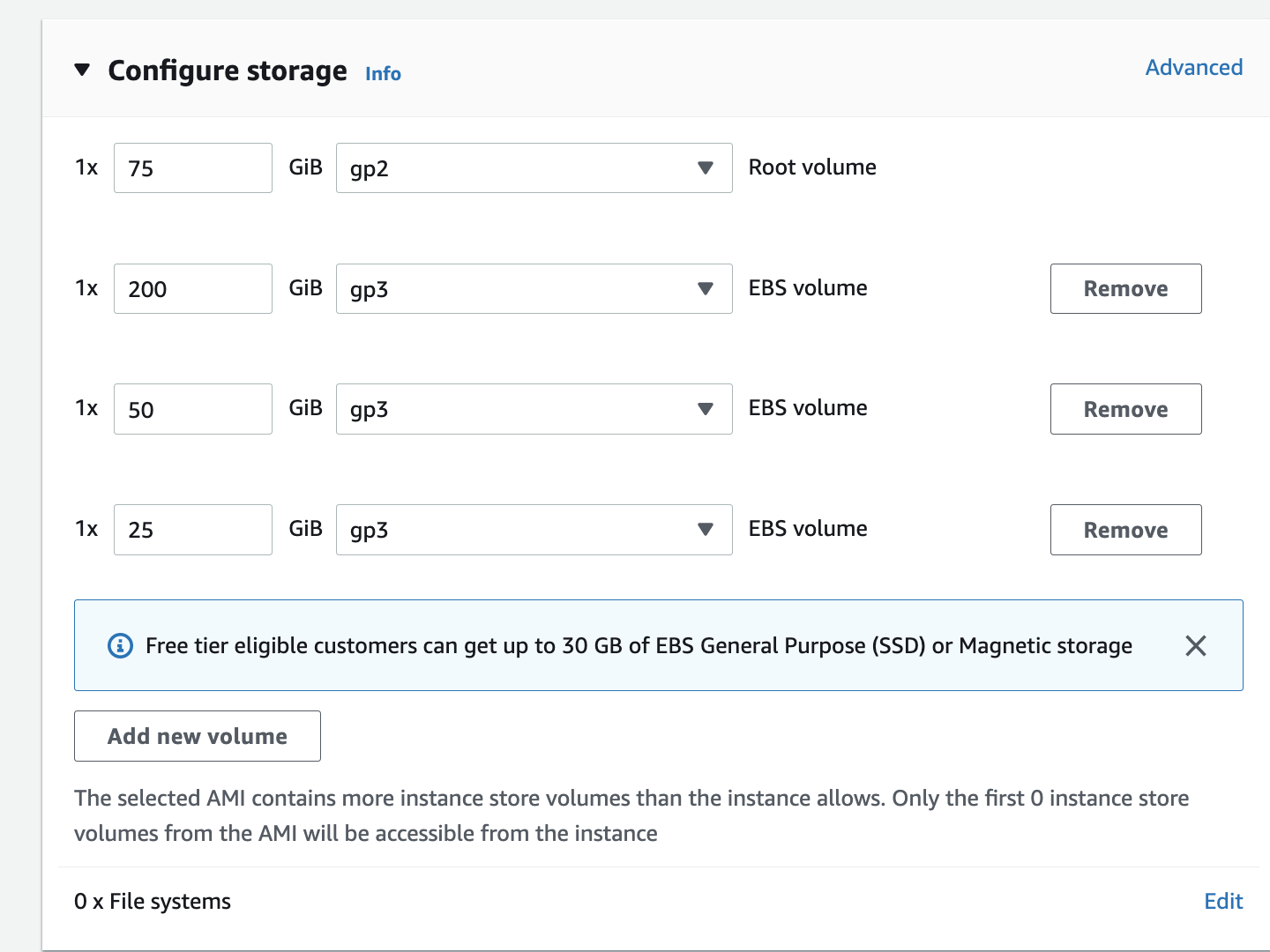

In the Configure storage section, set the size of the root volume to 75 GB.

Add three new disks that are required for Site Collector installation as follows.

/: 75 GB (root folder)

/content_repository: 200 GB

/provenance_repository: 50 GB

/flowfile_repository: 25 GB

Click Launch instance.

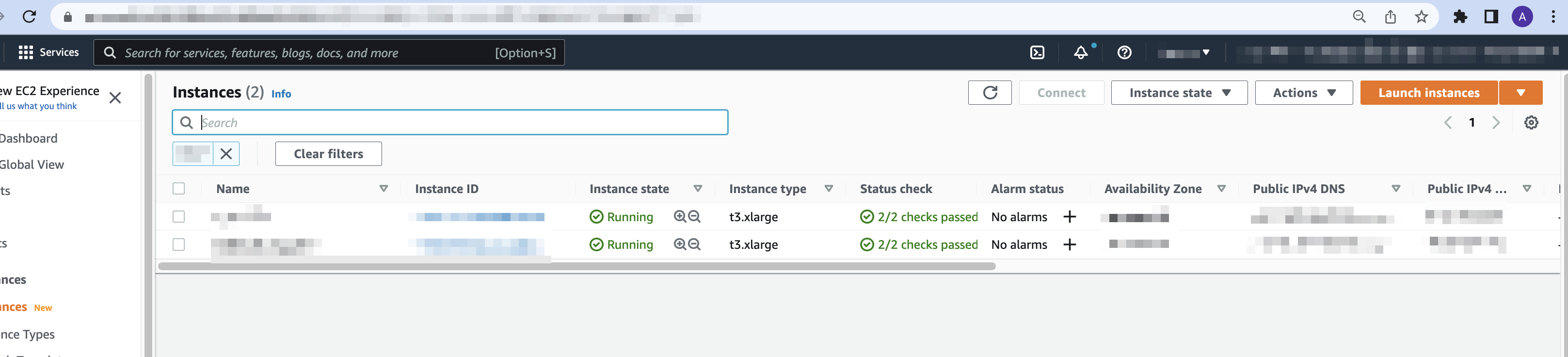

Click View all instances.

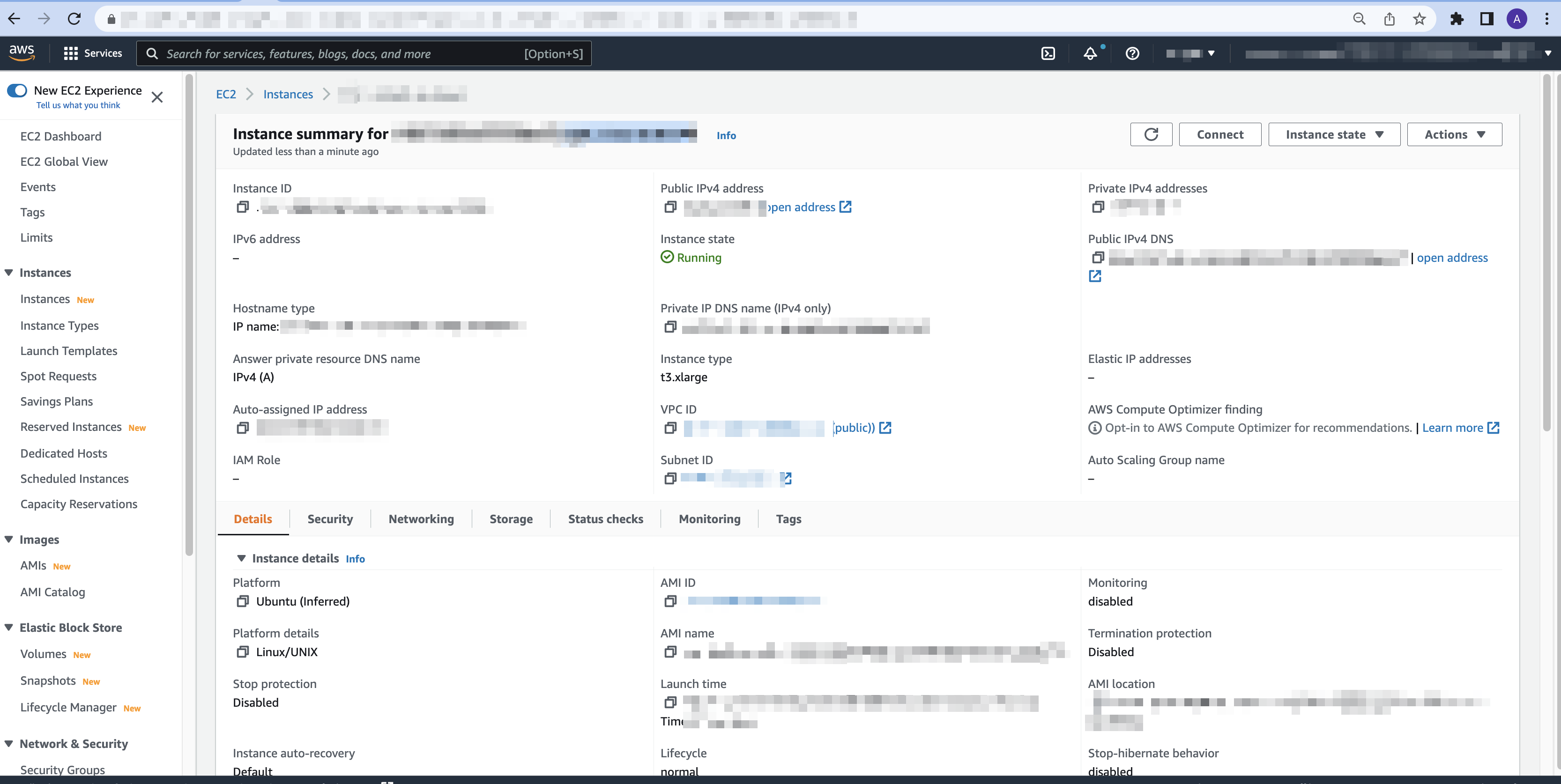

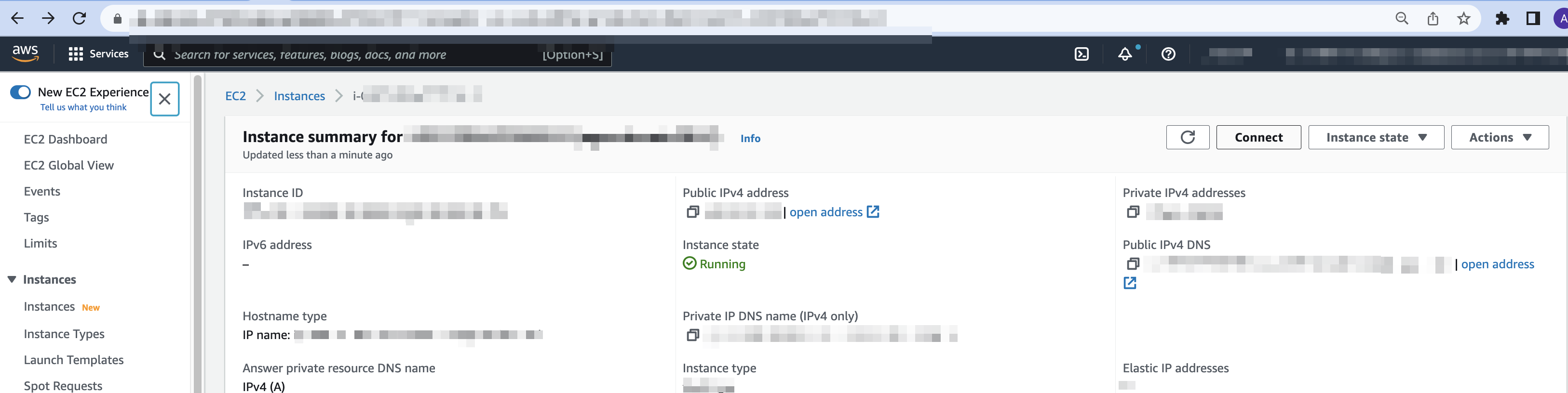

Click the instance you created. In this example the instance is ngsc-ubuntu20.04.

Copy the value for Public IPv4 DNS. In this example, it is ec2-35-87-221-36.us-west-2.compute.amazonaws.com.

In the top right corner click Connect.

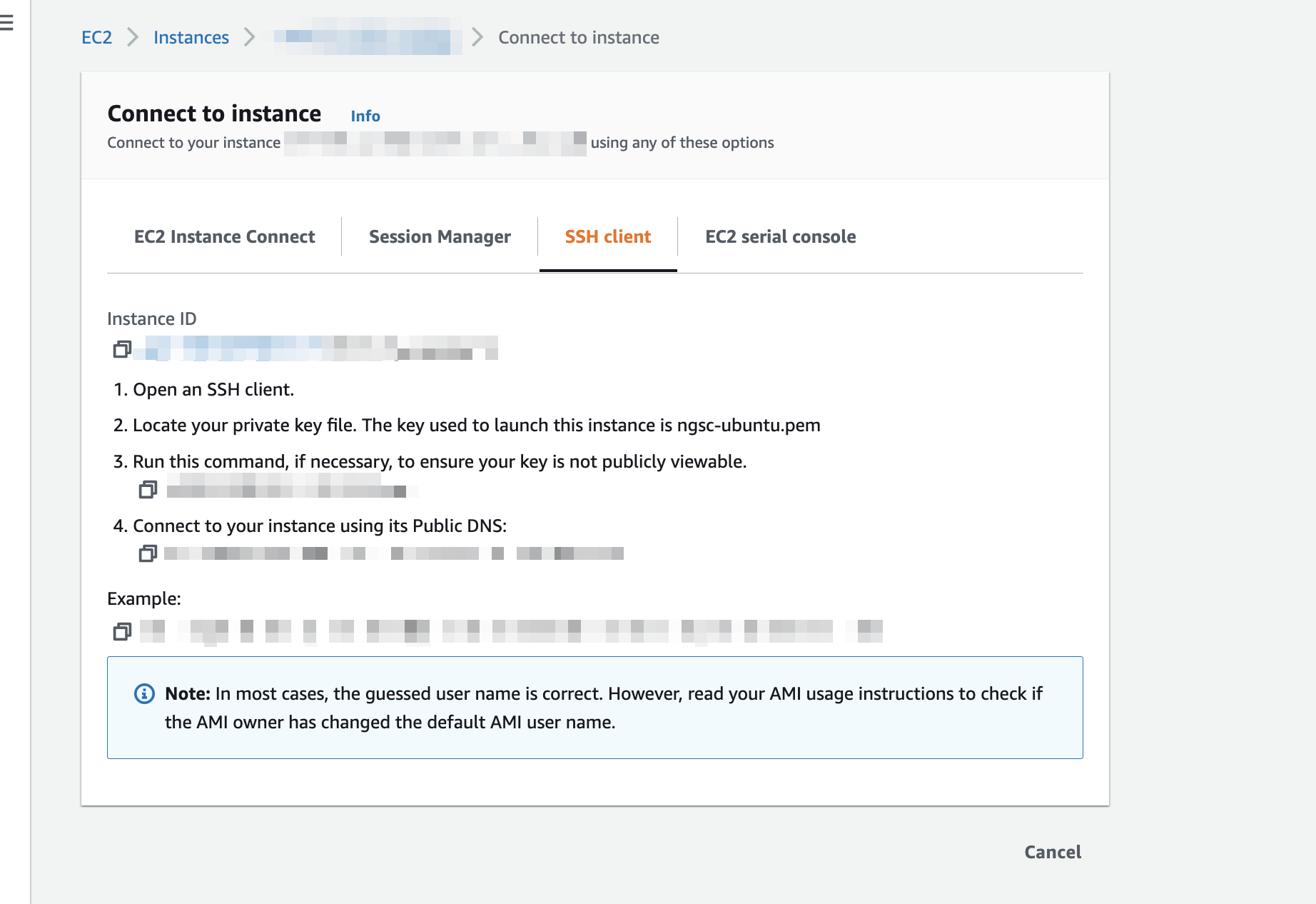

On the Connect to instance page, click the SSH client tab, and copy the SSH connect command.

Use the following command to SSH into the VM.

    ssh -i "ngsc-ubuntu.pem" [email protected]

For CentOS 7, use the following command to SSH into the VM.

    ssh -i "ngsc-ubuntu.pem" [email protected]

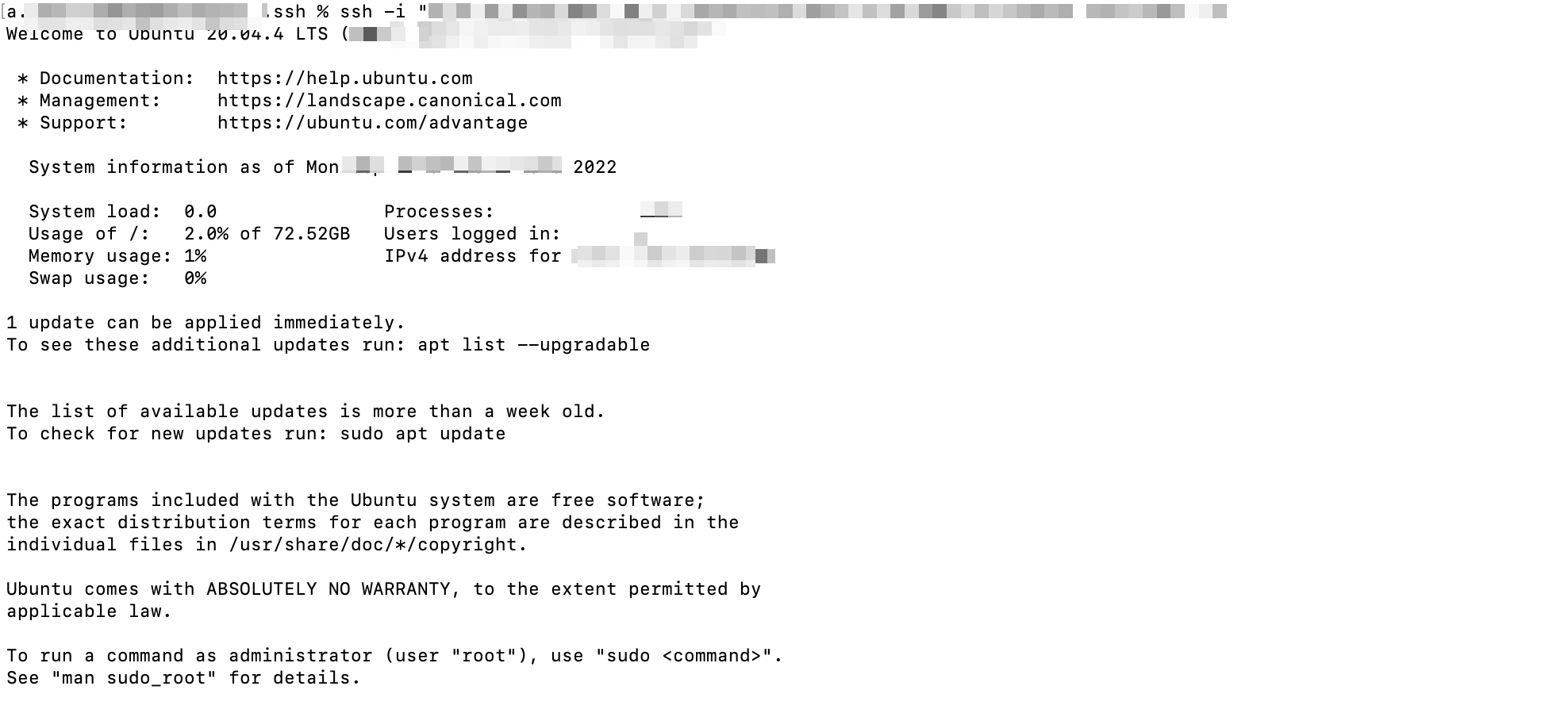

The VM is accessible.

To proceed with preparing the required environment for Site Collector installation, refer to the following steps.

SSH to your VM and use the following commands to install the required packages for RedHat 7.

    # install packages
    sudo yum update -y
    sudo yum install -y jq rsync screen curl openssl gawk ntp ntpstat

    # enable NTP
    sudo systemctl start ntpd
    sudo systemctl enable ntpd

    # install docker
    sudo yum install -y yum-utils
    sudo yum-config-manager \
        --add-repo \
        https://download.docker.com/linux/centos/docker-ce.repo
    sudo yum install -y docker-ce docker-ce-cli containerd.io docker-compose

    # NGSC v1.10+ will also require docker-compose-plugin
    sudo yum install -y docker-compose-plugin
    sudo systemctl start docker
    sudo systemctl enable docker

    # verify that the docker is installed correctly
    sudo docker run hello-world

    # install tmux
    curl --silent https://storage.googleapis.com/ngsc_update/exa-cloud-prod/tmux_install.sh | sudo bash

SSH to your VM and use the following commands to install the required packages for RedHat 8 or 9, or for CentOS.

    # install packages
    sudo dnf install https://dl.fedoraproject.org/pub/epel/epel-release-latest-8.noarch.rpm
    sudo dnf install 'dnf-command(upgrade)'
    sudo yum update -y
    sudo yum install -y jq rsync screen curl openssl gawk
    sudo yum install -y chrony

    # install docker
    sudo yum install -y yum-utils
    sudo yum-config-manager \
        --add-repo \
        https://download.docker.com/linux/centos/docker-ce.repo
    sudo yum install -y docker-ce docker-ce-cli containerd.io

    # NGSC v1.10+ will also require docker-compose-plugin
    sudo yum install -y docker-compose-plugin
    sudo systemctl start docker
    sudo systemctl enable docker

    # verify that the docker is installed correctly
    sudo docker run hello-world

    # install docker-compose
    sudo curl -L "https://github.com/docker/compose/releases/download/1.23.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/bin/docker-compose
    sudo chmod +x /usr/bin/docker-compose

    # verify that the docker-compose is installed correctly
    docker-compose -v

    # install tmux
    curl --silent https://storage.googleapis.com/ngsc_update/exa-cloud-prod/tmux_install.sh | sudo bash

SSH to your VM and use the following commands to install the required packages for Ubuntu. If your system uses chrony instead of ntp, replace ntp with chrony in the package installation command and in the systemctl start and systemctl enable commands.

    # install package
    sudo apt-get update
    sudo apt-get install -y ca-certificates curl gnupg lsb-release jq rsync screen curl openssl gawk ntp ntpstat

    # enable NTP
    sudo systemctl start ntp
    sudo systemctl enable ntp

    # install docker (the key is written to the same path that signed-by references)
    curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg
    sudo add-apt-repository "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"
    sudo apt-get update
    sudo apt-get install -y docker-ce docker-ce-cli containerd.io docker-compose

    # NGSC v1.10+ will also require docker-compose-plugin
    sudo apt-get install -y docker-compose-plugin
    sudo systemctl start docker
    sudo systemctl enable docker

    # verify that the docker is installed correctly
    sudo docker run hello-world

    # install tmux
    curl --silent https://storage.googleapis.com/ngsc_update/exa-cloud-prod/tmux_install.sh | sudo bash
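Because the package commands differ by distribution, it can help to confirm which set applies before running anything. The following is a minimal sketch, assuming the VM provides a standard /etc/os-release file. The function name pkg_family and its return labels are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints "apt", "yum", or "unknown" for an os-release file.
# The body runs in a subshell so that the sourced variables do not leak out.
pkg_family() (
  os_release="${1:-/etc/os-release}"
  # os-release is a list of shell-compatible KEY=value assignments.
  . "$os_release" || exit 2
  case " ${ID:-} ${ID_LIKE:-} " in
    *" ubuntu "*|*" debian "*)           echo apt ;;
    *" rhel "*|*" centos "*|*" fedora "*) echo yum ;;
    *)                                    echo unknown ;;
  esac
)
```

Running `pkg_family` with no argument reads /etc/os-release on the current VM. A result of apt points to the Ubuntu commands, and yum points to the RedHat or CentOS commands.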

To check the disk names, run the following command.

    lsblk
    NAME         MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    loop0          7:0    0 25.1M  1 loop /snap/amazon-ssm-agent/5656
    loop1          7:1    0 61.9M  1 loop /snap/core20/1518
    loop2          7:2    0 67.8M  1 loop /snap/lxd/22753
    loop3          7:3    0 55.5M  1 loop /snap/core18/2409
    loop4          7:4    0   47M  1 loop /snap/snapd/16010
    nvme0n1      259:0    0   75G  0 disk
    ├─nvme0n1p1  259:4    0 74.9G  0 part /
    ├─nvme0n1p14 259:5    0    4M  0 part
    └─nvme0n1p15 259:6    0  106M  0 part /boot/efi
    nvme1n1      259:1    0   25G  0 disk
    nvme2n1      259:2    0  200G  0 disk
    nvme3n1      259:3    0   50G  0 disk
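Device names such as nvme1n1 can differ between instances, so the disk for each repository is best identified by its size. The following is a minimal sketch that reads two-column NAME and SIZE output, such as that produced by lsblk -dn -o NAME,SIZE, and prints the device path of the first disk with the requested size. The function name disk_for_size is hypothetical, and the sketch assumes that each repository disk has a unique size, as in this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads "NAME SIZE" lines on stdin and prints the
# /dev path of the first device whose SIZE column equals the wanted size.
disk_for_size() {
  local wanted="$1"
  awk -v wanted="$wanted" '$2 == wanted { print "/dev/" $1; exit }'
}
```

For example, `lsblk -dn -o NAME,SIZE | disk_for_size 200G` prints the disk intended for /content_repository. With the sizes used in this example, 50G and 25G identify the disks for /provenance_repository and /flowfile_repository.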

To create partitions, use the following commands.

    sudo parted -s /dev/nvme2n1 mktable gpt
    sudo parted -s /dev/nvme3n1 mktable gpt
    sudo parted -s /dev/nvme1n1 mktable gpt
    sudo parted -s /dev/nvme2n1 mkpart ext4 1MiB 100%
    sudo parted -s /dev/nvme3n1 mkpart ext4 1MiB 100%
    sudo parted -s /dev/nvme1n1 mkpart ext4 1MiB 100%

To create a file system, run the following commands.

    sudo mkfs.ext4 /dev/nvme1n1
    sudo mkfs.ext4 /dev/nvme2n1
    sudo mkfs.ext4 /dev/nvme3n1

Create mount directories for NiFi and mount appropriate partitions.

Mounts according to disk sizing are as follows.

/content_repository: 200 GB

/provenance_repository: 50 GB

/flowfile_repository: 25 GB

Use the following commands to create the mount directories and mount the disks. Ensure that the mounted disks match the required capacities. The sudo mkdir command creates the directory, and the sudo mount command connects the directory to a physical disk.

    sudo mkdir /content_repository
    sudo mkdir /provenance_repository
    sudo mkdir /flowfile_repository

    sudo mount /dev/nvme2n1 /content_repository
    sudo mount /dev/nvme3n1 /provenance_repository
    sudo mount /dev/nvme1n1 /flowfile_repository

The following is an example of the df output.

    df -h
    Filesystem       Size  Used Avail Use% Mounted on
    /dev/root         73G  2.2G   71G   3% /
    devtmpfs         7.8G     0  7.8G   0% /dev
    tmpfs            7.8G     0  7.8G   0% /dev/shm
    tmpfs            1.6G  888K  1.6G   1% /run
    tmpfs            5.0M     0  5.0M   0% /run/lock
    tmpfs            7.8G     0  7.8G   0% /sys/fs/cgroup
    /dev/loop0        26M   26M     0 100% /snap/amazon-ssm-agent/5656
    /dev/loop1        62M   62M     0 100% /snap/core20/1518
    /dev/nvme0n1p15  105M  5.2M  100M   5% /boot/efi
    /dev/loop2        68M   68M     0 100% /snap/lxd/22753
    /dev/loop3        56M   56M     0 100% /snap/core18/2409
    /dev/loop4        47M   47M     0 100% /snap/snapd/16010
    tmpfs            1.6G     0  1.6G   0% /run/user/1000
    /dev/nvme2n1     196G   61M  186G   1% /content_repository
    /dev/nvme3n1      49G   53M   47G   1% /provenance_repository
    /dev/nvme1n1      25G   45M   24G   1% /flowfile_repository
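To confirm that each repository is mounted with roughly the expected capacity, the df output can be checked mechanically. The following is a minimal sketch that reads two-column lines of mount point and size in GiB, such as the output of GNU df -BG --output=target,size. The function name mount_at_least is hypothetical, and any minimum passed to it is the caller's own threshold, not a value stated by this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads "TARGET SIZE" lines on stdin, where SIZE looks
# like 196G, and succeeds if TARGET is present with at least MIN GiB.
mount_at_least() {
  local target="$1" min_gib="$2"
  awk -v target="$target" -v min="$min_gib" '
    $1 == target {
      found = 1
      size = $2
      sub(/G$/, "", size)              # strip the unit suffix
      if (size + 0 >= min + 0) ok = 1  # numeric comparison
    }
    END { if (found && ok) exit 0; exit 1 }
  '
}
```

For example, `df -BG --output=target,size | mount_at_least /content_repository 190` succeeds for the output shown above, where 190 is an illustrative threshold below the nominal 200 GB.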

Note

Ensure that the size for

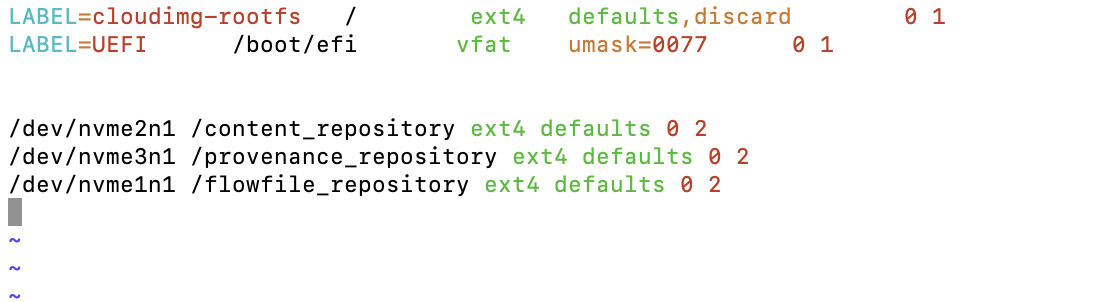

nvme2n1is less than 200G, because ext4 reserves some space for its requirements.Set up auto mount for your drivers. Edit your /etc/fstab file and add three drivers which are linked to repository folders. For example:

The /content_repository folder is on the /dev/nvme2n1 disk. In /etc/fstab, set:

    /dev/nvme2n1 /content_repository ext4 defaults 0 2

The /provenance_repository folder is on the /dev/nvme3n1 disk. In /etc/fstab, set:

    /dev/nvme3n1 /provenance_repository ext4 defaults 0 2

The /flowfile_repository folder is on the /dev/nvme1n1 disk. In /etc/fstab, set:

    /dev/nvme1n1 /flowfile_repository ext4 defaults 0 2
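The three entries follow one pattern, so they can be generated rather than typed. The following is a minimal sketch that formats an entry with the same fields as above and appends it to a file only if the mount point is not already listed. The function names fstab_entry and add_fstab_entry are hypothetical, and it is safer to try the sketch on a copy of /etc/fstab first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints one fstab line in the form used in this guide.
fstab_entry() {
  local device="$1" mount_point="$2"
  printf '%s %s ext4 defaults 0 2\n' "$device" "$mount_point"
}

# Hypothetical helper: appends the entry unless a non-comment line in the
# file already uses the same mount point, so repeated runs add no duplicates.
add_fstab_entry() {
  local fstab="$1" device="$2" mount_point="$3"
  if awk -v mp="$mount_point" \
      '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' "$fstab"; then
    return 0
  fi
  fstab_entry "$device" "$mount_point" >> "$fstab"
}
```

For example, `add_fstab_entry ./fstab.copy /dev/nvme2n1 /content_repository` adds the first entry to a working copy, which can be reviewed before it replaces /etc/fstab.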

The following screenshot shows what the /etc/fstab file looks like.

Restart the server.

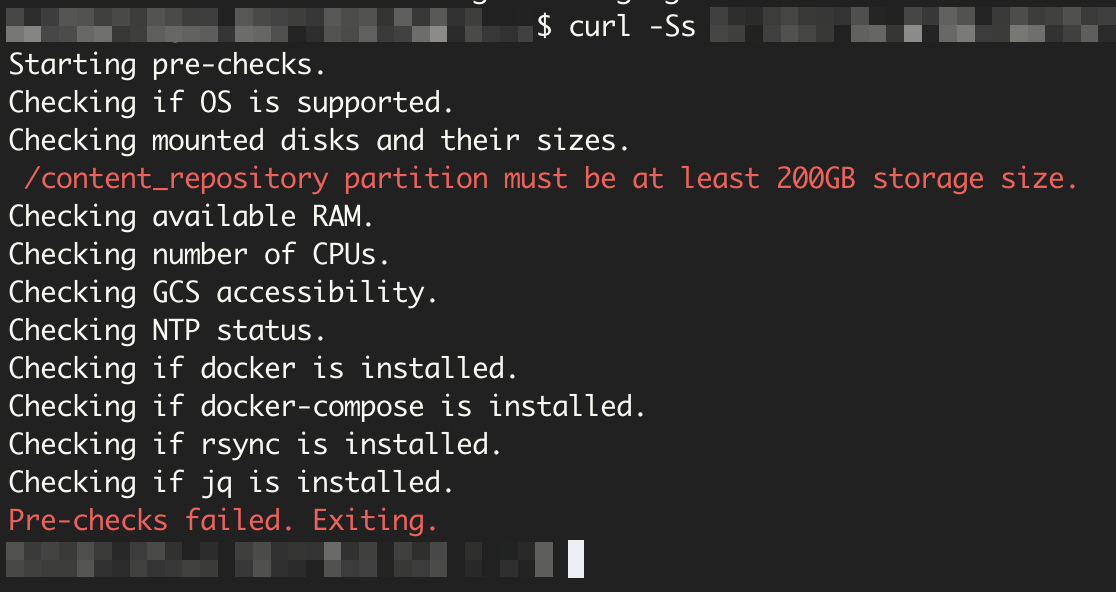

After restarting the server, use the Site Collector pre-check script to validate the system. The following command downloads the script.

    curl -o ngsccli https://storage.googleapis.com/ngsc_update/exa-cloud-prod/exa-ngsc/ngsccli

The following message indicates that the system is not ready and pre-checks failed.

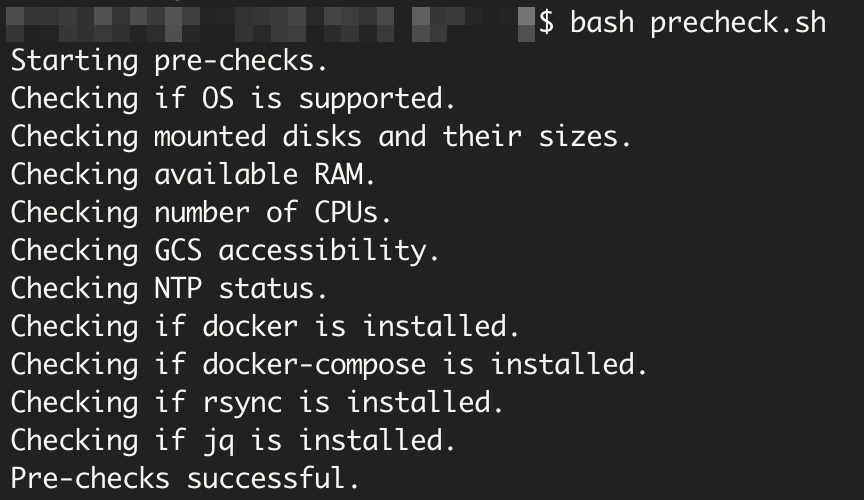

The following result indicates that the pre-checks and configuration are successful and the VM is ready for Site Collector installation.

Proceed to install Site Collector.

Manage Ports with Firewall on Ubuntu

If you use Ubuntu, use Uncomplicated Firewall (ufw), which is a front end for iptables. To enable the ufw firewall, allow SSH access, enable logging, and check the status of the firewall, use the following commands.

Before enabling the firewall for Ubuntu, allow SSH so that you do not lose access to the VM.

    sudo ufw allow ssh
    sudo ufw enable
    sudo ufw reload
    sudo ufw status

To ensure that the destination port is enabled for TCP traffic, use the following commands:

    sudo ufw allow exposed_port/tcp
    sudo ufw allow effective_port/tcp

To edit the UFW configuration, use the following command:

    sudo vi /etc/ufw/before.rules

Before the *filter section, insert the following lines. Replace exposed_port with the port number on which you want to accept requests from outside, and replace effective_port with the port number on which the service is listening.

    *nat
    :PREROUTING ACCEPT [0:0]
    -A PREROUTING -p tcp --dport exposed_port -j REDIRECT --to-port effective_port
    COMMIT
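The redirect block above can be generated with the placeholders already filled in, which avoids editing mistakes. The following is a minimal sketch. The function names valid_port and ufw_nat_block are hypothetical, and the output still has to be pasted into /etc/ufw/before.rules by hand.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if the argument is an integer from 1 to 65535.
valid_port() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;
  esac
  [ "$1" -ge 1 ] && [ "$1" -le 65535 ]
}

# Hypothetical helper: prints the *nat block for one TCP port redirect.
ufw_nat_block() {
  local exposed_port="$1" effective_port="$2"
  valid_port "$exposed_port" || return 1
  valid_port "$effective_port" || return 1
  cat <<EOF
*nat
:PREROUTING ACCEPT [0:0]
-A PREROUTING -p tcp --dport ${exposed_port} -j REDIRECT --to-port ${effective_port}
COMMIT
EOF
}
```

For example, `ufw_nat_block 514 1514` prints a block that redirects TCP port 514 to port 1514.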

To enable and reload the firewall, use the following commands:

    sudo ufw enable
    sudo ufw reload

To check the status, run sudo ufw status. The expected output is as follows:

    Status: active

    To                       Action      From
    --                       ------      ----
    exposed_port/tcp         ALLOW       Anywhere
    effective_port/tcp       ALLOW       Anywhere
    exposed_port/tcp (v6)    ALLOW       Anywhere (v6)
    effective_port/tcp (v6)  ALLOW       Anywhere (v6)

Manage Ports with Firewall on RHEL

If you use RHEL, use the following commands to allow SSH before you enable the firewall.

    sudo firewall-cmd --permanent --add-service ssh
    sudo firewall-cmd --reload

Each command should return success. The following is an example of the output.

    [exabeam@ngsc ~]$ sudo firewall-cmd --permanent --add-service ssh
    success
    [exabeam@ngsc ~]$ sudo firewall-cmd --reload
    success

To validate your changes after reloading, use the following command.

    sudo firewall-cmd --list-services

The output lists ssh and any other services that you have configured. With ssh allowed, users can still SSH into the VM after the firewall is enabled and run the shell commands for installing Site Collector. The following is an example of the output.

    [exabeam@ngsc ~]$ sudo firewall-cmd --list-services
    ssh

To set up port forwarding, run the following commands:

    sudo firewall-cmd --add-forward-port=port=514:proto=tcp:toport=1514 --permanent
    sudo firewall-cmd --reload
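After the reload, the forwarding rule can be verified from the output of sudo firewall-cmd --list-forward-ports. The following is a minimal sketch. The function name has_forward_port is hypothetical, and the sketch assumes that each rule is printed on its own line in the form port=514:proto=tcp:toport=1514:toaddr=, so adjust the pattern if your firewalld version prints the rule differently.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads forward-port rules on stdin and succeeds if a
# rule forwards FROM_PORT to TO_PORT for the given protocol (default tcp).
has_forward_port() {
  local from_port="$1" to_port="$2" proto="${3:-tcp}"
  # The trailing (:|$) stops toport=15 from matching toport=1514.
  grep -Eq "^port=${from_port}:proto=${proto}:toport=${to_port}(:|\$)"
}
```

For example, `sudo firewall-cmd --list-forward-ports | has_forward_port 514 1514 && echo "forwarding active"` reports whether the rule from the commands above is in effect.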