Set Up Kafka Collector

The Kafka collector is a set of Site Collector flows, pre-built processors, groups, custom processors, and other components and integrations that pulls logs in any text format from your Kafka server and pushes them to the New-Scale Security Operations Platform. Set up the Kafka collector to collect log data from a Kafka server that has multiple brokers and topics. A single Kafka collector instance can pull log data from up to five brokers and five topics.

Note

While configuring the Kafka collector, ensure that the Kafka-SSL server is reachable from the VM on which the Site Collector is installed; the VM must be able to communicate with the server over the network. If the Kafka-SSL server is not reachable by its hostname, add an entry to the /etc/hosts file on the Site Collector VM that maps the Kafka-SSL server's hostname to its IP address.
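To confirm that the Site Collector VM can reach the Kafka-SSL server before you configure the collector, you could run a quick TCP check on the VM. The following is a minimal Python sketch that uses only the standard library; `broker_reachable` and `hosts_entry` are hypothetical helper names, not part of the Site Collector. The check only confirms that a TCP connection opens; it does not validate TLS certificates or authentication.

```python
import ipaddress
import socket


def broker_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        # create_connection resolves `host` through the system resolver
        # (which normally consults /etc/hosts) and then opens a TCP connection.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Name-resolution failures (socket.gaierror), refused connections,
        # and timeouts are all subclasses of OSError.
        return False


def hosts_entry(ip: str, hostname: str) -> str:
    """Format a single /etc/hosts line mapping `hostname` to `ip`."""
    # ip_address raises ValueError if `ip` is not a valid IPv4 or IPv6 address.
    ipaddress.ip_address(ip)
    if not hostname or any(ch.isspace() for ch in hostname):
        raise ValueError(f"invalid hostname: {hostname!r}")
    return f"{ip}\t{hostname}"
```

For example, if `broker_reachable("kafka-ssl.example.internal", 9093)` returns `False` because the hostname does not resolve, `hosts_entry("10.0.0.15", "kafka-ssl.example.internal")` produces the line to append to /etc/hosts. The hostname, IP address, and port are placeholders; substitute the values for your own Kafka-SSL server.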

To set up a Kafka collector:

Log in to the New-Scale Security Operations Platform with your registered credentials.

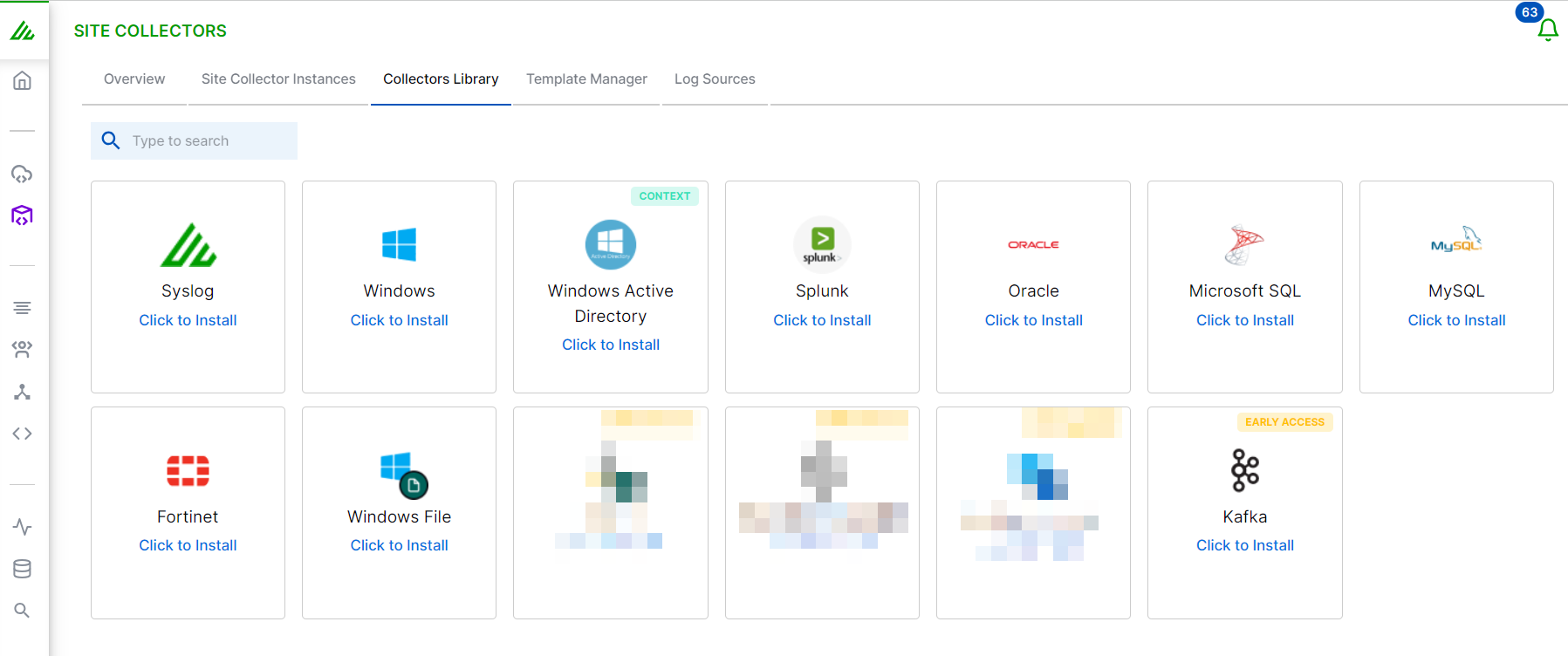

Navigate to Collectors > Site Collectors.

Ensure that the Site Collector is installed and running.

On the Site Collector page, click the Collectors Library tab, then click Kafka.

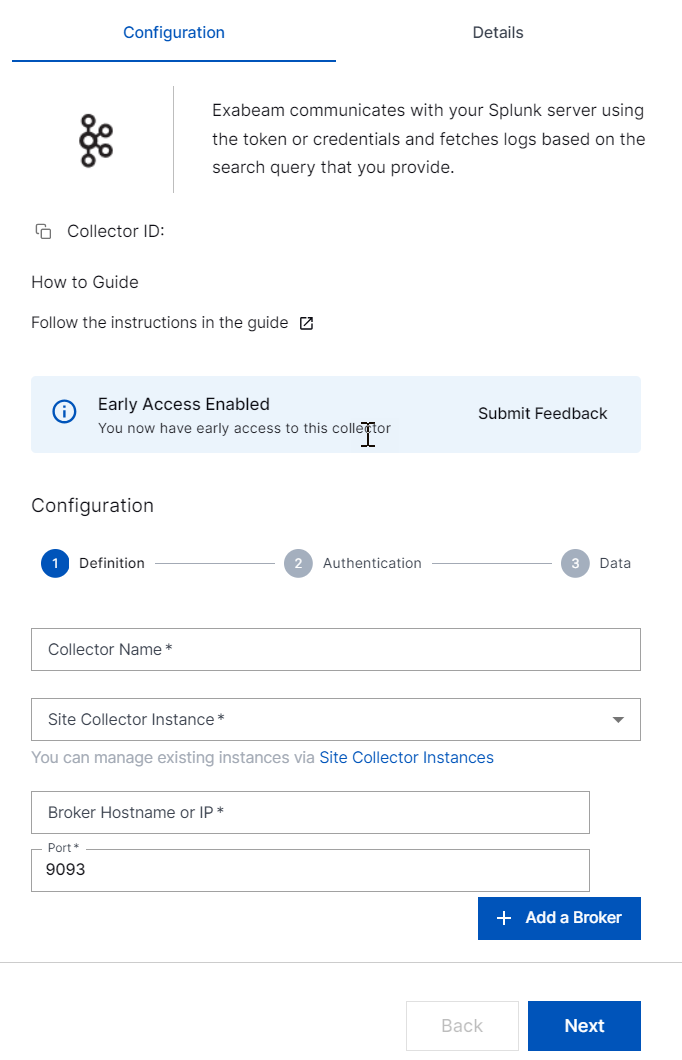

In the Definition section, enter the required information as follows.

Collector Name – Specify a name for the Kafka collector.

Note

Ensure that the collector name is different from the Site Collector instance name.

Site Collector Instance – Select the Site Collector instance on which you want to set up the Kafka collector.

Broker Hostname or IP – Enter the hostname or IP address of the Kafka broker from which you want the Kafka collector to pull logs.

Note

Ensure that you enter the correct broker hostname or IP address and the correct topic names. If any of these are incorrect, the Kafka collector does not report an error, because it cannot distinguish an incorrect broker or topic from a correct topic that simply has no messages.

Port – Enter the port number of your broker.

Add Broker – Click to add more brokers from which the collector can pull data. If your Kafka server uses a multi-broker setup for scalability and resilience, you can add up to five brokers, and the collector pulls data from whichever of them is available.

Refer to the following example for entering broker and port details.

Suppose your Kafka server runs on a machine with IP address 123.231.1.23, and each broker on this server listens on its own port. If the server has five brokers listening on ports 10000, 10001, 10002, 10003, and 10004, and you want the Kafka collector to pull logs from three of them, enter the broker and port details as follows:

Broker Hostname or IP: 123.231.1.23 Port: 10000

Broker Hostname or IP: 123.231.1.23 Port: 10001

Broker Hostname or IP: 123.231.1.23 Port: 10002
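Each broker entry corresponds to one `host:port` pair, the same form Kafka clients use in a comma-separated bootstrap server list. Whether the Site Collector builds such a string internally is an assumption; the hypothetical `bootstrap_servers` helper below is only a sketch for sanity-checking a broker list before you enter it. It enforces the five-broker limit from this guide, rejects out-of-range ports and duplicates, and reproduces the three-broker example above.

```python
from typing import List, Tuple

MAX_BROKERS = 5  # per-instance limit stated in this guide


def bootstrap_servers(brokers: List[Tuple[str, int]]) -> str:
    """Validate (host, port) pairs and join them as 'host:port,host:port,...'."""
    if not brokers:
        raise ValueError("at least one broker is required")
    if len(brokers) > MAX_BROKERS:
        raise ValueError(f"a Kafka collector supports at most {MAX_BROKERS} brokers")
    entries: List[str] = []
    for host, port in brokers:
        host = host.strip()
        if not host:
            raise ValueError("broker hostname or IP must not be empty")
        if not 1 <= port <= 65535:
            raise ValueError(f"invalid port {port} for broker {host}")
        # IPv6 literals would need [brackets]; this sketch does not handle them.
        entry = f"{host}:{port}"
        if entry in entries:
            raise ValueError(f"duplicate broker {entry}")
        entries.append(entry)
    return ",".join(entries)


# The three-broker example from this guide.
example = [("123.231.1.23", port) for port in (10000, 10001, 10002)]
print(bootstrap_servers(example))
# 123.231.1.23:10000,123.231.1.23:10001,123.231.1.23:10002
```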

Click Next.

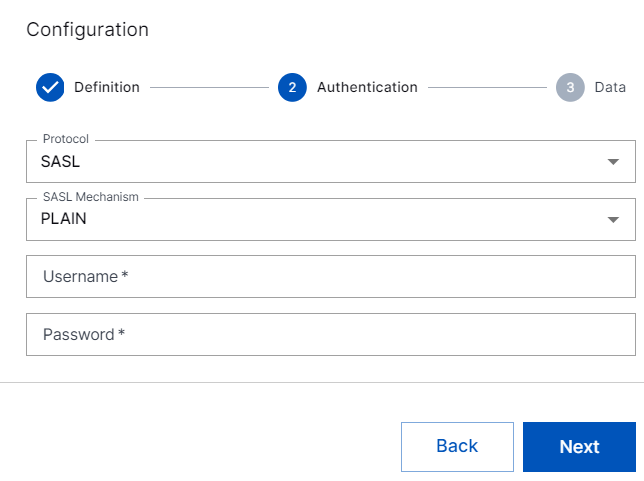

In the Authentication section, select the protocol for connecting to the Kafka source, based on the authentication mechanism your Kafka server is set up with.

Depending on whether your Kafka server is set up with or without authentication, select No Auth, SASL, or SSL. The Kafka collector supports four types of Kafka server authentication: no authentication, SASL PLAIN, SASL SCRAM SHA-256, and SASL SCRAM SHA-512.

No Auth – Select if your Kafka server does not require authentication.

SASL – Enter the following SASL details, which you can obtain from your support team or Kafka administrator.

SASL Mechanism – Select the SASL mechanism your Kafka server is set up with: PLAIN, SCRAM SHA-256, or SCRAM SHA-512.

Username – Enter the username for your Kafka server, as provided by your support team or Kafka administrator.

Password – Enter the password for your Kafka server, as provided by your support team or Kafka administrator.

SSL – Select for an SSL/TLS connection, then upload the CA certificate (ca.pem), the client certificate (cert.pem), and the client private key (key.pem).
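If you want to test the same connection settings outside the platform, for example with your Kafka administrator, it can help to see how each Authentication choice is usually expressed as Kafka client properties. The sketch below uses librdkafka-style property names (the configuration used by clients such as confluent-kafka-python); how the Site Collector maps these choices internally is not documented here, so treat the mapping as an assumption. In particular, it assumes that SASL runs over an unencrypted listener (`SASL_PLAINTEXT`); use `SASL_SSL` if your broker's SASL listener also requires TLS. The helper names `normalize_mechanism` and `client_security_config` are hypothetical.

```python
from typing import Dict, Optional

# SASL mechanism names as Kafka clients spell them.
SASL_MECHANISMS = {"PLAIN", "SCRAM-SHA-256", "SCRAM-SHA-512"}


def normalize_mechanism(label: str) -> str:
    """Turn a UI label such as 'SCRAM SHA-256' into the Kafka spelling 'SCRAM-SHA-256'."""
    name = label.strip().upper()
    if name.startswith("SASL "):
        name = name[len("SASL "):]
    return name.replace(" ", "-")


def client_security_config(
    auth: str,
    mechanism: Optional[str] = None,
    username: Optional[str] = None,
    password: Optional[str] = None,
    ca_path: str = "ca.pem",
    cert_path: str = "cert.pem",
    key_path: str = "key.pem",
) -> Dict[str, str]:
    """Map an Authentication choice to librdkafka-style client properties."""
    choice = auth.strip().upper()
    if choice == "NO AUTH":
        return {"security.protocol": "PLAINTEXT"}
    if choice == "SASL":
        mech = normalize_mechanism(mechanism or "")
        if mech not in SASL_MECHANISMS:
            raise ValueError(f"unsupported SASL mechanism: {mechanism!r}")
        if not username or not password:
            raise ValueError("SASL requires a username and password")
        return {
            # Assumption: SASL over an unencrypted listener. Use SASL_SSL
            # instead if your broker's SASL listener also requires TLS.
            "security.protocol": "SASL_PLAINTEXT",
            "sasl.mechanism": mech,
            "sasl.username": username,
            "sasl.password": password,
        }
    if choice == "SSL":
        return {
            "security.protocol": "SSL",
            "ssl.ca.location": ca_path,             # CA certificate (ca.pem)
            "ssl.certificate.location": cert_path,  # client certificate (cert.pem)
            "ssl.key.location": key_path,           # client private key (key.pem)
        }
    raise ValueError(f"unsupported authentication option: {auth!r}")
```

For example, `client_security_config("SASL", "SCRAM SHA-256", "alice", "secret")` returns properties with `sasl.mechanism` set to `SCRAM-SHA-256`. The username and password are placeholders; avoid hard-coding real credentials in scripts.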

Click Next.

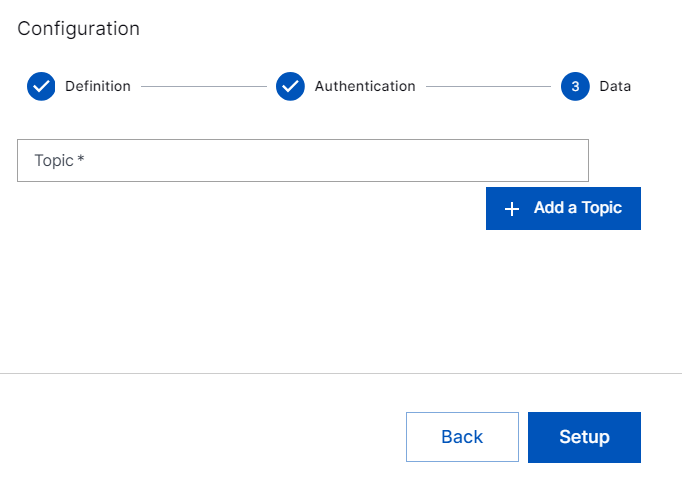

In the Data section, enter the name of the Kafka topic from which the collector should retrieve logs. You can add up to five topics.
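Because the collector does not report an error for an incorrect topic name, it can help to check names before you enter them. The hypothetical `validate_topics` helper below is a sketch that applies Apache Kafka's topic naming rules (ASCII letters, digits, `.`, `_`, and `-`; at most 249 characters; not `.` or `..`) together with the five-topic limit from this guide. It catches typos such as spaces or illegal characters, but it cannot tell whether a well-formed topic actually exists on your cluster.

```python
import re
from typing import List

MAX_TOPICS = 5  # per-instance limit stated in this guide
MAX_TOPIC_LENGTH = 249  # Apache Kafka's maximum topic name length
_LEGAL_TOPIC = re.compile(r"[A-Za-z0-9._-]+")


def validate_topics(topics: List[str]) -> List[str]:
    """Return stripped topic names, or raise ValueError if any name is invalid."""
    names = [t.strip() for t in topics]
    if not names:
        raise ValueError("at least one topic is required")
    if len(names) > MAX_TOPICS:
        raise ValueError(f"a Kafka collector supports at most {MAX_TOPICS} topics")
    for name in names:
        if not name:
            raise ValueError("topic name must not be empty")
        if name in (".", ".."):
            raise ValueError(f"topic name cannot be {name!r}")
        if len(name) > MAX_TOPIC_LENGTH:
            raise ValueError(f"topic name longer than {MAX_TOPIC_LENGTH} characters")
        if not _LEGAL_TOPIC.fullmatch(name):
            raise ValueError(f"illegal characters in topic name {name!r}")
    if len(set(names)) != len(names):
        raise ValueError("duplicate topic names")
    return names
```

For example, `validate_topics([" firewall-logs ", "dns.events"])` returns `["firewall-logs", "dns.events"]`, while a name such as `"firewall logs"` is rejected. The topic names shown are placeholders.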

Click Setup.

The Kafka collector is set up and is ready to pull logs from your Kafka sources.

If installation fails, the collector is disabled and its configuration is saved. You can check the collector status in the UI or in the support package.