How Advanced Analytics Works

Exabeam uses a two-layer approach to identify incidents. The first layer involves collecting data from log sources and from external context data sources. The second layer involves processing data through the Analysis Engine and the Exabeam Stateful User Tracking™ technology.

In the first layer, events are normalized and enriched with contextual information about users and assets so that entity activity within the environment can be understood across a variety of dimensions. At this level, statistical modeling profiles the behaviors of network entities, while machine learning is applied to context estimation, for example to distinguish between users and service accounts.

In the second layer, as events are processed, Exabeam uses Stateful User Tracking to connect user activities across multiple accounts, devices, and IP addresses. It places all user credential activities and characteristics on a timeline, with scores assigned to anomalous access behavior. Traditional security alerts are also scored, attributed to identities, and placed on the activity timeline. The Analysis Engine updates risk scores based on input from the anomaly detection process and from other external data sources. It applies security knowledge and expertise to surface significant anomalies. Incidents are generated when scores exceed defined thresholds.

Fetch and Ingest Logs

The way that logs are fetched and ingested depends on the version of Advanced Analytics you are using:

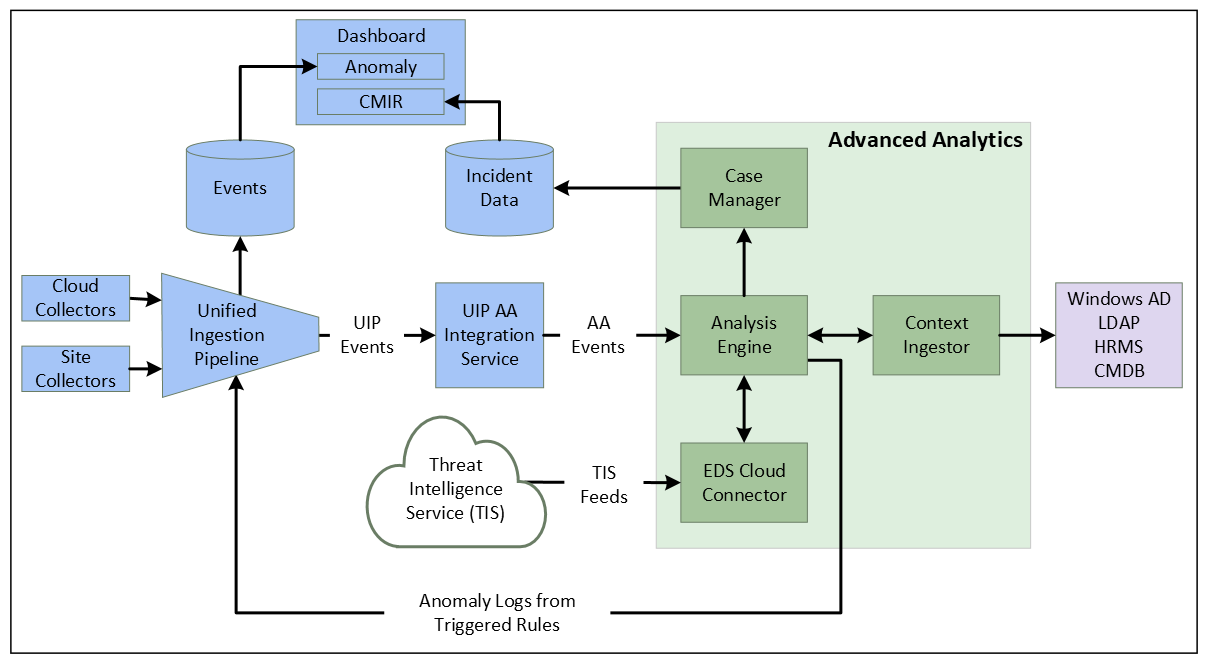

i63 and Later

In these versions, log data enters Exabeam through a set of collector services. These services collect data from servers, applications, databases, and other devices across an infrastructure, whether the source is local, remote, or cloud-based. Logs can be collected from on-premises sites using Site Collectors or from third-party cloud vendors using Cloud Collectors.

The ingested logs are processed into events by the unified ingestion pipeline (UIP). These events conform to the hierarchical common information model that informs the data structure for all Exabeam products. These events are then processed through a UIP Advanced Analytics Integration service that transforms them into events that are readable by Advanced Analytics.

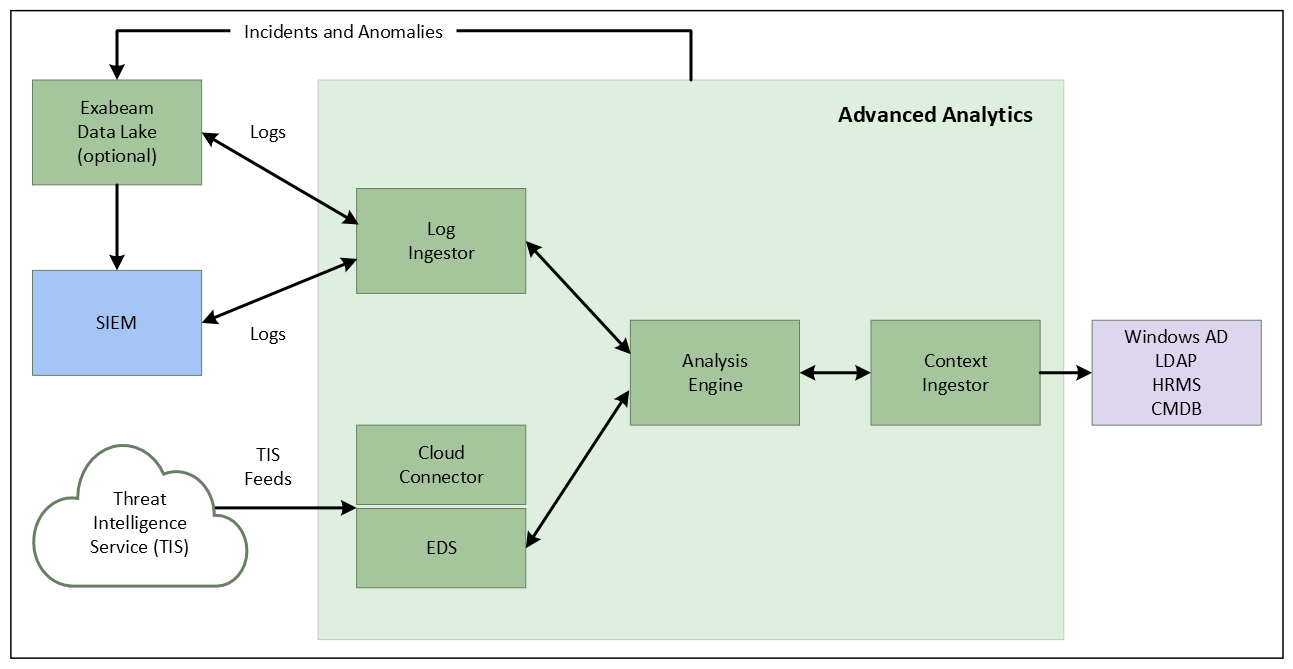

i60 to i62

In these versions, Exabeam can fetch logs from SIEM log repositories and also ingest logs via Syslog. Currently, log ingestion is supported from Splunk, Micro Focus ArcSight, IBM QRadar, McAfee ESM, and RSA Security Analytics, as well as from other data sources such as Data Lake. For Splunk and QRadar, logs are ingested via external APIs; Syslog is used for all others. For SIEM solutions such as LogRhythm, McAfee ESM, and LogLogic, ingestion is via Syslog forwarding.

The ingested logs are processed into events by the LIME (Log Ingestion and Message Extraction) engine.

Data Volume Limitations

With a Fusion license, you must use Advanced Analytics in a way that does not interfere with or disrupt the SaaS environment, servers, or networks. Additionally, you must not engage in any activity that interferes with the integrity or proper working of the service.

To ensure the proper operation of Advanced Analytics, the following limitations apply for each 1 GB of daily average consumption purchased:

- You cannot exceed 17 events per second (EPS).

- If you exceed 10 EPS in any 30-minute time period, you cannot exceed 10 EPS for at least four hours afterward.

For example, if you purchased 100 GB of daily average consumption but exceed 1,000 EPS for more than 30 minutes within a 4-hour timeframe, the performance and functionality of cloud-delivered Advanced Analytics can degrade.
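The per-gigabyte limits scale linearly with the purchased volume, so the arithmetic can be sketched in a few lines of Python. This is an illustrative calculation only: the function and class names are hypothetical, the per-minute EPS samples are assumed to come from your own monitoring, and the sketch does not reproduce how Exabeam measures or enforces the limits.

```python
from dataclasses import dataclass

# Per-GB figures taken from the limitations listed above.
PEAK_EPS_PER_GB = 17       # ceiling that must never be exceeded
SUSTAINED_EPS_PER_GB = 10  # rate that triggers the four-hour restriction


@dataclass(frozen=True)
class EpsLimits:
    peak_eps: int
    sustained_eps: int


def eps_limits(purchased_gb: int) -> EpsLimits:
    """Scale the per-GB limits to the purchased daily average consumption."""
    return EpsLimits(
        peak_eps=purchased_gb * PEAK_EPS_PER_GB,
        sustained_eps=purchased_gb * SUSTAINED_EPS_PER_GB,
    )


def exceeds_peak(eps_samples: list[float], limits: EpsLimits) -> bool:
    """Return True if any sample is above the peak ceiling."""
    return any(eps > limits.peak_eps for eps in eps_samples)


def samples_over_sustained(eps_samples: list[float], limits: EpsLimits) -> int:
    """Count samples above the sustained rate (hypothetical monitoring data)."""
    return sum(1 for eps in eps_samples if eps > limits.sustained_eps)


limits = eps_limits(100)
print(limits.peak_eps, limits.sustained_eps)
```

For a 100 GB purchase this gives a sustained rate of 1,000 EPS, which matches the example above, and a peak ceiling of 1,700 EPS.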

Caution

Failure to adhere to operational limitations can result in downtime or errors. If you exceed the limitations, Exabeam will not be responsible for any resulting issues.

Add Context

Logs tell us what users and entities are doing, while context tells us who those users and entities are. Context data typically comes from identity services such as Active Directory. This data enriches the logs to help with the anomaly detection process, or it can be used directly by the Analysis Engine for fact-based rules. Regardless of where this context data is used, it goes through the anomaly detection process as part of an event. Examples of context information include the location or ISP name for a given IP address and the department for a user. Contextual information can also include data from HR management systems, configuration management databases, identity systems, and similar sources. Threat intelligence feeds are another example of contextual data that the anomaly detection process can use to identify activity from a known malicious domain or IP address.
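As a rough illustration of enrichment, the following Python sketch merges context into a log event before it is analyzed. All table names, field names, and sample values here are hypothetical and exist only for this example; they are not Exabeam context tables or event fields.

```python
from typing import Any

# Hypothetical context sources, keyed the way an identity service,
# an IP intelligence source, and a threat feed might be.
USER_CONTEXT = {"jdoe": {"department": "Finance"}}
IP_CONTEXT = {"203.0.113.7": {"location": "Berlin, DE", "isp": "ExampleNet"}}
THREAT_INTEL_IPS = {"198.51.100.23"}


def enrich(event: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of the event with user, IP, and threat context added."""
    enriched = dict(event)
    enriched.update(USER_CONTEXT.get(event.get("user"), {}))
    enriched.update(IP_CONTEXT.get(event.get("src_ip"), {}))
    enriched["known_malicious_ip"] = event.get("src_ip") in THREAT_INTEL_IPS
    return enriched


raw_event = {"user": "jdoe", "src_ip": "203.0.113.7", "action": "vpn-login"}
print(enrich(raw_event))
```

The original event is left unmodified, so the same raw log can be enriched again if the context data changes.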

Detect Anomalies

In this part of the process, machine learning algorithms are used to identify anomalous behaviors. The anomalies may be relative to a single user, session, or device, or relative to group behavior. For example, some anomalies describe behavior that is unusual for a user relative to their own history. Other anomalies describe behavior that is unusual relative to people with similar roles (a peer group), a location, or another grouping mechanism.

The algorithms are constantly improved upon to increase the speed and accuracy of numerical data calculations. This in turn improves the performance of Advanced Analytics.
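The difference between "anomalous for this user" and "anomalous for the peer group" can be shown with a deliberately simple stand-in. The sketch below uses a z-score test, which is an assumption made for illustration and not the algorithm Advanced Analytics uses; the function names, the threshold of 3.0, and the sample values are all hypothetical.

```python
from statistics import mean, pstdev


def z_score(value: float, baseline: list[float]) -> float:
    """Distance of value from the baseline mean, in standard deviations."""
    if len(baseline) < 2:
        return 0.0  # not enough history to judge
    sigma = pstdev(baseline)
    mu = mean(baseline)
    if sigma == 0:
        return 0.0 if value == mu else float("inf")
    return (value - mu) / sigma


def assess(value: float, own_history: list[float], peer_values: list[float],
           threshold: float = 3.0) -> dict[str, bool]:
    """Flag the value against the user's own history and against peers."""
    return {
        "vs_self": abs(z_score(value, own_history)) > threshold,
        "vs_peers": abs(z_score(value, peer_values)) > threshold,
    }


# Hypothetical daily counts of hosts accessed.
own_history = [10, 12, 11, 9, 10]
peer_values = [45, 55, 50, 48, 52]
print(assess(50, own_history, peer_values))
```

In this example a value of 50 is far outside the user's own history but typical for the peer group, which is the kind of distinction that grouping mechanisms make possible.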

Assess Risk

The Analysis Engine treats each session as a container and assigns risk scores to the events that are flagged as anomalous. When the sum of event risk scores reaches a threshold (a default value of 90), incidents are automatically generated within the Case Management module or escalated as incidents to an existing SIEM or ticketing system. Event scores can also be queried through the user interface. In some cases, these scores reflect more than behavior that is anomalous on the basis of log feeds alone: they may also incorporate security alerts generated by third-party sources (for example, FireEye or CrowdStrike alerts). These security alerts are integrated into user sessions and scored as factual risks.
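The session-as-container idea can be sketched as follows. Only the default threshold of 90 comes from the text above; the class, its fields, the event descriptions, and the individual scores are hypothetical, and the sketch assumes an incident is raised once the summed score is at or above the threshold.

```python
from dataclasses import dataclass, field

DEFAULT_THRESHOLD = 90  # default incident threshold stated above


@dataclass
class Session:
    session_id: str
    threshold: int = DEFAULT_THRESHOLD
    scored_events: list[tuple[str, int]] = field(default_factory=list)

    def add_event(self, description: str, risk_score: int) -> None:
        """Record an event that was flagged and scored."""
        self.scored_events.append((description, risk_score))

    @property
    def total_risk(self) -> int:
        """Sum of the risk scores of all events in this session."""
        return sum(score for _, score in self.scored_events)

    @property
    def is_incident(self) -> bool:
        """True once the summed score reaches the threshold."""
        return self.total_risk >= self.threshold


session = Session("jdoe-session-1")
session.add_event("first VPN login from a new country", 40)
session.add_event("first access to a finance server", 30)
session.add_event("third-party malware alert on the user's laptop", 25)
print(session.total_risk, session.is_incident)
```

Behavioral anomalies and third-party alerts are added to the same session in this sketch, mirroring how security alerts are integrated into user sessions and scored alongside anomalous events.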

Data Flow Diagrams

The flow of data through the components of Advanced Analytics depends on which version you are using. Select the relevant version below to view the appropriate diagram.