
Configure the Azure Log Analytics Cloud Collector

Set up the Azure Log Analytics Cloud Collector to continuously ingest security events from your Azure Log Analytics workspace.

Before you configure the Azure Log Analytics Cloud Collector, ensure that you complete the prerequisites.

Log in to the New-Scale Security Operations Platform with your registered credentials as an administrator.

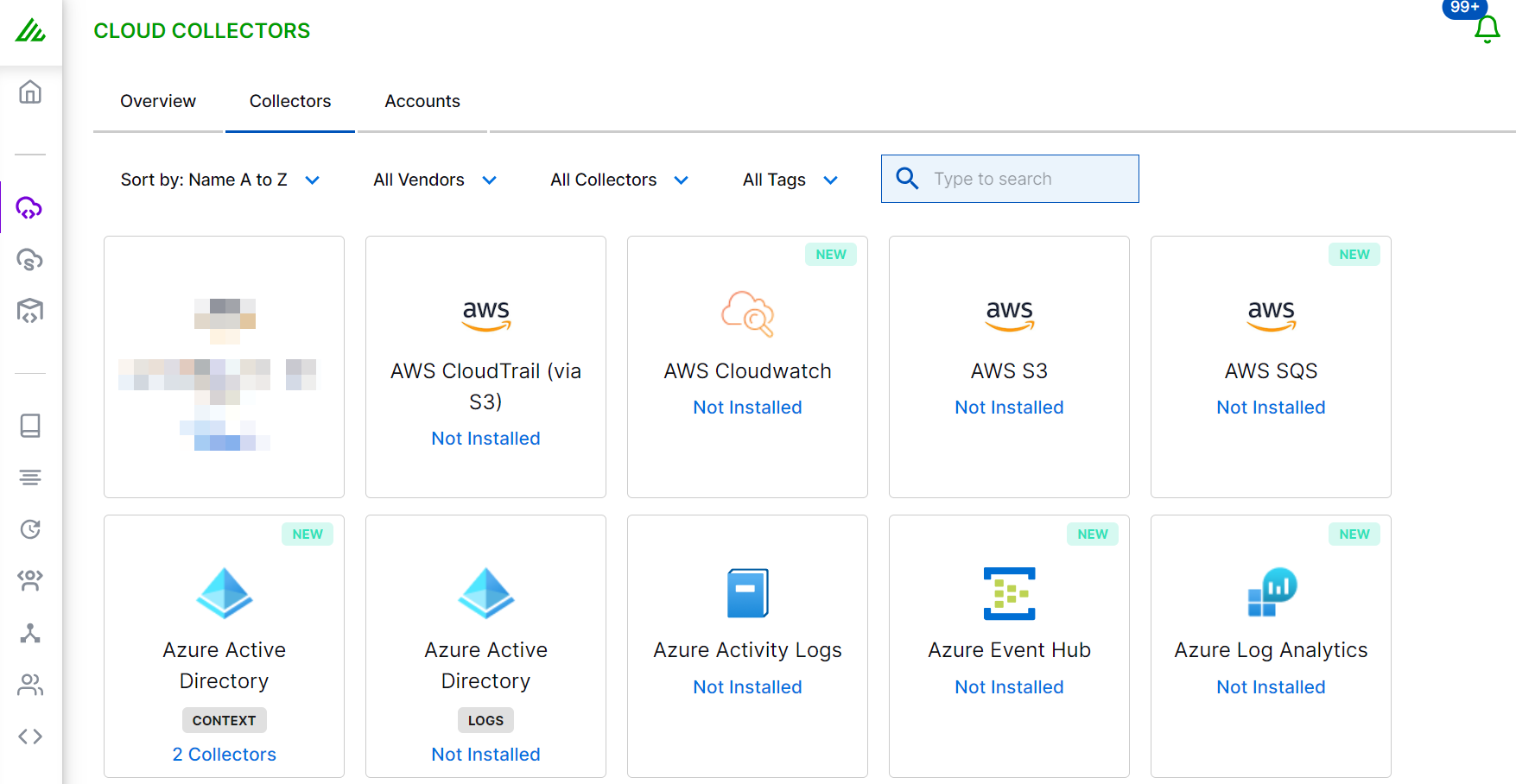

Navigate to Collectors > Cloud Collectors.

Click New Collector.

Click Azure Log Analytics.

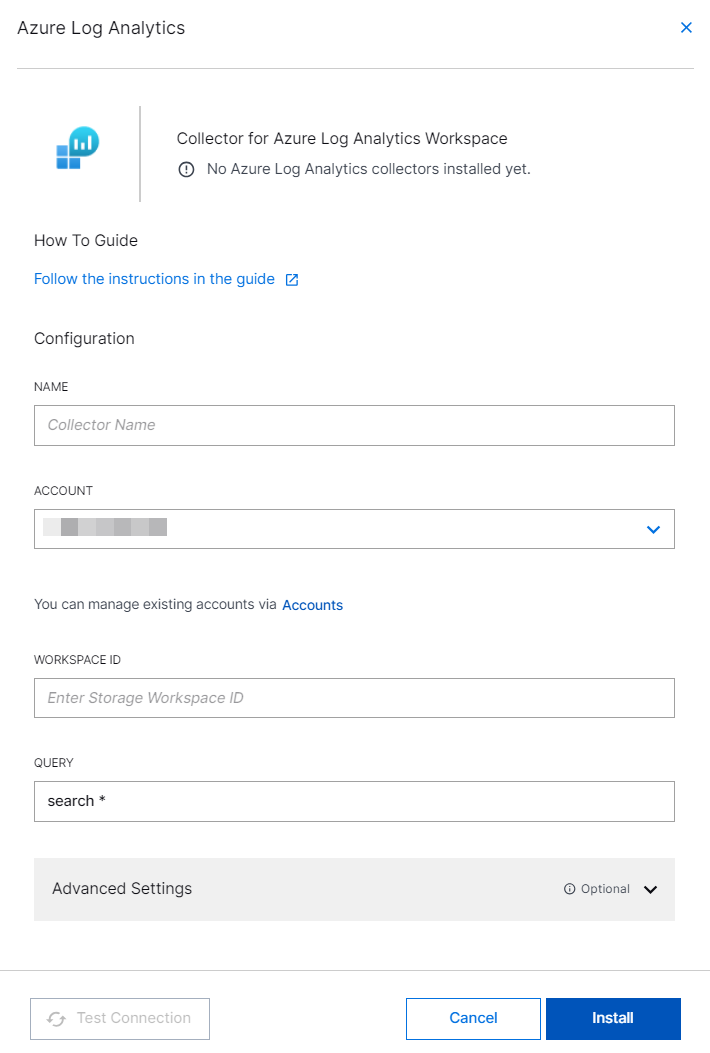

Enter the following information for the cloud collector:

NAME – Specify a name for the Cloud Collector instance.

Account – Click New Account to add a new Microsoft service account or select an existing account. You can use the same account information across multiple Microsoft cloud collectors. For more information, see Add Accounts for Microsoft Cloud Collectors.

WORKSPACE ID – Enter the Azure Log Analytics workspace ID that you obtained while completing the prerequisites.

QUERY – Enter the KQL query. The cloud collector pulls logs based on your query. For example:

search "AZMSOperationalLogs"

search "StorageQueueLogs" or "StorageBlobLogs" or "StorageTableLogs" or "StorageFileLogs"

search "Syslog" or "Perf" or "Heartbeat"

You can use the default query, search *. If you leave the field blank, the default query search * is applied.

Note

Ensure that your query is a valid KQL query that can run on the Log Analytics workspace and that it does not contain a time filter, such as search * | where TimeGenerated > ago(1h).

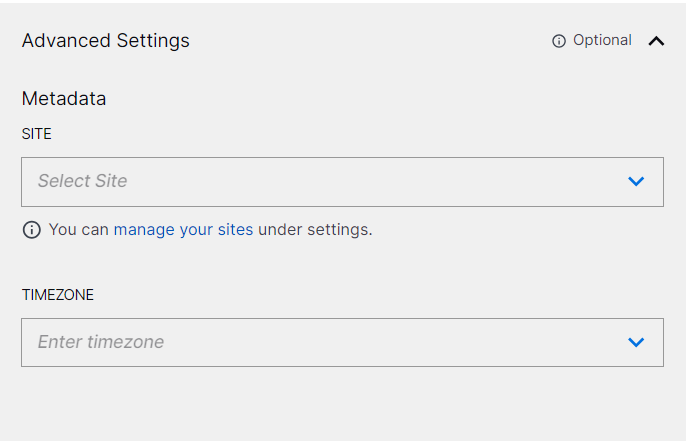

(Optional) SITE – Select an existing site or, to create a new site with a unique ID, click manage your sites. Adding a site name helps you efficiently manage environments with overlapping IP addresses.

By entering a site name, you associate the logs with a specific independent site. A sitename metadata field is automatically added to all events ingested through this collector. For more information about Site Management, see Define a Unique Site Name.

(Optional) TIMEZONE – Select a time zone applicable to you for accurate detections and event monitoring.

By entering a time zone, you override the default log time zone. A timezone metadata field is automatically added to all events ingested through this collector.
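Before you click Test Connection, you can catch the two most common input mistakes locally: a workspace ID that is not a GUID, and a query that contains its own time filter. The following is a minimal sketch, assuming that the workspace ID is the GUID shown for the workspace in the Azure portal. The helper validate_settings and the time-filter heuristic are hypothetical and only illustrate the rules stated above; they are not part of the platform.

```python
import re
import uuid
from typing import Optional, Tuple

# Default query that is applied when the QUERY field is left blank.
DEFAULT_QUERY = "search *"

# Rough heuristic (an assumption, not the platform's own rule): these patterns
# suggest that the query applies its own time filter.
_TIME_FILTER = re.compile(r"\bTimeGenerated\b|\bago\s*\(", re.IGNORECASE)


def validate_settings(workspace_id: str, query: Optional[str]) -> Tuple[str, str]:
    """Return a normalized (workspace_id, query) pair or raise ValueError.

    Hypothetical pre-flight check for illustration only.
    """
    try:
        # Log Analytics workspace IDs are GUIDs; uuid.UUID rejects other strings.
        normalized_id = str(uuid.UUID(workspace_id.strip()))
    except ValueError:
        raise ValueError(f"Workspace ID is not a GUID: {workspace_id!r}") from None

    # A blank or whitespace-only QUERY field falls back to the default query.
    if query is None or not query.strip():
        return normalized_id, DEFAULT_QUERY

    cleaned = query.strip()
    if _TIME_FILTER.search(cleaned):
        raise ValueError(
            "Remove the time filter (for example, TimeGenerated or ago()) from the query."
        )
    return normalized_id, cleaned
```

The heuristic is deliberately coarse: a query that merely projects the TimeGenerated column is also flagged, so treat a rejection as a prompt to review the query rather than as proof that it is invalid.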

To confirm that the New-Scale Security Operations Platform communicates with the service, click Test Connection.

Click Install.

A confirmation message informs you that the new Cloud Collector is created.

Troubleshoot Common Issues with the Azure Log Analytics Cloud Collector

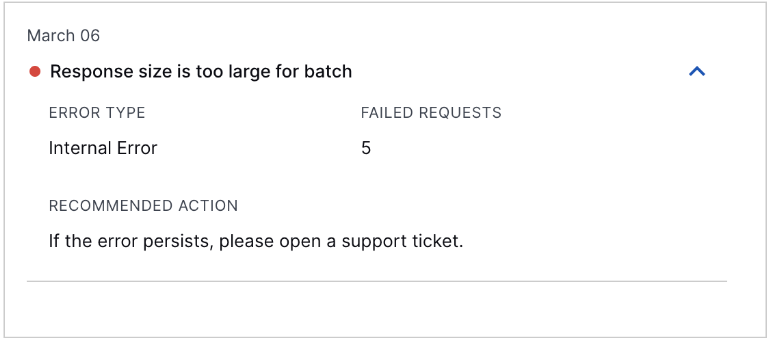

After you configure the Azure Log Analytics Cloud Collector, if the volume of data that the query returns from the Log Analytics workspace exceeds 100 MB, the cloud collector displays the error message 'Response Size is too large for batch'. This error can occur, for example, when CloudTrail data from an S3 data source flows through Microsoft Sentinel into the Log Analytics workspace.
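If you have a sample result from your query (for example, rows exported from a run in the Azure portal), a rough size estimate tells you whether the query is likely to hit the 100 MB limit. The following is a minimal sketch, assuming that the limit is 100 MiB and that UTF-8 JSON is a reasonable proxy for the API payload; both are assumptions, and the helper names are hypothetical.

```python
import json
from typing import Any, Iterable, Mapping

# Assumption: the 100 MB Azure API response limit is treated as 100 MiB here.
RESPONSE_LIMIT_BYTES = 100 * 1024 * 1024


def response_size_bytes(rows: Iterable[Mapping[str, Any]]) -> int:
    """Approximate a result's size by serializing the rows as UTF-8 JSON.

    The real API payload format differs, so treat this only as an estimate.
    """
    # default=str lets datetimes and other non-JSON types serialize as text.
    return len(json.dumps(list(rows), default=str).encode("utf-8"))


def fits_within_limit(
    rows: Iterable[Mapping[str, Any]], limit: int = RESPONSE_LIMIT_BYTES
) -> bool:
    """Return True if the estimated result size is at or below the limit."""
    return response_size_bytes(rows) <= limit
```

Because the estimate only approximates the wire format, leave generous headroom below the limit when you decide how narrowly to filter the query.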

To troubleshoot this error, consider the following recommendations.

Edit the configuration of the cloud collector instance and modify the query to filter the data so that the response size stays within the Azure API response size limit of 100 MB.

Data ingestion may lag by up to 15 minutes. For more information about delays in pulling logs from the source, see Data collection endpoints and Log data ingestion time in Azure Monitor in the Azure documentation.

Create a separate cloud collector instance for each type of data source, each with a query that targets a specific table in the Log Analytics workspace. For example, the query

search "AZMSOperationalLogs"

collects data from the AZMSOperationalLogs table.