Scenarios that Require Specific Configuration

Some Cribl logs require additional configuration in order to be parsed effectively in Exabeam. To ensure these logs reach the appropriate Exabeam parsers, the necessary steps must be performed in Cribl Stream. Follow the links in the scenarios listed below for information about the required configurations:

Collecting Data from a Splunk Source – Configure a pipeline in your Cribl Stream connection that extracts a specific subset of the _raw field of a Cribl log file.

Targeting Parsers that Require Augmented Metadata – Configure a pipeline in your Cribl Stream connection that includes a Mask function with a regex replace statement that inserts the necessary metadata key-value pairs into the _raw field of Cribl log files.

Collecting Data from a Splunk Source

To collect Cribl logs from a Splunk source, a specific subset of information in the _raw field of the log is required. In order for Splunk data to be collected and parsed appropriately in Exabeam, this subset of information must be extracted and used to replace the original _raw field in the log.

To streamline this extract and replace process, Exabeam provides a JSON code snippet. When included in a pipeline, this JSON code creates an Eval function that filters for the required information in the _raw field, extracts it, and overwrites the original field with the result.
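The effect of that Eval function on a single event can be sketched in Python. This is only an illustration, not Cribl code: the function name `extract_raw` and the sample event are hypothetical, and the check mirrors the snippet's filter (`result != null && result._raw != null`) under the assumption that `result` arrives as a parsed JSON object.

```python
from typing import Any


def extract_raw(event: dict[str, Any]) -> dict[str, Any]:
    """Overwrite _raw with result._raw when the filter condition holds."""
    result = event.get("result")
    # Mirrors the filter: result != null && result._raw != null.
    # Assumption: result is a parsed JSON object (a dict here).
    if isinstance(result, dict) and result.get("_raw") is not None:
        updated = dict(event)  # leave the input event untouched
        updated["_raw"] = result["_raw"]
        return updated
    # Events that do not match the filter pass through unchanged.
    return event


# Hypothetical Splunk search result after JSON event breaking.
sample = {
    "_raw": '{"result": {"_raw": "original log line", "host": "h1"}}',
    "result": {"_raw": "original log line", "host": "h1"},
}
print(extract_raw(sample)["_raw"])
```

Events without a `result._raw` value are returned as they came in, which corresponds to the filter skipping them.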

To implement this configuration:

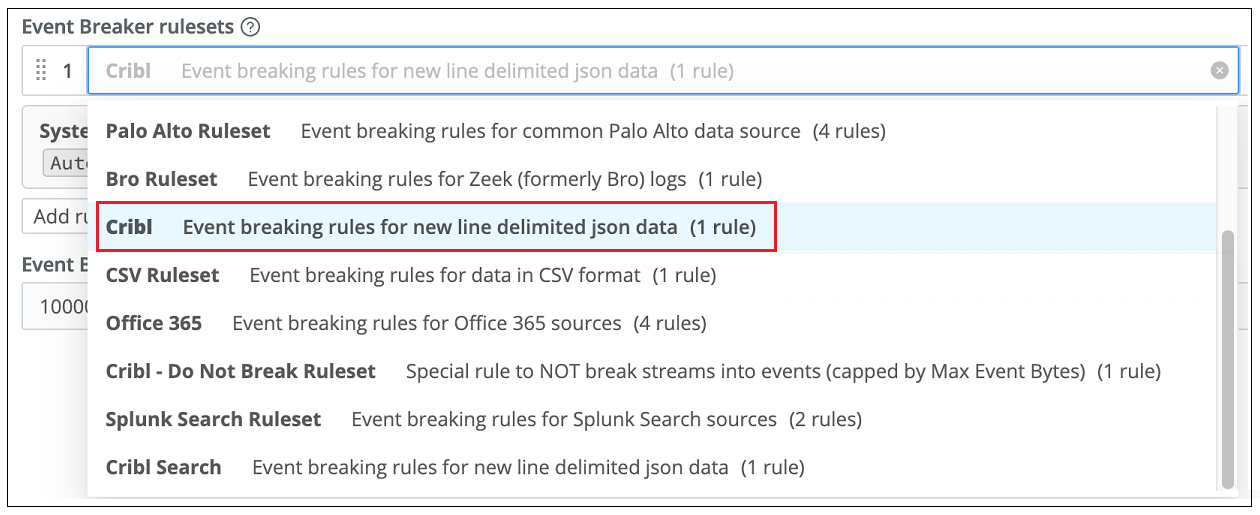

Open your Splunk Search source and navigate to the Configuration screen. Click Event Breakers and use the Ruleset drop down to switch from the default Splunk ruleset to the Cribl ruleset called Cribl Event breaking rules for line delimited json data. Save the change.

Copy the following JSON code snippet and save it.

{ "id": "Splunk_Search_Extract_Raw", "conf": { "output": "default", "streamtags": [], "groups": {}, "asyncFuncTimeout": 1000, "functions": [ { "filter": "result != null && result._raw != null", "conf": { "add": [ { "disabled": false, "name": "_raw", "value": "result._raw" } ] }, "id": "eval", "description": "Update Splunk search results (broken as JSON) to overwrite _raw with result._raw" } ] } }In your Cribl Stream worker group, navigate to Processing -> Pipelines.

Create a new pipeline using the Import from File option. See Adding Pipelines in the Cribl documentation.

Navigate to where you saved the JSON code in Step 2 and select the file. Click Import and then Save.

In Cribl Stream, navigate to Routing and select QuickConnect or Data Routes to route data to a specific destination based on the standard your organization uses.

Create a connection between your Splunk source on the left and your Exabeam destination on the right. See QuickConnect in the Cribl documentation.

Note

If you have multiple Exabeam destinations configured, make sure you select the one associated with the appropriate Cribl Cloud Collector.

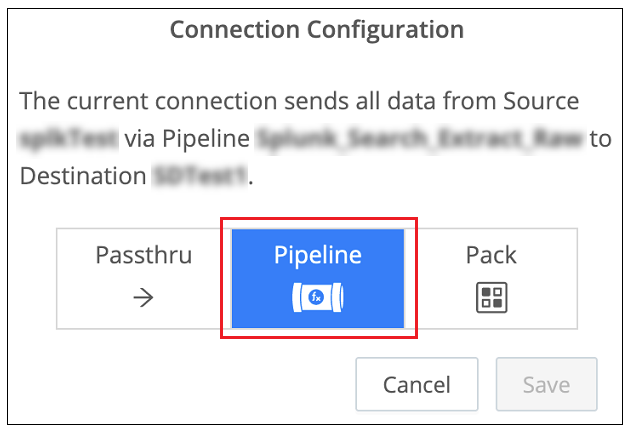

When the Connection Configuration dialog box is displayed, click the Pipeline option. The Add Pipeline to Connection dialog box opens.

Select the radio button next to the pipeline you created in Step 4 and click Save. The pipeline is added to the connection between your Splunk source and your Exabeam destination.

When the pipeline configuration is complete, verify that Splunk data is collected successfully in your Cribl Cloud Collector and that the collected logs are parsed correctly in downstream Exabeam services.
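Before importing the saved file, it can help to confirm that the snippet was copied intact. The following Python sketch is an optional local check, not part of Cribl or Exabeam: the helper `check_pipeline` is hypothetical, and the snippet is embedded as a string so the example is self-contained.

```python
import json

# The pipeline snippet from the procedure above, embedded for a self-contained check.
PIPELINE_JSON = """
{ "id": "Splunk_Search_Extract_Raw", "conf": { "output": "default",
  "streamtags": [], "groups": {}, "asyncFuncTimeout": 1000,
  "functions": [ { "filter": "result != null && result._raw != null",
    "conf": { "add": [ { "disabled": false, "name": "_raw",
                         "value": "result._raw" } ] },
    "id": "eval",
    "description": "Update Splunk search results (broken as JSON) to overwrite _raw with result._raw" } ] } }
"""


def check_pipeline(text: str) -> list[str]:
    """Return a list of problems found in a saved pipeline definition."""
    try:
        pipeline = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    if not isinstance(pipeline, dict):
        return ["top-level value is not a JSON object"]

    problems = []
    functions = pipeline.get("conf", {}).get("functions", [])
    evals = [f for f in functions if f.get("id") == "eval"]
    if not evals:
        problems.append("no eval function found")
    for func in evals:
        fields = func.get("conf", {}).get("add", [])
        # The eval must have an enabled entry that writes to _raw.
        if not any(a.get("name") == "_raw" and not a.get("disabled") for a in fields):
            problems.append("eval function does not set _raw")
    return problems


print(check_pipeline(PIPELINE_JSON) or "pipeline definition looks complete")
```

To check a file on disk instead, read its contents and pass the text to `check_pipeline`.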

Targeting Parsers that Require Augmented Metadata

Some Exabeam parsers require a specific metadata key-value pair to be present in log messages that are ingested from Cribl. This metadata must be present in the _raw field of a Cribl log file in order for the log to be evaluated by the appropriate Exabeam parser. To determine if you are using parsers that require augmented metadata, and to find the exact conditions that must be added to outgoing logs, see the table in Parsers that Require Augmented Metadata.

One method for augmenting Cribl logs with the required metadata is to create a pipeline and apply it to the connection between the source and your Exabeam destination. Add a Mask function to the pipeline that includes regex statements that insert the required exact conditions into outgoing logs. The steps below outline this procedure. However, depending on the source you are using, additional filtering logic is sometimes required. Consult your Cribl representative for help in such cases.

To implement this method of augmenting log metadata:

In your Cribl Stream worker group, navigate to Processing -> Pipelines.

Create a new pipeline using the Create Pipeline option. See Adding Pipelines in the Cribl documentation.

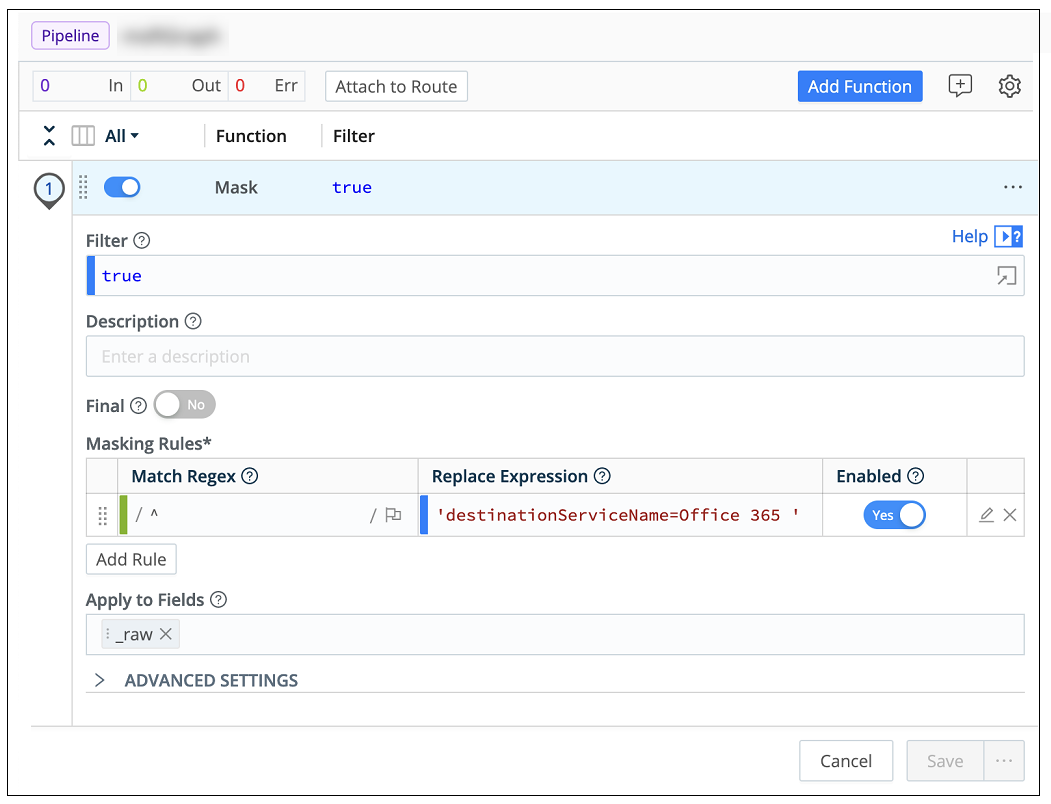

Open the new pipeline and add a standard Mask function to it. Configure the following information:

Match Regex statement – Add a Regex expression that will find the start of the log message.

Replace Expression – Enter the exact condition required for a specific parser designed for a specific vendor and product. To find the exact condition, see the table in Parsers that Require Augmented Metadata.

The image below shows an example of a configured Mask function. For more information, see Function/Mask in the Cribl documentation.

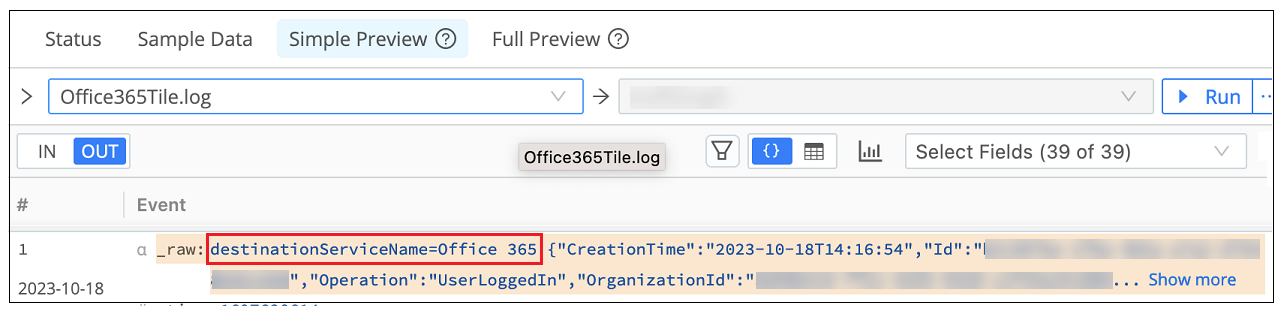

To verify that the exact condition is added properly to the front of outgoing logs, use the Simple Preview to check the outgoing message. It should look similar to the example below.

Save the pipeline configuration.

Navigate to Routing -> QuickConnect.

Create a connection between a specific source on the left and your Exabeam destination on the right. See QuickConnect in the Cribl documentation.

When the Connection Configuration dialog box is displayed, click the Pipeline option.

Select the radio button next to the pipeline you just created and click Save. The pipeline is added to the connection between your source and your Exabeam destination.

When the pipeline configuration is complete, verify that data from the relevant source is collected successfully in your Cribl Cloud Collector and that the collected logs are parsed correctly in downstream Exabeam services. If the data is not parsed as expected, you might need to add further logic to the pipeline. Consult your Cribl representative for help.
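The regex replacement performed by the Mask function can be approximated in Python to see what an augmented message looks like. This is a rough sketch rather than Cribl's implementation: the function name `add_exact_condition` and the sample log are hypothetical, and the single space placed between the exact condition and the original message is an assumption. The exact condition used here is the AWS CloudTrail example, destinationServiceName=AWS.

```python
import re

# Matches the start of the log message, like the Match Regex in the Mask function.
START_OF_MESSAGE = re.compile(r"^")


def add_exact_condition(raw: str, exact_condition: str) -> str:
    """Prepend the exact condition to the front of a log message."""
    # Leave messages alone if they already contain the condition.
    if exact_condition in raw:
        return raw
    # Assumption: one space separates the metadata from the original message.
    # A function replacement avoids backslash handling in the replacement text.
    return START_OF_MESSAGE.sub(lambda _match: exact_condition + " ", raw, count=1)


# Hypothetical CloudTrail-style message.
log = '{"eventName": "ConsoleLogin"}'
print(add_exact_condition(log, "destinationServiceName=AWS"))
```

The check for an existing condition keeps the sketch idempotent, so a message that passes through twice is not augmented twice.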

Parsers that Require Metadata Augmentation

This table lists Exabeam parsers, by vendor and product, that require augmentation in a Cribl log message in order for the log to be evaluated by the appropriate parser. For example, in order for a Cribl log message to be parsed by the Amazon AWS CloudTrail parser (amazon-awscloudtrail-sk4-app-activity-aws), the log message must contain a key/value pair in the form of the following exact condition: 'destinationServiceName=AWS'.

| Vendor | Product | Exabeam Parser | Exact Condition |
|---|---|---|---|
| Amazon | AWS CloudTrail | amazon-awscloudtrail-sk4-app-activity-aws | destinationServiceName=AWS |
| Amazon | AWS CloudWatch | | |
| Bitglass | Bitglass CASB | | |
| BlackBerry | BlackBerry Protect | | |
| Box | Box Cloud Content Management | | |
| Cisco | Cisco Meraki MX appliance | | |
| Cisco | Cisco Umbrella | | |
| Cisco | Duo Access | | |
| Citrix | Citrix Gateway | | |
| Citrix | Citrix ShareFile | | |
| Cloudflare | Cloudflare Insights | | |
| Cloudflare | Cloudflare WAF | | |
| Delinea | Centrify Zero Trust Privilege Services | | |
| Egnyte | Egnyte | | |
| GitHub | GitHub | | |
| Google | GCP CloudAudit | | |
| Google | Google Cloud Platform | | |
| Google | Google Workspace | | |
| Illumio | Illumio Core | | |
| LastPass | LastPass | | |
| Microsoft | Azure AD Activity Logs | | |
| Microsoft | Azure Monitor | | |
| Microsoft | M365 Audit Logs | | |
| Microsoft | Microsoft 365 | | |
| Microsoft | Microsoft CAS | | |
| Microsoft | Microsoft Defender for Endpoint | | |
| Microsoft | Microsoft DNS Log | | |
| Microsoft | Network Security Group Flow Logs | | |
| Mimecast | Mimecast Secure Email Gateway | | |
| Netskope | Netskope Security Cloud | | |
| Okta | Okta Adaptive MFA | | |
| OneLogin | OneLogin | | |
| Ping Identity | PingOne | | |
| Salesforce | Salesforce | | |
| ServiceNow | ServiceNow | | |
| Slack | Slack | | |
| Symantec | Symantec Advanced Threat Protection | | |
| Symantec | Symantec CloudSOC | | |
| Symantec | Symantec Web Security Service | | |
| Tenable.io | Tenable.io | | |
| VMware | Carbon Black CES | | |
| VMware | Carbon Black EDR | | |
| Zoom | Zoom | | |